| |

Your second midterm examination will appear here. It covers Chapters 6 - 11.

It will be a 50 minute, closed book exam.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

-

See previous exams:

Section and page number references in old exams refer to the edition of the text in use when that exam was given. You may have to do a little looking to map to the edition currently in use. Also, which chapters were covered on each exam varies a little from year to year.

Questions may be similar to homework questions from the book. You will

be given a choice of questions.

I try to ask questions I think you might hear in a job interview. "I

see you took a class on Operating Systems. What can you tell me about ...?"

Preparation hints:

Read the assigned chapters in the text. Chapter summaries are especially helpful. Chapters 1 and 2 include excellent summaries of each of the later chapters.

Read and consider Practice Exercises and Exercises at the end of each chapter.

Review authors' slides and study guides from the textbook web site

Review previous exams listed above.

See also Dr. Barnard's notes. He was using a different edition of our text, so chapter and section numbers do not match, but his chapter summaries are excellent.

Results on exam 1 suggest you may wish to maintain a vocabulary list.

Questions on projects.

Please sign the Marquette honor pledge:

"I recognize the importance of personal integrity in all aspects of life and work. I commit myself to truthfulness, honor and responsibility, by which I earn the respect of others. I support the development of good character and commit myself to uphold the highest standards of academic integrity as an important aspect of personal integrity. My commitment obliges me to conduct myself according to the Marquette University Honor Code."

Name _____________________________ Date ____________

Read and follow the directions:

- Write on four of the five questions. If you write more than four questions, only the first four will be graded. You do not get extra credit for doing extra problems.

- In the event this exam is interrupted (e.g., fire alarm or bomb threat), students will leave their papers on their desks and evacuate as instructed. The exam will not resume. Papers will be graded based on their current state.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- No electronic devices of any kind are permitted.

- Be sure I can read what you write.

- If I ask questions with parts

- . . .

- . . .

your answer should show parts

- . . .

- . . .

- The instructors reserve the right to assign bonus points beyond the stated value of the problem for exceptionally insightful answers. Conversely, we reserve the right to assign negative scores to answers that show less understanding than a blank sheet of paper. Both of these are rare events.

- Someone should have explained to you several years ago that "X is when ..." or "Y is where ..." are not suitable definitions (possibly excepting the cases in which X is a time or Y is a location). On Exam 2, "X is when ..." or "Y is where ..." earn -5 points.

The university suggests exam rules:

- Silence all electronics (including cell phones, watches, and tablets) and place in your backpack.

- No electronic devices of any kind are permitted.

- No hoods, hats, or earbuds allowed.

- Be sure to visit the rest room prior to the exam.

In addition, you will be asked to sign the honor pledge at the top of the exam:

"I recognize the importance of personal integrity in all aspects of life and work. I commit myself to truthfulness, honor and responsibility, by which I earn the respect of others. I support the development of good character and commit myself to uphold the highest standards of academic integrity as an important aspect of personal integrity. My commitment obliges me to conduct myself according to the Marquette University Honor Code."

Name ________________________ Date ____________

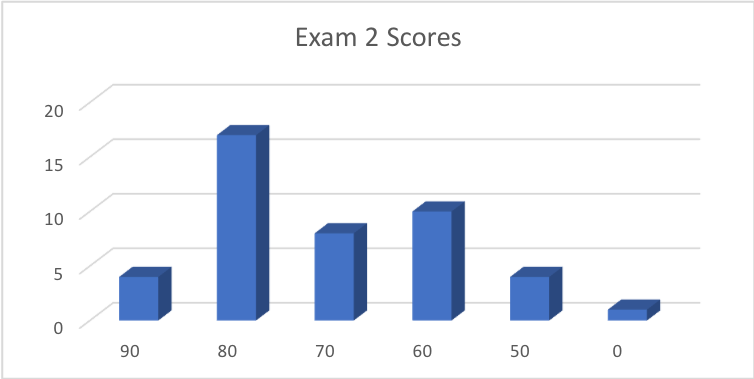

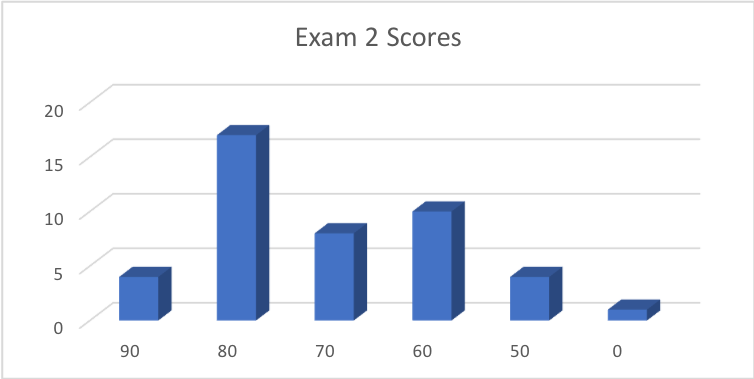

Score Distribution:

Median: 78; Mean: 75.3; Standard Deviation: 20.0

These shaded blocks are intended to suggest solutions; they are not intended to be complete solutions.

Problem 1 Definitions

Problem 2 Effective memory access time

Problem 3 How large should a quantum be?

Problem 4 Ultra-Tiny File System

Problem 5 Tricky C

Problem 1 Definitions

Give careful definitions for each term (in the context of computer operating systems):

- Preemptive scheduling

- Atomic instruction

- Readers-writers problem

- File-System mounting

- Processor affinity

- Load balancing

- External fragmentation

- Direct file access

Hint: A definition is 1 - 2 sentences.

Warning: A definition that starts "Z is when ...," or "Z is where ..." earns -5 points.

Specific questions were chosen from this collection (This list is not exhaustive for future exams):

- Active vs. Working set (in the context of memory management). Both concern memory addresses used by a program. The working set (Section 9.6.2) is the (fuzzy) set of pages the program is "naturally" using at the moment. The active set is the set of pages actually stored in memory at the moment. We hope that the active set contains the working set, more or less. When the working set is significantly larger than the active set, we get thrashing. However, I must cut you some slack, though, because our text makes no mention of the term "active set."

- Address binding (8.1.2) is the tying of a virtual address to a physical address. When do we know the physical address of a particular line of code of variable? Addresses can be bound at language design time, at language implementation time, at compile time, at load time, or during execution. Virtual memory is a technique for binding addresses during execution.

- Atomic execution [p. 210]: An instruction is implemented as one uninterruptable unit.

- Busy waiting [p. 213]. Waiting while holding the CPU, typically waiting by loop, doing nothing. For example

while (TestAndSet(&lock) ; // Does nothing

A process in a Waiting state (in the sense of the Ready-Running-Waiting state diagram) is not busy waiting because it is not holding the CPU.

- Cooperating process [p. 203]: One that can affect or be affected by other processes executing in the system.

- CPU-I/O burst cycle [p. 262]: Processes typically alternate between periods of high CPU activity and periods of high I/O activity

- Critical-Section problem [p. 206] solution must provide mutual exclusion, must allow each process to make progress, and must ensure the time a process must wait to enter its critical section is bounded.

- Deadlock vs. starvation [p. 217]. Both concern indefinite wait. "A set of processes is in a deadlocked state when every process in the set is waiting for an event that can be caused only by another process in the set." Starvation is also an indefinite wait not satisfying the definition of deadlock, often due to an accidental sequence of events. Starvation can occur in scheduling, but it can occur in other contexts.

- Deadlock necessary conditions [p. 318]: Mutual exclusion, Hold and wait, No preemption, Circular wait.

"Wait ON" vs. "Wait FOR." One waits ON superiors, to take care of, or to serve; e.g., a store clerk waits ON customers. One waits FOR peers, e.g., you might wait for a friend to go to the lab. A server process might be said to "wait on" a client process, but process scheduling, mutual exclusion, deadlock, etc., involve processes waiting FOR each other.

- Demand paging (9.2). Pages of virtual memory are loaded into physical memory only as they are needed.

- Error-correcting code [p. 479]: Encoding data is such a way that a certain number of errors can be detected and a (smaller) number of errors can be corrected. (Circular statements such as "Error-correcting codes are codes for correcting errors" received zero points.) Encryption codes protect against unauthorized access.

- File (p. 504). A named collection of related information recorded on secondary storage.

- File access: Sequential vs. direct. Sequential access (11.2.1) processes information in the file strictly in order. Direct access (11.2.2) can access records in a file in no particular order. "Direct file access allows a user to access a file directly" says NOTHING useful. "File access" concerns access to information within a file, not to a list of files. That would be accessing a directory. "File access" is about access to contents of a file, not to finding the file itself. "File access" does not depend on all or a part of the file being in memory.

- File-System mounting (11.4). The operating system places a file system (typically a disk or equivalent) at a specified location within the file system. For example, Windows mounts drives as letters in the MyComputer "folder." Did you ever wonder what Windows would do if you tried to mount more than 26 drives?

- Indexed file access, Similar to direct file access, except that an index is used to record pointers to blocks of a file. To find a record in the file, we search the index and use the pointer to access the file directly. Not the same as direct file access.

A different meaning is to store (index) the contents of many files, to that a desired file can be found quickly.

The first meaning is for accessing records of a single file; the second is for accessing many files.

- Internal vs. external fragmentation [p. 363]. Both are wasted memory. Internal fragmentation is within a page, segment, partition, or disk block. External fragmentation is memory that is free, but it is in such small units that it cannot be allocated effectively. It is wasted memory outside a segment, partition, or disk block. Paging avoids external fragmentation.

- Locality [p. 428]. The concept that if a location in memory (or on disk) is referenced, the probability increases that nearby locations will be referenced soon. Locality makes the entire memory hierarchy effective.

- Logical address space vs. Physical address space (Sect. 8.1.3)

- Memory hierarchy Processor registers > cache levels 1, 2, and 3 > main memory > disk cache > local disk storage > LAN storage > remote storage > off-line storage > remote storage. Trade-offs between speed, capacity, volatility, and cost.

- Memory mapped files [p. 430]. Using virtual memory techniques to treat a sequentially accessed file as routine memory access.

- Monitor vs. semaphore (for mutual exclusion). See Section 5.8 vs. 5.6. Both are means to enforce mutual exclusion. "The monitor construct ensures that only one process at a time can be active within the monitor." In Java, the keyword synchronized enforces a monitor-like discipline. Counting or binary semaphores provide mutual exclusion through indivisible operations wait() and signal().

- Mutual exclusion [p. 206] If one process P1 is executing in its critical section, then no other processes can be executing in their critical sections.

- NAS = Network-Attached Storage [p. 471]; SAN = Storage-Area Network [p. 472].

- Page vs. Page frame [p. 366]. A page is a unit of the virtual (apparent) memory of a process. A page frame is a physical location in memory.

- Page fault [p. 403]. CPU attempts to access a memory location on a page that is not found in the page table.

- Page replacement (9.4): FIFO, Optimal, LRU, approximate LRU, Second-chance.

- Preemption - Taking resources away from a process that holds them. Preempting a running process by a timer interrupt is one example, but in principle, the operating system could preempt any resource. Preemption is more than interruption. Preempting a running process takes away the CPU resource, but not necessarily its other resources.

- Preemptive scheduling (6.1.3).

In non-preemptive scheduling, CPU-scheduling, a process keeps the CPU until the process releases the CPU by terminating or by switching to a waiting state. In preemptive scheduling, the CPU can be taken away, most commonly in response to a timer interrupt. Preemptive scheduling concerns one resource, the CPU.

- Priority inversion [p. 217]: A high priority process is forced to wait for a lower priority process to release some resource(s).

- Priority scheduling [p. 270]: A process scheduling strategy which selects from among processes in the Ready list the one with the highest priority. May be used either with or without preemption.

- Processor affinity vs. load balancing (6.5.2). Both concern multi-processor scheduling. Processor affinity suggests a process should remain on the same processor to exploit caching. Load balancing suggests a process should be moved from an overloaded processor to a lightly loaded processor.

- Proportional share real-time CPU scheduling [p. 289]: Allocate T shares among all applications. An application can receive N shares of time, ensuring that the application is allocated N/T of the total processor time.

- Race condition [p. 205]: Several processes access and manipulate the same data concurrently, and the outcome depends on what happens to be the order of execution.

- RAID [p. 484]. Redundant arrays of independent/inexpensive disks use to provide data redundancy and rapid access.

- Rate-monotonic real-time CPU scheduling [p. 287]: Upon entering the system, each periodic task is given a static priority inversely based on its period. If a lower priority process is running, and a higher priority process becomes available to run, the lower priority process is interrupted.

the execution depends on the particular order in which the accesses take place.

- Readers-writers problem (5.7.2) is one of the classical problems of synchronization. Multiple reading processes and multiple writing processes need to share access to a single shared data resource.

- Resource allocation graph (7.2.2) is a graphical representation of processes i) requesting and ii) holding resources.

- Round-robin scheduling (6.3.4) is a preemptive schedule algorithms in which the Ready List is treated as a FIFO queue. Your answer needed to mention both the order in which processes are scheduled (FIFO) and preemption.

- Semaphore [p. 213]: An integer variable accessed only through the atomic operations wait() and signal().

- Shortest Job Next (SJN) [p. 267]: (or Shortest Job First) A process scheduling strategy which selects from among processes in the Ready list the one expected to take the shortest time to complete its CPU burst, that is, until it next waits for some resource. Requires OS to have a good estimate of how long each process will run. Typically not used with preemption, although we might preempt if estimated time is exceeded.

- Swapping [p. 385]. Similar in concept to paging, except that the units are entire processes, rather than pages.

- Starvation [p. 217]: A process may wait indefinitely. Broader than just scheduling.

- SSTF = Shortest-seek-time-first [p. 474]; C-SCAN [p. 476].

- Thrashing [p. 425]. Behavior of a process that does not have "enough" page frames allocated to it, characterized by frequent page faults.

- Working set [p. 427].

Problem 2 Effective memory access time

Assumptions:

- We are using a virtual memory memory system with paging

- At any point in the execution of our process, some pages are in memory

(and on the paging disk), and some pages are only on the

paging disk

- Part of the page table is kept in a Translation Lookaside Buffer (TLB),

and part is kept in memory. [Don't panic; you do not need to know what a

TLB is to answer this question.]

- It takes 5 nanoseconds to access the TLB

- It takes 50 nanoseconds to access memory

- It takes 100,000 nanoseconds to access the disk

- For each memory reference in our program, we first look

in the TLB (5 ns)

- 80% of the time, we find in the TLB the frame number in memory where the

desired reference can be found, and we access memory to find it (+ 50 ns)

- 20% of the time, we do not find the desired page in the TLB

- In that case, we must access memory to find the page table (+ 50 ns)

- 90% of that 20% (18% of the total), we find the frame number in memory

where the desired reference can be found, and we access memory to find it

(+ 50 ns)

- In the remaining 2% of the time, we have not found the frame number either

in the TLB or in the in-memory page table because the referenced page is

not in memory. We must go to the disk and retrieve it (+ 100,000 ns)

- In 10% of the cases described in assumption 12, before we can bring the

new page in, we must first copy the "victim" page currently holding

that frame back to the disk (+ 100,000 ns)

- Once the new page is copied from the disk into its page frame in memory,

we must access the referenced memory location (+ 50 ns)

- (2 points) What is the event called described in assumption 9?

- (2 points) What is the event called described in assumption 12?

- (2 points) In assumption 13, why must we sometimes write the victim page

back to the disk?

- (2 points) In assumption 13, why don't we always have to write the victim

page back to the disk?

- (8 points) On average, how many nanoseconds are required to make 1,000

memory references in this system?

- (4 points) If you wish to make the average memory access time faster, which

of these steps will you attack? Why? What operating system changes might

improve that part?

- TLB miss or page fault

- Page fault

- Its contents may have changed since it was read (or last written)

- Its contents may not have changed since it was read

(or last written). For example, for a page of code, the copy in memory is the same as the copy on the disk (unless we have self-modifying code), so there is no need to write the in-memory copy back to the disk. That is a common case, which is why the 10% specified in Assumption 13 is so low.

-

| Assumption |

Time each (ns) |

Times of 1000 |

Time total (ns) |

| 7 |

5 |

1000 |

5,000 |

| 8 |

50 |

800 |

40,000 |

| 10 |

50 |

200 |

10,000 |

| 11 |

50 |

180 |

9,000 |

| 12 |

100,000 |

20 |

2,000,000 |

| 13 |

100,000 |

2 |

200,000 |

| 14 |

50 |

20 |

1,000 |

| Total |

|

|

2,265,000 |

If you can do that faster or more accurately on a calculator than in your head, your head needs some practice. I cooked the numbers on purpose for mental calculations.

- Clearly, the cost of disk access dominates the total. You have three

alternatives:

- Make disk access faster (reduce the 100,000), but that's not an OS change.

Replace a mechanical disk by an SSD, but that's not an OS change, either.

A better disk scheduling algorithm MIGHT help a little.

- Fewer page faults (reduce the 20):

Larger memory, but that is not an OS change

Larger working set for this process

Better page replacement strategy

Anticipatory paging could help

- Fewer write-backs (reduce the 2)

Implement "sneaky write" on dirty bit

More frequent write-back to clear dirty bit

Variant: Buffer as-needed writebacks so they happen later, but are hidden

You might also reduce the TLB miss rate:

Larger TLB, but that is not an OS change

Better TLB replacement strategy

Of course, more or faster memory would help, but that's not an OS improvement.

Problem 3 How large should a quantum be?

Suppose we are using preemptive process scheduling. Choosing the correct

quantum size (how long a process is allowed to run) is important to the

effective operation of the operating system. Consider a single processor

operating system that supports a large number of interactive users. Each

time a process gets the processor, the interrupt clock is set to interrupt

after the quantum Q expires. Assume that the same quantum is used for all

processes.

- (5 points) What is the effect of setting the quantum to a very large value, say

ten minutes?

- (5 points) What is the effect if the quantum is set to a very small value, say a

few processor cycles?

- (15 points) Your answers to parts a) and b) should suggest that an appropriate

quantum must be in between these extremes. Suppose you could turn a dial

(or drag a slider bar) and vary the quantum. How would you know when you

had chosen the "right" value? What factors make this value right from

the user's perspective? What factors make it right from the system's

point of view?

Hint: This is a mathematical modeling question. It should remind you of

some calculus homework problems. Be as quantitative as possible.

I expect a mathematical treatment of some kind.

- There is essentially no preemption, so this is like a run-to-completion scheduling, with the running process getting excellent service, and some processes waiting a very long time. System response seems terrible. The advantage is that the CPU spends almost all of its time doing useful work. Throughput is high. If a process finishes in less than Q, surely we would bring in the next process and not do nothing, so a short process should not take its full quantum.

A long quantum does not lead to starvation. In fact, there is little wasted time in context swap, and we make steady progress through our (essentially) FIFO Ready list.

- Almost no useful work would be done. How long does a context swap take?

You could accuse this quantum strategy of starvation. Since no useful work is done, everyone can be forced to wait for an unbounded time.

- Be as quantitative as possible. I expect a mathematical treatment of some kind

(or -10). Even a simple graph may suffice.

State clearly what you will optimize. What are your criteria for "goodness?" Candidates include

- CPU time doing useful work / Total CPU time? a) wins!

- Average wait time

- Average response time

- Average number of jobs completed per hour

- Calls to the help desk

- Median or maximum of any of the above

- Variability in any of the above

You might take Q = 1 / (2 x number of users) so everyone gets two CPU bursts per second.

You might take the max of that with about 100 x cost of context swap, so each process gets a chance to

do something useful while it is executing, even if there are MANY users.

You might take half (or 80%) of the average CPU burst of the job pool.

With both a) and b), one problem is long waiting times, so you might choose Q to minimize the

average waiting time.

With any of these rules of thumb, you need to argue how that might maximize or minimize your preferred metric.

Discussing simulation satisfies the "quantitative" requirement.

Several people talked about processes finishing. In this context, a process moving itself to a wait state by requesting some resource is a far more common way for a process to relinquish the CPU than by terminating.

Problem 4 Ultra-Tiny File System

Describe how you would extend the XINU Ultra-Tiny File System of Project 8 to provide

arbitrary length files.

Hint: I expect 1 - 2 pages with a mixture of pictures/diagrams, English text,

and pseudo-code at a level of detail roughly similar to the explanations Dennis gives in class.

We often ask about the current homework project to reward students who start working on it before the last minute. I'd like you to have a competitive advantage if you started when you should have.

The very few students who attempted this problem received bonus points for preparation.

What is the current limitation that restricts the size of a file?

Size of a block (and number of blocks)

In the spirit of our OS, the block size is a property of the (virtual) hard drive, and the OS cannot change it by fiat.

Solution: Multiple blocks in a file. Any of the allocation methods in 11.3

would work, including contiguous allocation, linked allocation, file allocation table, in increasing order of complexity.

If you said, "We'll just declare how long the file is," assuming that block size is fixed, you are proposing a contiguous allocation. Then, you have to describe how the OS knows a) where the file begins and b) where the file ends. What are the disadvantages of contiguous allocation? What limits remain?

A linked allocation strategy was the most commonly suggested. That works, but storing the links in the block reduces the data block size. In a more advanced operating system, that would interfere with other OS capabilities, e.g., buffering files by block or paging. Also, if one block is lost, all following blocks are lost. It is fine for you to suggest linked allocation as a solution, but you should acknowledge its disadvantages.

Of course, that does not do much good with such a limited number of blocks,

but supporting a larger number of blocks is a different question.

Having the OS declare larger blocks would work in XINU (although with some BAD side effects), but it destroys the concept of hard disk blocks we are trying to understand and simulate.

Problem 5 Tricky C

The code below assumes it is being run in XINU. It models the classical Dining Philosophers problem with MAXFORKS forks (represented by semaphores) and MAXFORKS philosophers (represented by processes). Each philosopher process has an assigned ID (0 <= i < MAXFORKS). This solution assigns to each philosopher process a different priority, equal to the ID value.

void philosopher(int id, semaphore forks[]) {

int myLeft, myRight;

myLeft = id;

myRight = (id + 1) % MAXFORKS;

while (1) {

printf("Philosopher %d thinks\n", id);

sleep(200);

wait(forks[myLeft]);

wait(forks[myRight]);

printf("Philosopher %d eats\n", id);

sleep(200);

signal(forks[myRight]);

signal(forks[myLeft]);

}

}

int main(int argc, char **argv) {

semaphore forks[MAXFORKS];

for (i = 0; i < MAXFORKS; i++) {

forks[i] = newsem(1);

ready(create((void *)philosopher, INITSTK, i, "PHIL",

2, i, forks), RESCHED_NO);

}

resched();

...

}

- Does this instance of the Dining Philosophers potentially deadlock? Explain.

- If not, make a rigorous argument for why not.

- If so, change the philosopher process to prevent deadlock. Explain.

Hint: You are welcome to work on this page of the exam. Be sure your name is on it.

Dr. Brylow writes:

The exam version has most of the output statements removed to make the code shorter,

but if you put a printf() after each wait() and signal(), you get something like:

Philosopher 4 has acquired left fork

Philosopher 3 has acquired left fork

Philosopher 2 has acquired left fork

Philosopher 1 has acquired left fork

Philosopher 0 has acquired left fork

and then deadlock.

Interestingly, as I was capturing the output for you above, I realized

that this question may be even subtler than I thought. I cleaned the output

statements out to make the code shorter for the exam, but that also

eliminates the deadlock. Without a potentially blocking I/O call between the

two wait() calls, nothing can stop the highest priority process from

acquiring both his forks if the scheduler is simple priority-based. Even if

he is preempted, priority scheduling indicates that the highest numbered

philosopher will be ready to run again before any lower priority

philosophers.

If the scheduler uses aging, or if there is any blockable call between

the two wait() calls, then deadlock becomes possible again.

But that's really just a quirky, non-circular property of this particular

deterministic implementation of the Dining Philosophers. If you add random

wait times into the mix, it is still possible for the highest priority

philosopher to get his first fork, but not the other, and then we have

potential circular wait again.

Ahhh, concurrence.

Corliss: I gave full credit to some solutions that said, "Yes," and full credit to some solutions that said, "No." I looked for a careful analysis. Similarly, some "yes" answers and some "no" answers received only a few points.

Several students looked for four conditions of deadlock, but did not apply the search appropriately in this scenario.

We have mutual exclusion. Philosophers do not share forks simultaneously, although you could design a solution that does.

We have no preemption. Preemption would take a fork away from a philosopher who holds it. No philosopher takes a fork away from a neighbor, although you could design a solution that does.

We have hold and wait. Once a philosopher acquired a fork, that fork is held while the holder waits for the other fork, although you could design a solution in which a philosopher releases his first fork if his second is not available.

Hence, the question revolves around circular wait. As formulated, circular wait is possible. To prevent deadlock, you could enable sharing or preemption, or you could force the return of one fork if the other is not available, but the easiest solutions prevent circular wait in some way, e.g., insert a mutex around the two wait() calls.

Release()? But there is no preceding acquire(). Implicitly, wait() acquires a fork, and signal() releases it.

Reverse signals()? No, the deadlock may have already occurred. The problem is with the wait() calls.

Check in advance? If you check whether both forks are available before you pick them up, your solution is susceptible to a race condition, resulting in failure to prevent deadlock.

The calls to sleep() do not matter. Their only affect is to force interrupts to occur in unfortunate places. There is no solution in moving the calls to sleep() around. Even if the wait() calls are close together, there still could be an interrupt between them.

Do the priorities matter?

Another solution has even-numbered philosophers pick up first left, then right, and odd-numbered philosophers pick up first right, then left.

You could put extra forks on the table, but then it is no longer the classical dining philisophers problem.

| |