| |

Your first midterm examination will appear here. It covers Chapters 1 - 5, omitting Sections 3.6 (we'll cover that with networking), 4.3 - 4.7, 5.3, 5.8, 5.9, and 5.10. The strongest emphasis is on Sections 3.1 - 5, 4.1, and 5.1, 5.2, and 5.4-7.

It will be a 50 minute, closed book exam.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

-

See previous exams:

Section and page number references in old exams refer to the edition of the text in use when that exam was given. You may have to do a little looking to map to the edition currently in use. Also, which chapters were covered on each exam varies a little from year to year.

Some questions may be similar to homework questions from the book. You will be given a choice of questions. I try to ask questions I think you might hear in a job interview. "I see you took a class on Operating Systems. What can you tell me about ...?"

Preparation hints:

Read the assigned chapters in the text. Chapter summaries are especially helpful. Chapters 1 and 2 include excellent summaries of each of the later chapters.

Read and consider Practice Exercises and Exercises at the end of each chapter.

Review authors' slides and study guides from the textbook web site.

Review previous exams listed above.

See also Dr. Barnard's notes. He was using a different edition of our text, so chapter and section numbers do not match, but his chapter summaries are excellent.

Read and follow the directions:

- Write on four of the five questions. If you write more than four questions, only the first four will be graded. You do not get extra credit for doing extra problems.

- Each problem is worth 25 points.

- In the event this exam is interrupted (e.g., fire alarm or bomb threat), students will leave their papers on their desks and evacuate as instructed. The exam will not resume. Papers will be graded based on their current state.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- No electronic devices of any kind are permitted.

- Be sure I can read what you write.

- If I ask questions with parts

- . . .

- . . .

your answer should show parts in order

- . . .

- . . .

- The instructors reserve the right to assign bonus points beyond the stated value of the problem for exceptionally insightful answers. Conversely, we reserve the right to assign negative scores to answers that show less understanding than a blank sheet of paper. Both of these are rare events.

The university suggests exam rules:

- Silence all electronics (including cell phones, watches, and tablets) and place in your backpack.

- No electronic devices of any kind are permitted.

- No hoods, hats, or earbuds allowed.

- Be sure to visit the rest room prior to the exam.

In addition, you will be asked to sign the honor pledge at the top of the exam:

"I recognize the importance of personal integrity in all aspects of life and work. I commit myself to truthfulness, honor and responsibility, by which I earn the respect of others. I support the development of good character and commit myself to uphold the highest standards of academic integrity as an important aspect of personal integrity. My commitment obliges me to conduct myself according to the Marquette University Honor Code."

Name _____________________________ Date ____________

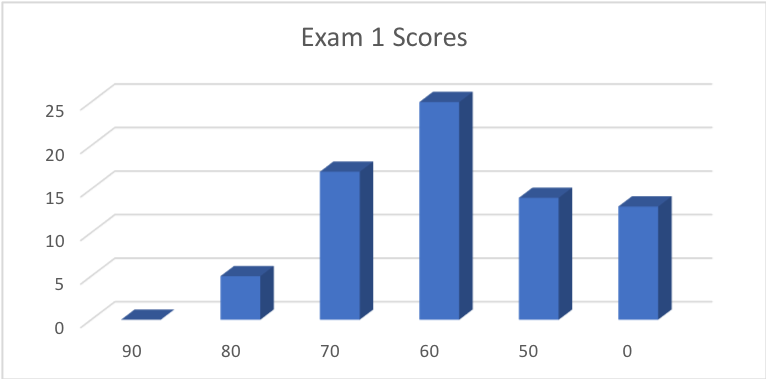

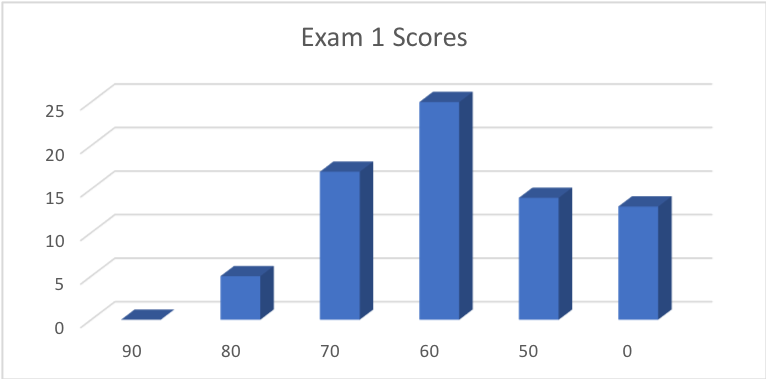

Score Distribution:

Median: 64; Mean: 62.3; Standard Deviation: 17.0. Plus 7 point bonus.

These shaded blocks are intended to suggest solutions; they are not intended to be complete solutions.

Problem 1 Vocabulary

Problem 2 Ready List

Problem 3 Start-up

Problem 4 Producer-Consumer using Semaphores

Problem 5 Synchronization

Problem 1 Vocabulary

Give careful definitions for each term (in the context of computer operating systems):

- Process

- Process Control Block

- Blocking send and Non-blocking send

- Race condition

- Critical-Section problem

- Deadlock

Warning: A definition that starts "Z is when ...," or "Z is where ..." earns -5 points.

- Process: [P. 105] Program in execution, a unit of work in a modern time-sharing system. A process can be in Running, Ready, or any one of several Wait states.

- Process Control Block (PCB): [Section 3.1.3] Representation of a process in the operating system, maintaining attributes such as PID, memory information, saved register values, open files, etc..

- Non-blocking send and blocking send: [Section 3.4.2.2] Send a message.

Non-blocking: Store the message with the OS and return immediately to the sending process.

Blocking: Store the message with the OS. Wait to return until the message is received.

Wait is not necessarily busy waiting. The receiver does not have to send a reply. Spell "receive" correctly.

- Race condition [p. 205]: Several processes access and update the same data concurrently,

and the outcome depends on what happens to be the order of execution.

Two processes writing to a shared location is not necessarily incorrect.

Incorrect results can occur if a process is interrupted while it is updating the shared location.

- Critical-Section problem [Section 5.2] Design a protocol that cooperating processes can use to change common variables. When one process is executing its critical section, no other process is allowed to execute its critical section.

- Deadlock vs. starvation [p. 217]. Both concern indefinite wait.

"A set of processes is in a deadlocked state when every process in the set is waiting for an event that can be caused only by another process in the set."

Waiting for something that never happens is not necessarily deadlock.

Starvation is also an indefinite wait not satisfying the definition of deadlock, often due to an accidental sequence of events.

Vocabulary list. I ask mostly about terms that appear as section, subsection, or subsubsection headings in the text, but any phrase printed in blue in the text is fair game. Here are some of the terms I might ask you to define. This list is not exhaustive:

- Batch system: Typically large, run at off-peak times, with no user present. E.g., overnight accounting runs, daily update of Checkmarq records. Batch mode is an old business data processing mode, still VERY widely used in mainframe business data processing. TABot is a batch system.

- Blocking receive vs. Non-blocking receive: [Section 3.4.2.2.]

- Non-blocking send and blocking send: [Section 3.4.2.2] Send a message.

Non-blocking: Store the message with the OS and return immediately to the sending process.

Blocking: Store the message with the OS. Wait to return until the message is received.

Wait is not necessarily busy waiting. The receiver does not have to send a reply. Spell "receive" correctly.

- Busy waiting [p. 213]. Waiting while holding the CPU, typically waiting by loop, doing nothing. For example

while (TestAndSet(&lock) ; // Does nothing

A process in a Waiting state (in the sense of the Ready-Running-Waiting state diagram) is not busy waiting because it is not holding the CPU.

- Client-Server computing and Peer-to-peer computing: [Section 1.11.4 & 5.] Client-server is a master-slave relationship. Client requests, server answers. Which is the master?

- Clustered systems: [Section 1.3.3] Two or more individual (computer) systems gathered together to accomplish computational work.

- Critical-Section problem [Section 5.2] Design a protocol that cooperating processes can use to change common variables. When one process is executing its critical section, no other process is allowed to execute its critical section.

- Interactive system: Typically, a user is present.

- Embedded system: A dedicated function within a larger mechanical or electrical system, often with real-time computing constraints.[1][2] It is embedded as part of a complete device often including hardware and mechanical parts. Embedded systems control many devices in common use today. -- Wikipedia.

- Deadlock vs. starvation [p. 217]. Both concern indefinite wait.

"A set of processes is in a deadlocked state when every process in the set is waiting for an event that can be caused only by another process in the set."

Waiting for something that never happens is not necessarily deadlock.

Starvation is also an indefinite wait not satisfying the definition of deadlock, often due to an accidental sequence of events.

- IPC: [Sect. 3.4 & 3.5] Interprocess Communication: shared memory and message passing. It

is important to know at least some of the TLAs (Three Letter Abbreviations).

- Kernel: [P. 6] Part of the OS that is running all the time, core OS processes.

- Kernel node vs. user mode: States of the processor. Normally, the processor is in user mode, executing instructions on behalf of the user. The processor must be in kernel mode to execute privileged instructions.

- Kernel thread: [Section 4.3] Created by OS processes.

- Kernighan's Law: [P. 87] "Debugging is twice a hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it." You should recognize the name Kernighan as one of the authors of our C textbook

- Message passing systems: [Sect. 3.3.4.2] A mechanism to allow processes to communicate and to synchronize their actions without sharing the same address space. A message-passing facility provides at least two operations: send(message) and receive(message). Be careful to avoid circular definitions: "Message passing systems pass messages" counts zero points. "recieve" earns -2 points.

- Non-blocking receive: [Section 3.4.2.2] Receive a message. If no message is there, return from the receive() function all immediately.

- Non-blocking send and blocking send: [Section 3.4.2.2] Send a message. Non-blocking: Store the message with the OS and return immediately to the sending process. Blocking: Store the message with the OS. Wait to return until the message is received.

- Operating system: [P. 3] A program that manages the computer hardware, provides a basis for application programs, and acts as an intermediary between the computer user and the computer hardware.

- Operating system calls: [Section 2.3] An interface to operating system services made available to applications software by an operating system.

- Priority inversion [p. 217]: A high priority process is forced to wait for a lower priority process to release some resource(s).

- POSIX: Portable Operating System Interface. We looked at POSIX examples for shared memory, message passing, and Pthreads.

- Pipes: [Sect. 3.6.3] A pipe acts as a conduit allowing two processes to communicate. A pipe is usually treated as a special type of file, accessed by ordinary read() and write() system calls.

- Privileged instructions: [P. 22] Some hardware instructions that may cause harm. May be executed only in kernel mode. Examples: Instruction to switch to kernel mode, I/O control, timer management, interrupt management

- Process: [P. 105] Program in execution, a unit of work in a modern time-sharing system. A process can be in Running, Ready, or any one of several Wait states.

- Process Control Block (PCB): [Section 3.1.3] Representation of a process in the operating system, maintaining attributes such as PID, memory information, saved register values, open files, etc..

- Process scheduling: [Section 3.2] Switch between processes so frequently that users can interact with each process. Select a process from the Ready list for execution in the CPU.

- Process State: [Sect. 3.1.2] Defined by the current activity of the process. Each process may be in one of: New, Running, Waiting, Ready, Terminated.

- Process vs. Thread: [P. 105-106, 163] A process is a program in execution. A process may include several threads. A thread is a basic unit of CPU use. It includes a thread ID, a program counter, a register set, and a memory stack.

- Protection vs. Security: [Section 1.9.] Protection - Mechanisms for controlling access to the resources provided by a computer system. Protection controls access to computer system resources. Security defends a system from internal and external attacks. Protection <> Security.

- Race condition [p. 205]: Several processes access and update the same data concurrently,

and the outcome depends on what happens to be the order of execution.

Two processes writing to a shared location is not necessarily incorrect.

Incorrect results can occur if a process is interrupted while it is updating the shared location.

- Real-time system: [Section 1.11.8] Typically time-sensitive interaction with external devices.

- RPC (in context of inter-process communication): [Sect. 3.6.2] Remote Procedure Calls abstract the procedure-call mechanism for use between systems with network connections using message-based communication.

- Shared memory systems: [Sect. 3.4.1] A region of memory residing in the address space of two or more cooperating processes. "Shared memory is memory that is shared" is circular.

Alternative: Separate CPUs that address a common pool of memory

- Shared memory vs. Message passing: [Section 3.4.] Be careful to avoid circular definitions: "Shared memory is memory that is shared" counts zero points.

- Sockets: [Sect. 3.6.1] An endpoint for communication. A pair of processes communicating over a network employs a pair of sockets. A socket is identified by an IP address and a port number.

- Symmetric multiprocessing (SMP): [P. 15] Multiprocessing system in which each processor performs all tasks within the operating system. Does your "definition" hold also for asymmetric?

- Thread cancelation: [Sect. 4.6.3] Task of terminating a thread before it has completed.

- Thread library: [Section 4.4] Provides a programmer with an API for creating and managing threads.

- Thread-Local Storage: [ 4.6.4] Data of which each thread must keep its own copy. Do not confuse with local variables.

- Thread pool: [Sect. 4.5.1] Create a number of threads at process startup and place them in a pool, where they sit and wait for work.

- Time sharing: [P. 20] The CPU executes multiple jobs by switching among them, but the switches happen so frequently that the users can interact with each program while it is running.

- User mode vs. Kernel mode: [Section 1.5.1] User mode is a state of the operating system in which most instructions of user applications are executed. Kernel mode is a state of the operating system in which some critical portions of the operating system is executed. What is it called when a user process makes an operating system call for service? Most operating system code is executed in user mode.

- User thread: [Section 4.3] Created by user processes.

- Virtual machine: [Section 1.11.6] Abstract the hardware of a single computer into several different execution environments, creating the illusion that each computing environment is running its own private computer.

Problem 2. Ready List

In the current project, the Ready List structure is maintained as a doubly-linked queue data structure, conceptually in an array of nodes:

struct queueNode {

int prev;

int next;

};

struct queueNode readyList[?];

int head = ?;

int tail = ?;

We distinguish between the Ready List structure, which is the concept of the code fragment above, from the readyList array.

Initially, Process 0 is running a do-nothing "null" process, and there are no other processes created (yet) for this core. Process 0 is running, so it is not in the Ready List structure.

- What should be the size of the readyList array? Explain why.

- What should be given as the initial values of head and tail? Explain why.

- What should be the initial values of prev and next in each queueNode? Explain why.

- Draw a picture that illustrates this initial empty state of the Ready List structure. Explain anything about your picture that may not be clear to the grader.

- Suppose the following sequence of events occur. Let's agree to insert at the tail and remove from the head.

- Create Process 5 and insert it into the Ready List structure

- Create Process 2 and insert it into the Ready List structure

- Create Process 6 and insert it into the Ready List structure

- The process at the head of the Ready List is made RUNNING. Warning: Tricky.

- That process kill()s Process 6

Draw a picture that illustrates the state of the Ready List structure at this point. Explain anything about your picture that may not be clear to the grader.

- (2 points) Maximum number of processes the system can support. Each process has a slot in this data structure,

whether it is in the Ready List or not, even whether it exists or not. In the assignment, the size in increased by

two because the Head and Tail are stored here, too.

- (1 point) Something to represent logical NULL. Zero is not a good choice, since there is a Process 0. I'd choose -1.

- (1 point) Something to represent logical NULL. Zero is not a good choice, since there is a Process 0.

It really does not matter, since they should be over-written anyway. I'd choose -1.

- (5 points) Initial Ready List structure:

|

|

readyList

| pid | prev | next |

|---|

| 0 | -1 | -1 |

| 1 | -1 | -1 |

| 2 | -1 | -1 |

| ... | ... | ... |

|

|

- (15 points)

- Create Process 5 and insert it into the Ready List structure

|

|

readyList

| pid | prev | next |

|---|

| 0 | -1 | -1 |

| 1 | -1 | -1 |

| 2 | -1 | -1 |

| 3 | -1 | -1 |

| 4 | -1 | -1 |

| 5 | -1 | -1 |

| 6 | -1 | -1 |

| 7 | -1 | -1 |

| ... | ... | ... |

|

|

- Create Process 2 and insert it into the Ready List structure

|

|

readyList

| pid | prev | next |

|---|

| 0 | -1 | -1 |

| 1 | -1 | -1 |

| 2 | 5 | -1 |

| 3 | -1 | -1 |

| 4 | -1 | -1 |

| 5 | -1 | 2 |

| 6 | -1 | -1 |

| 7 | -1 | -1 |

| ... | ... | ... |

|

|

It is OK if your prev and next are reversed.

- Create Process 6 and insert it into the Ready List structure

|

|

readyList

| pid | prev | next |

|---|

| 0 | -1 | -1 |

| 1 | -1 | -1 |

| 2 | 5 | 6 |

| 3 | -1 | -1 |

| 4 | -1 | -1 |

| 5 | -1 | 2 |

| 6 | 2 | -1 |

| 7 | -1 | -1 |

| ... | ... | ... |

|

|

- The process at the head of the Ready List is made RUNNING

Trick: Process 0 was running and must now be inserted into the ready list. If you do not put Process 0 into the Ready List, when all other processes finish, you will have nothing to run.

|

|

readyList

| pid | prev | next |

|---|

| 0 | 6 | -1 |

| 1 | -1 | -1 |

| 2 | -1 | 6 |

| 3 | -1 | -1 |

| 4 | -1 | -1 |

| 5 | -1 | -1 |

| 6 | 2 | 0 |

| 7 | -1 | -1 |

| ... | ... | ... |

|

|

- That process kill()s Process 6

|

|

readyList

| pid | prev | next |

|---|

| 0 | 2 | -1 |

| 1 | -1 | -1 |

| 2 | -1 | 0 |

| 3 | -1 | -1 |

| 4 | -1 | -1 |

| 5 | -1 | -1 |

| 6 | -1 | -1 |

| 7 | -1 | -1 |

| ... | ... | ... |

|

|

- Draw a picture that illustrates the state of the Ready List structure at this point. Explain anything about your picture that may not be clear to the grader.

Above

We highly encourage you to do this sort of desk-checking with your code, print out your Ready List structure, and see if it matches what you expected.

Problem 3. Start-up

What is a likely sequence of events that must happen when you turn on your laptop or desk-top computer?

How does your computer get started? I am looking for roughly 10 steps.

Step 0: Turn on the power switch

. . . < < Your answer happens here

Step n: Ready for you to begin using it - Ready for your first mouse action or key press

Hints: You answer should demonstrate your understanding of the operating system and the hardware it controls. You probably do not know the details, but you should be able to think through the order in which events must occur and where certain critical information must come from. Insights may be drawn from your experiences, what you have seen so far in XINU, the discussion in Section 1.2 of the text, and their logical consequences.

Similar to 2017 final exam

See Section 1.2 of our text. In brief:

- Must start executing code from nonvolatile Read Only Memory [or -4]

- which reads a loader from fixed location on disk or network (using what drivers?)

- which loads the full OS from disk or network

- initializes system tables

- starts various OS processes

- including command processor and/or GUI event handler [or -2]

This level of detail earns C. More detail is expected for scores > 10.

It is critical to tell where to find each loader and the OS itself.

The hardware starts in fetch, decode, execute. Where do the very first instruction come from? What do they do?

If there are 100+ processes running before you log in, what are they? How does the OS know what to start? How does the OS launch them?

You might want to develop a deeper understanding of what is "kernel" and what is not.

Since the problem specifically mentioned "ready for

you to begin using it," you lost 2 points if you did not explicitly mention

something about receiving user input.

If you describe what you see on the screen (external manifestations) vs. the

inner workings, you earn at most 10 of 25 points.

Problem 4. Producer-Consumer using Semaphores

A semaphore S is an integer variable accessed only through two atomic hardware operations defined as

SHARED:

int S; |

| |

wait(S) {

while (S <= 0) ; /* wait */

S --;

} |

signal(S) {

S ++;

} |

Consider this solution to the Producer-Consumer problem:

SHARED:

Semaphore countAvailableItems = 0;

Semaphore countAvailableSpace = BUFFER_SIZE;

double buffer[BUFFER_SIZE]; |

| |

Producer:

int in = 0;

while (true) {

// Produce item into nextProduced

signal(countAvailableItems);

buffer[in] = nextProduced ;

wait(countAvailableSpace);

in = (in + 1) % BUFFER_SIZE;

} |

Consumer:

int out = 0;

while (true) {

signal(countAvailableSpace);

nextConsumed = buffer[out];

wait(countAvailableItems);

out = (out + 1) % BUFFER_SIZE;

// Consume item in nextConsumed

} |

Explain how the semaphores work

- to enforce mutual exclusion

- to allow each process to make progress, and

- to assure bounded waiting.

Warning: This is a test question.

They don't.

The signal() and wait() calls in both processes are reversed, so the operation of the code is simply wrong.

Perhaps the simplest example of incorrect behavior: Suppose the Consumer runs first:

- signal() sets countAvailableSpace = BUFFER_SIZE + 1, which is nonsense

- nextConsumed = buffer[out]; retrieves an item that has not yet been produced - nonsense

- wait(countAvailableItems); prevents Consumer from leaving its critical section.

Besides Consuming what has not been produced, we effectively have reversed Critical Section with NOT Critical section.

I did not expect you to just know the calls were reversed; I expected you to do sample desk-checks

similar to mine above. To make matters harder, if the Producer runs first, it appears to work, at least

for several iterations before its Critical and non-Critical sections are shown to be reversed.

Grading: If you said this works, you received a maximum of 12 points, with more deductions depending on what you missed seeing.

These are the kinds of errors all programmers make. you must practice careful desk-checking

following what your code actually does (not what you think it does), or your projects will go poorly.

Problem 5. Synchronization

Consider code for allocating (creating) and releasing (destroying) processes:

#define MAX_PROCESSES 50

int numberOfProcesses = 0;

/* The implementation of fork() calls this function: */

int AllocateProcess() {

int newPID;

if ( MAX_PROCESSES <= numberOfProcesses ) {

return -1;

} else {

/* allocate necessary process resources */

++ numberOfProcesses;

return newPID;

}

}

/* The implementation of exit() or kill() calls this function: */

void ReleaseProcess() {

/* release processes resources */

-- numberOfProcesses;

}

- Identify the race conditions.

- Assume you have a mutex lock named mutex with the operations acquire() and release(). Indicate where the locking (calls to acquire() and release()) needs to be placed to prevent the race conditions.

- Why did you put acquire() and release() where you did?

- Could we replace the integer variable

int numberOfProcesses = 0;

with the atomic variable

atomic_int numberOfProcesses = 0;

to prevent the race condition? (You may want to explain what you interpret "atomic variable" to mean.)

- If you answered part d) "Yes," explain how that prevents the race condition.

If you answered part d) "No," explain why that does not prevent the race condition.

Derived from Problem 5.20, p. 246 of our textbook.

- (10 points. -8 if you missed including if ( MAX_PROCESSES <= numberOfProcesses ))

int newPID;

if ( MAX_PROCESSES <= numberOfProcesses ) {

return -1;

} else {

/* allocate necessary process resources */

++ numberOfProcesses;

return newPID;

}

and

-- numberOfProcesses;

numberOfProcesses is a shared resource potentially updated by two different processes running functions AllocateProcess() and ReleaseProcess() simultaneously.

- (3 points)

int newPID;

acquire(mutex)

if ( MAX_PROCESSES <= numberOfProcesses ) {

release(mutex)

return -1;

} else {

/* allocate necessary process resources */

++ numberOfProcesses;

release(mutex)

return newPID;

}

and

acquire(mutex)

-- numberOfProcesses;

release(mutex)

In AllocateProcess(), we must release() before each return because the closing brace of the function is never reached (or -2).

Need acquire() and release() pair in each function (or -4).

Thanks to Magnus Fyhr for pointing out the error in my first-posted solution.

- (6 points) If if ( MAX_PROCESSES <= numberOfProcesses ) through ++ numberOfProcesses; and -- numberOfProcesses; are

the critical sections, (part a), then I should acquire the mutex lock before each critical section and release it after.

- (3 points) No.

- (3 points) I do not know what it means for a storage location to be divisible or atomic.

If we interpret "atomic variable" to mean that operations on that variable are atomic,

making atomic_int numberOfProcesses = 0; has exactly the same effect as the code in

part b) - the critical section updates ++ numberOfProcesses;

and -- numberOfProcesses; cannot be interrupted. Without the if ( ... ),

with that definition of "atomic variable" it works. However, the separate

access in the Boolean test can be separated from the update.

If you defined "atomic variable" differently, you may have concluded differently.

| |