| |

Your first midterm examination will appear here. It covers Chapters 1 - 5 (subject to change).

It will be a 50 minute, closed book exam.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

-

See previous exams:

Questions will be similar to homework questions from the book. You will

be given a choice of questions.

I try to ask questions I think you might hear in a job interview. "I

see you took a class on Operating Systems. What can you tell me about ...?"

See also Dr. Barnard's notes.

He was using a different edition of our text, so chapter and section numbers

do not match, but his chapter summaries are excellent.

Directions:

Read and follow the directions.

- Write on four of the five questions. If you write more than four questions, only the first four will be graded. You do not get extra credit for doing extra problems.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- Be sure I can read what you write.

- If I ask questions with parts

- . . .

- . . .

your answer should show parts

- . . .

- . . .

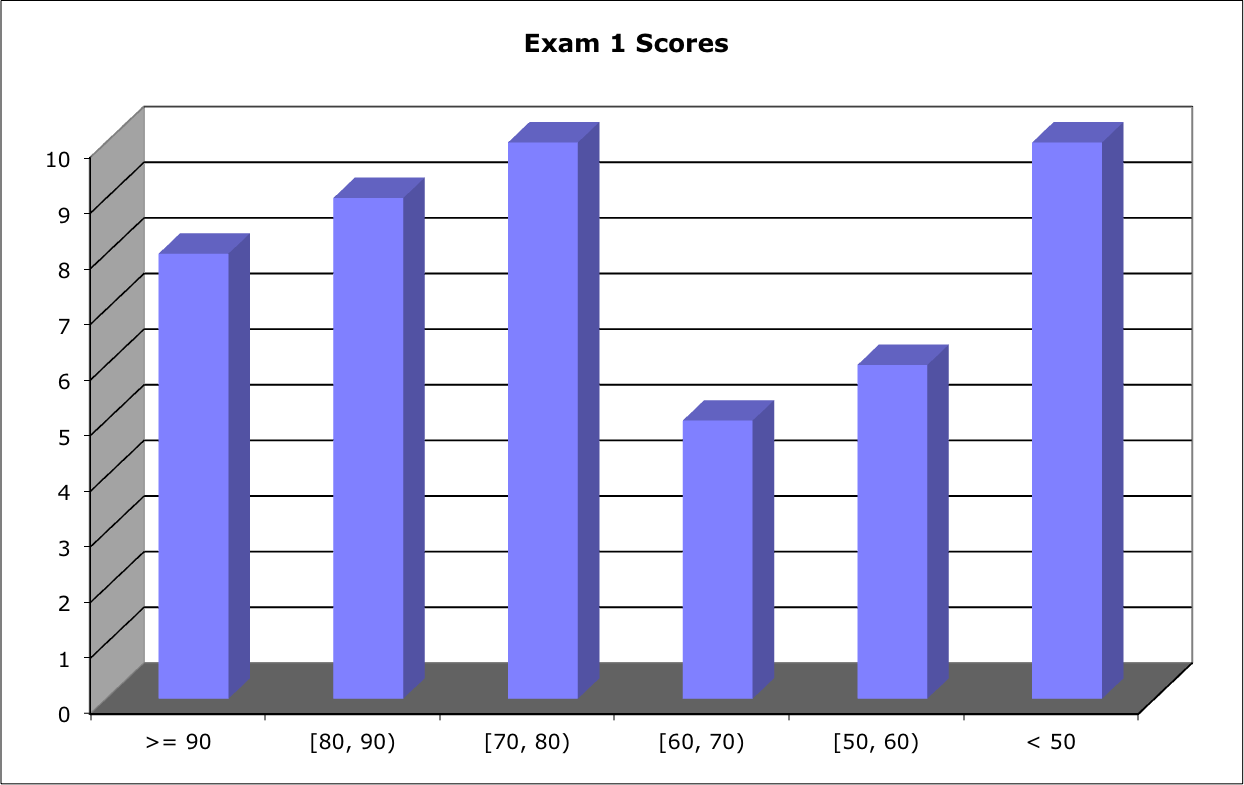

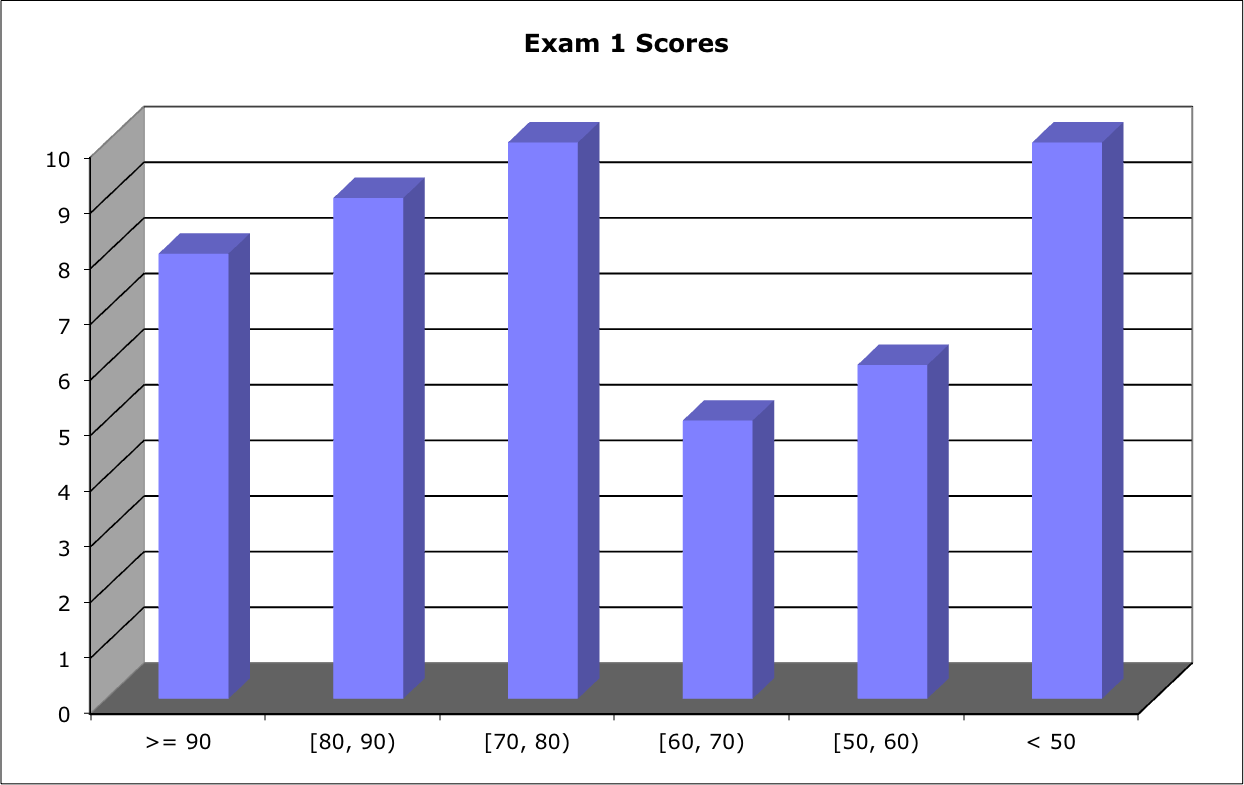

Score Distribution:

Median: 74; Mean: 69; Standard Deviation: 19

These shaded block are intended to suggest solutions; they are not intended to be complete solutions.

Problem 1 Definitions

Problem 2 Tricky C

Problem 3 Interprocess Communication - Shared Memory

Problem 4 Threads

Problem 5 Priority Scheduling with Preemption

Problem 1 Definitions

Give careful definitions for each term (in the context of computer operating systems):

- Operating system

- Operating system calls

- Blocking send vs. Non-blocking send

- CPU burst vs. I/O burst

- Privileged instructions

- Process vs. Thread

- Response time

- Shared memory systems

- Thread pool

- Time quantum

Warning: A definition that starts "Z is when ...," or "Z is where ..." earns -5 points.

- Operating system:

[p. 3] A program that manages the computer hardware, provides a basis for application programs, and acts as an intermediary between the computer user and the computer hardware.

- Operating system calls:

[Section 2.3, p. 55] An interface to operating system services made available to applications software by an operating system.

- Blocking send and Non-blocking send:

[Section 3.4.2.2.] Blocking - Sending process waits for the receiving process to receive. Non-blocking - Does not have to wait. Spell "receive" correctly.

- CPU burst:

[Sect. 5.1.1, P. 184] A process using primarily CPU resources and little I/O.

I/O burst: A process using primarily I/O resources and little CPU.

It is not uncommon for a process to be primarily a CPU-bound process for a while, to become an I/O-bound process for a while, and to go back and forth.

- Privileged instructions:

[P. 22] Some hardware instructions that may cause harm. May be executed only in kernel mode. Privileged instructions are not necessarily higher priority; they manipulate critical operating systems data structures. Examples: Instruction to switch to kernel mode, I/O control, timer management, interrupt management

- Process vs. Thread:

[P. 101-102, 153] A process is a program in execution. A process may include several threads. A thread is a basic unit of CPU use. It includes a thread ID, a program counter, a register set, and a memory stack.

- Response time:

[P. 157] Time to give first response to a user.

- Shared memory systems:

[Sect. 3.4.1, p. 117] A region of memory residing in the address space of two or more cooperating processes. "Shared memory is memory that is shared" is circular.

Alternative: Separate CPUs that address a common pool of memory.

- Thread pool:

[Sect. 4.4.4, P. 168] Create a number of threads at process startup and place them in a pool, where they sit and wait for work.

- Time quantum:

[Sect. 5.3.4, P. 194] In preemptive scheduling, the time quantum is the amount of time given to each process when it is placed into the Running state.

Vocabulary list. I ask mostly about terms that appear as section, subsection, or subsubsection headings in the text, but any phrase printed in blue in the text is fair game. Here are some of the terms I might ask you to define:

- Batch system:

Typically large, no user present. E.g., overnight accounting runs, daily update of Checkmarq records. Batch mode is an old business data processing mode, still VERY widely used in mainframe business data processing.

- Blocking send vs. Non-blocking send:

[Section 3.4.2.2.] Spell "receive" correctly.

- Client-Server computing and Peer-to-peer computing:

[Section 1.12.2.] Client-server is a master-slave relationship. Client requests, server answers. Which is the master?

- Clustered systems:

[P. 16] Two or more individual (computer) systems gathered together to accomplish computational work.

- Interactive system:

Typically, a user is present.

- CPU burst:

[Sect. 5.1.1, P. 184] A process using primarily CPU resources and little I/O.

- IPC:

[Sect. 3.4 & 3.5] Interprocess Communication: shared memory and message passing. It is important to know at least some of the TLAs (Three Letter Abbreviations).

- I/O burst:

[Sect. 5.1.1, P. 184] A process using only slight CPU resources followed by extensive I/O.

- Kernel:

[P. 6] Part of the OS that is running all the time, core OS processes.

- Kernel thread:

[P. 129] Created by OS processes.

- Kernighan's Law:

[P. 85] "Debugging is twice a hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it."

- Message passing systems:

[Sect. 3.4.2, p. 119] A mechanism to allow processes to communicate and to synchronize their actions without sharing the same address space. A message-passing facility provides at least two operations: send(message) and receive(message). Be careful to avoid circular definitions: "Message passing systems pass messages" counts zero points.

- Operating system:

[P. 3] A program that manages the computer hardware, provides a basis for application programs, and acts as an intermediary between the computer user and the computer hardware.

- Operating system calls:

[Section 2.3, p. 55] An interface to operating system services made available to applications software by an operating system.

- Pipes:

[Sect. 3.6.3, p. 134] A pipe acts as a conduit allowing two processes to communicate. A pipe is usually treated as a special type of file, accessed by ordinary read() and write() system calls.

- Privileged instructions:

[P. 22] Some hardware instructions that may cause harm. May be executed only in kernel mode. Examples: Instruction to switch to kernel mode, I/O control, timer management, interrupt management

- Process:

[P. 81] Program in execution, a unit of work in a modern time-sharing system.

- Process Control Block (PCB):

[P. 103] Representation of a process in the operating system.

- Process State:

[Sect. 3.1.2, p. 103] Defined by the current activity of the process. Each process may be in one of: New, Running, Waiting, Ready, Terminated.

- Process vs. Thread:

[P. 101-102, 153] A process is a program in execution. A process may include several threads. A thread is a basic unit of CPU use. It includes a thread ID, a program counter, a register set, and a memory stack.

- Protection vs. Security:

[Section 1.9.] Protection - Mechanisms for controlling access to the resources provided by a computer system (Sect 1.9, p. 26; p. 51; Sect. 2.4.6, p. 66; and others). Protection controls access to computer system resources. Security defends a system from internal and external attacks. Protection <> Security.

- Real-time system:

[P. 29] Typically time-sensitive interaction with external devices.

- Response time:

[P. 157] Time to give first response to a user.

- RPC (in context of inter-process communication):

[Sect. 3.6.2, p. 131] Remote Procedure Calls abstract the procedure-call mechanism for use between systems with network connections using message-based communication.

- Shared memory systems:

[Sect. 3.4.1, p. 117] A region of memory residing in the address space of two or more cooperating processes. "Shared memory is memory that is shared" is circular.

Alternative: Separate CPUs that address a common pool of memory

- Shared memory vs. Message passing:

[Section 3.4.] Be careful to avoid circular definitions: "Shared memory is memory that is shared" counts zero points.

- Sockets:

[Sect. 3.6.1, p. 128] An endpoint for communication. A pair of processes communicating over a network employs a pair of sockets. A socket is identified by an IP address and a port number.

- Symmetric multiprocessing (SMP):

[P. 14] Multiprocessing system in which each processor performs all tasks within the operating system. Does your "definition" hold also for asymmetric?

- Throughput:

[P. 157] Work per unit time.

- Thread cancelation:

[Sect. 4.4.2, P. 166] Task of terminating a thread before it has completed.

- Thread pool:

[Sect. 4.4.4, P. 168] Create a number of threads at process startup and place them in a pool, where they sit and wait for work.

- Time quantum:

[Sect. 5.3.4, P. 194] In preemptive scheduling, the time quantum is the amount of time given to each process when it is placed into the Running state.

- Time sharing:

[P. 19] The CPU executes multiple jobs by switching among them, but the switches happen so frequently that the users can interact with each program while it is running.

- User mode vs. Kernel mode:

[Section 1.5.1, p. 21] User mode is a state of the operating system in which most instructions of user applications are executed. Kernel mode is a state of the operating system in which some critical portions of the operating system is executed. What is it called when a user process makes an operating system call for service? Most operating system code is executed in user mode.

- User thread:

[P. 129] Created by user processes.

- Virtual machine:

[P. 76+] Abstract the hardware of a single computer into several different execution environments, creating the illusion that each computing environment is running its own private computer.

Problem 2 Tricky C

Here is a Dynamic Linked List program:

1 #include<stdio.h>

2 #include<stdlib.h>

3

4 typedef struct ele {

5 void *next;

6 int num;

7 void *prev;

8 } node;

9

10 node * addToDLL(int num, node *prevptr) {

11 node *nodeptr;

12 nodeptr = (node*)malloc(sizeof(node)); // Allocate new node

13

14 nodeptr->prev = prevptr; // Initialize new node

15 nodeptr->num = num;

16 prevptr->next = nodeptr;

17

18 return nodeptr; // Pointer to new node

19 }

20

21 void printDLLFwd(node *head) {

22 printf("\nPrinting linked list:\n");

23 while(head->next != NULL) {

24 head = head->next;

25 printf(">> %d\n", head->num);

26 }

27 printf(">> %d\n\n", head->num); // tail num field

28 }

29

30 main() {

31 int i;

32 node head;

33 node *nodeptr;

34

35 head.next = NULL; // Initialize the head node

36 head.num = 0;

37 head.prev = NULL;

38 nodeptr = &head; // Initialize to point to the head node

39

40 for (i = 1; i < 4; i++) { // Start at 1 because head contains 0

41 nodeptr = addToDLL(i, nodeptr);

42 }

43

44 printDLLFwd(&head);

45 exit(0);

46 }

- What does this program print? Are you sure?

- Explain why.

- What changes if line 14 and line 16 are interchanged? Explain.

Warning: You may assume this program compiles and runs. Otherwise, be alert.

- See code directory

When I run it, I get

Printing linked list:

>> 1

>> 2

>> 3

>> 3

You could get an infinite loop, printing all kinds of garbage imaginable!

- It starts at 1 and prints "3" twice because line 24 and line 25 are interchanged from a correct program. Line 24 advances to the next node before printing the contents of the current node.

At line 12, the value returned from malloc() is not checked. Hence, memory allocation could fail, and we'd never know. [or -2]

A serious difference, and one over which you will trip, is that C does not guarantee that variables are initialized. This example includes a line nodeptr->next = NULL; between line 15 and line 16. Without that, nodeptr->next is un-initialized. For most nodes, that does not matter in this example, because that storage location is soon set to point to the next node in the next call to addToDLL(). However, in the last node (with value 3, in this example), nodeptr->next is un-initialized, and that is the location line 23 is counting on to have the value NULL.

Java and C++ are required to initialize all otherwise un-initialized memory to zero, and many C compilers are "nice" and do the same. It happens that NULL has the value 0, so this code usually works. However, it does not have to work, and many cross-compilers for embedded systems do not zero all memory.

If the un-initialized nodeptr->next field in the last node happens to have non-zero (likely if not set to 0), line 24 will take you who knows where, and you'll continue to interpret whatever garbage happens to be there as a node value and a link to the next node.

Score: -10 if you failed to recognize that nodeptr->next was not initialized. I said this was a tricky C program. I didn't lie.

- No change. Lines 14 - 16 can be written in any order (which means they could be executed in parallel).

No matter how many points you lost, I awarded 8 of 25 points if you said anything intelligent.

It is quite likely there will be a tricky C program on Exam 2.

Two years ago, I had a student report after a job interview. "What is your level of C coding skill." She said she responded, "Well, I attempted each of Drs. Brylow & Corliss Tricky C exam questions!" She got the job :-)

A good programmer walks with a bit of a swagger.

Problem 3 Interprocess Communication - Shared Memory

The producer-consumer problem is a common example of cooperating processes. A producer process produces information (e.g., a sensor reading) that is consumed by a consumer process (e.g., detect sensor reading out of range). Often, the producer process and the consumer process communication using a bounded buffer implemented in shared memory.

| Producer Process | | Consumer Process |

|---|

Memory region shared by both processes:#define BUFFER_SIZE 10

typedef struct {

. . .

} item;

item buffer[BUFFER_SIZE];

int in = 0;

int out = 0;

|

|

1:

2:

3:

4:

5:

6:

7:

8:

9: | |

item nextProduced;

while (1) {

/* produce an item in nextProduced */

while (((in + 1) % BUFFERSIZE) == out)

; /* do nothing */

buffer[in] = nextProduced;

in = (in + 1) % BUFFER_SIZE;

}

| | 1:

2:

3:

4:

5:

6:

7:

8:

9: | |

item nextConsumed;

while (1) {

while (in == out)

; /* do nothing */

nextConsumed = buffer[out];

out = (out + 1) % BUFFER_SIZE;

/* consume the item in nextConsumed */

} |

You may assume

- these two processes are sharing a single processor/core,

- there may be many other processes contending for the same processor/core,

- you know nothing about the process scheduling algorithm being used, and

- the machinery for sharing the memory locations for buffer, in, and out has been accomplished correctly by appropriate operating systems calls.

Questions:

- Assume that it happens that the Consumer Process happens to be the first to run. Assume that the Consumer Process is allowed to run for about 1000 machine cycles (the exact number is not relevant, but I want to quantify "It gets to run for a long time."). Explain what happens. Your explanation should address

any changes to any of the shared variables, and

the flow of control (which statements in the Consumer Process execute).

- Assume that the Consumer Process eventually is swapped out, and the Producer Process gets its chance to run. Assume that the Producer Process is allowed to run for about 1000 machine cycles (the exact number is not relevant, but I want to quantify "It gets to run for a long time, plenty of time to full the buffer"). Explain what happens. Your explanation should address

any changes to any of the shared variables, and

the flow of control (which statements in the Producer Process execute)

- Your answers to both parts a) and b) should have described a significant waste of computer resources we call "busy waiting." For this part of the problem, assume we have re-started both processes, so they are just ready to start, with the shared memory variable having their values as initialized in the code. Describe one very fortunate sequence of executions which does not suffer from busy waiting. Your answer might take the form:

____ process runs until ___

____ process runs until ___

____ process runs until ___

etc.

Your answer should demonstrate clearly that you understand how it would be possible to produce and consume thousands of items with no busy waiting. You are not expected to explain how one could be so fortunate.

Warning: This code is vulnerable to several race conditions, but this question has nothing to do with that shortcoming. Correct answers to this question should not mention "race condition." The intent of this question is to emphasize the process scheduling and the use of shared memory in an application.

See p. 118 in our text.

- [7 points] If the Consumer process happens to run first, it will get to its line 4 and find in == out == 0, so the condition in line 4 is TRUE, and the Consumer Process will be in an indefinite loop at lines 4 & 5. "Nothing happens" is not a correct answer [-3 points]; while (in == out) is executed repeatedly. I agree that nothing useful happens. There is a difference.

- [7 points] If the Consumer Process is interrupted and the Producer process gets its chance to run (you are not responsible for describing how that might happen), the Producer Process fills locations 0, ..., 8 in the buffer. When line 7 fills location 8, line 8 sets in to 9, the condition in line 5 becomes TRUE, and the Producer Process is stuck busy waiting at lines 5 & 6. One clock cycle is not the same as one loop iteration [-3 points].

- [11 points] Any sequence of precesses activations that keep the buffer non-empty and non-full will work. The easiest is

Produce one item

Consume one item

Produce one item

Consume one item

etc.

You are not responsible for explaining how that might occur.

Problem 4 Threads

Discuss the notion of a thread.

Your answer is at most one page, one side. Answer as you might in a job interview.

Hint: This is not a BS "gimme" question. It tests whether you know what is important to understand, as well as whether you understand it.

Chapter 4. If you wish to convince a job interviewer you understand threads,

you might address:

- Purpose: Facilitate apparent simultaneous execution of tasks.

- Role: Threads provide one means for a computer operating system to manage

the resource of CPU time. One process may consist of multiple threads.

- Threads are similar to processes. Tell how. E.g., scheduling.

- Threads are different from processes. Tell how. E.g., memory. Processes

are copies; threads share. If you say a thread is smaller or lighter weight,

you should tell what is missing.

- Give an example in which treads might be used. Tell why.

-5 if you do not explain how a thread is different from a process.

-5 if you do not tell what threads are good for or give an example application.

-5 if you do not say something about scheduling threads.

I expect enough detail to demonstrate more than superficial understanding. I allowed a page. You should use most of it.

I wonder whether the scientists or the engineers are more likely to ask, "What is this good for?"

Problem 5 Priority Scheduling with Preemption

Desk-check a priority scheduling algorithm with preemption using the following scenario.

- Suppose we have five processes:

P0 - priority 0; P10 and P11 - priority 1; P20 and P21 - priority 2.

- Assume higher priority processes should run before lower priority processes.

- Assume processes with the same priority should run FCFS.

- Assume that when a process is placed into its Running state, it is given a time quantum of 5 time units.

- A process may run for its allotted 5 time units, or it may block itself for I/O before its time expires.

- A low priority process is not preempted by a newly-ready high priority process until the quantum of the Running process expires.

- The scheduler does not apply "aging".

- At time t = 0, the scheduler resched() is called, and the Ready list looks like:

| Tail --> |

P0 (1000, 0) |

P10 (8, 1) |

P11 (3, 1) |

P20 (1, 2) |

P21 (10, 2) |

<-- Head |

Each entry means: | Process ID (desired CPU burst time units, Priority) |

- The desired CPU burst / I/O burst patterns of each process are:

| P0 (0): | CPU 1000 units |

| P10 (1): | CPU 8 u | I/O 63 | CPU 8 | I/O 63 | Continue in same pattern |

| P11 (1): | CPU 3 u | I/O 12 |

CPU 3 u | I/O 12 |

CPU 3 u | I/O 12 |

HALT |

| P20 (2): | CPU 1 u | I/O 17 |

CPU 1 u | I/O 17 |

CPU 1 u | I/O 17 |

CPU 1 u | I/O 17 |

HALT |

| P21 (2): | CPU 10 u | I/O 2 |

CPU 10 u | I/O 2 |

CPU 10 u | I/O 2 |

CPU 20 u | I/O 2 |

HALT |

The time units are in terms of time each process is actually running, but in terms of any measure of elapsed time. For example, process P0 will run whenever its low priority allows it to do so until it has accumulated 1000 time units of CPU time, never waiting for I/O. Process P10 alternates bursts of 8 time units CPU use (which will be interrupted by the 5 time unit quantum) and 63 time unit I/O bursts.

Complete the following table through t = 30:

Elapsed

time | Event | Running

Process | Time

needed |

Ready list | Waiting for I/O completion |

| t = 0 | Start | P21 | 10 u |

P0 (1000, 0) | P10 (8, 1) | P11 (3, 1) | P20 (1, 2) | |

| | |

| t = 5 | Quantum | P20 | 1 u |

P0 (1000, 0) | P10 (8, 1) | P11 (3, 1) | P21 (5, 2) | |

| | |

| t = 6 | P20 wait | P21 | 5 u |

P0 (1000, 0) | P10 (8, 1) | P11 (3, 1) | | |

P20 (17, 2) | | |

| t = 11 | P21 wait | P11 | 3 u |

P0 (1000, 0) | P10 (8, 1) | | | |

P20 (12, 2) | P21 (2, 2) | |

| t = 13 | P21 I/O | P11 | 1 u |

P0 (1000, 0) | P10 (8, 1) | P21 (10, 2) | | |

P20 (10, 2) | | |

| t = | | | |

| | | | |

| | |

| t = | | | |

| | | | |

| | |

Here is what I get:

Elapsed

time | Event | Running

Process | Time

needed |

Ready list | Waiting for I/O completion |

Notes |

| t = 0 | Start | P21 | 10 u |

P0 (1000, 0) | P10 (8, 1) | P11 (3, 1) | P20 (1, 2) |

| | |

|

| t = 5 | Quantum | P20 | 1 u |

P0 (1000, 0) | P10 (8, 1) | P11 (3, 1) | P21 (5, 2) |

| | |

|

| t = 6 | P20 wait | P21 | 5 u |

P0 (1000, 0) | P10 (8, 1) | P11 (3, 1) | |

P20 (17, 2) | | |

|

| t = 11 | P21 wait | P11 | 3 u |

P0 (1000, 0) | P10 (8, 1) | | |

P20 (12, 2) | P21 (2, 2) | |

|

| t = 13 | P21 I/O | P11 | 1 u |

P0 (1000, 0) | P10 (8, 1) | P21 (10, 2) | |

P20 (10, 2) | | |

|

| t = 14 | P11 wait | P21 | 10 u |

P0 (1000, 0) | P10 (8, 1) | | |

P20 (9, 2) | P11 (12, 1) | |

|

| t = 19 | Quantum | P21 | 5 u |

P0 (1000, 0) | P10 (8, 1) | | |

P20 (4, 2) | P11 (7, 1) | |

P21 is returned to RUN |

| t = 23 | P20 I/O | P21 | 1 u |

P0 (1000, 0) | P10 (8, 1) | P20 (1, 2) | |

| P11 (3, 1) | |

|

| t = 24 | P21 wait | P20 | 1 u |

P0 (1000, 0) | P10 (8, 1) | | |

| P11 (2, 1) | P21 (2, 2) |

|

| t = 25 | P20 wait | P10 | 8 u |

P0 (1000, 0) | | | |

P20 (17, 2) | P11 (1, 1) | P21 (1, 2) |

|

| t = 26 | P11 I/O

P21 I/O | P10 | 7 u |

P0 (1000, 0) | P11 (3, 1) | P21 (10, 2) | |

P20 (16, 2) | | |

P11 & P21 both finish I/O |

| t = 30 |

Quantum |

P21 | 10 u |

P0 (1000, 0) | P10 (3, 1) | P11 (3, 1) | |

P20 (12, 2) | | |

|

| t = 35 |

Quantum |

P21 | 5 u |

P0 (1000, 0) | P10 (3, 1) | P11 (3, 1) | |

P20 (7, 2) | | |

P21 is returned to RUN |

| t = 40 | P21 wait | P11 | 3 u |

P0 (1000, 0) | P10 (3, 1) | | |

P20 (2, 2) | | P21 (2, 2) |

|

| t = 42 | P20 I/O

P21 I/O | P11 | 1 u |

P0 (1000, 0) | P10 (3, 1) | P21 (10, 2) | P20 (1, 2) |

| | |

Order of P20 & 21 is arb. |

| t = 43 | P11 wait | P20 | 1 u |

P0 (1000, 0) | P10 (3, 1) | P21 (10, 2) | |

P11 (12, 1) | | |

|

| t = | | | |

| | | |

| | |

|

If you once get the ready list out of step with mine, your solution rapidly diverges.

Yes, careful desk-checking is tedious, but often it is the only way you have to determine what your program really should be doing.

Do you notice a resemblance to your next homework project?

If you fail to return P21 to Running at t = 19, you should get

Elapsed

time | Event | Running

Process | Time

needed |

Ready list | Waiting for I/O completion |

Notes |

| t = 0 | Start | P21 | 10 u |

P0 (1000, 0) | P10 (8, 1) | P11 (3, 1) | P20 (1, 2) |

| | |

|

| . . . |

|

| t = 14 | P11 wait | P21 | 10 u |

P0 (1000, 0) | P10 (8, 1) | | |

P20 (9, 2) | P11 (12, 1) | |

|

| t = 19 | Quantum | P10 | 8 u |

P0 (1000, 0) | P21 (5, 2) | | |

P20 (4, 2) | P11 (7, 1) | |

|

| t = 23 | P20 I/O | P10 | 4 u |

P0 (1000, 0) | P20 (1, 2) | P21 (5, 2) | |

| P11 (3, 1) | |

|

| t = 24 | Quantum | P21 | 5 u |

P0 (1000, 0) | P10 (3, 1) | P20 (1, 2) | |

| P11 (2, 1) | |

|

| t = 26 | P11 I/O | P21 | 3 u |

P0 (1000, 0) | P11 (3, 1) | P10 (3, 1) | P20 (1, 2) |

| | |

|

| t = 29 | P21 wait | P20 | 1 u |

P0 (1000, 0) | P11 (3, 1) | P10 (3, 1) | |

P21(2, 2) | | |

|

| t = 30 | P20 wait | P10 | 3 u |

P0 (1000, 0) | P11 (3, 1) | | |

P21(2, 2) | P20(17, 2) | |

|

| |