| |

Your first midterm examination will appear here. It covers Chapters 1 - 4 (subject to change).

It will be a 50 minute, closed book exam.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

-

See previous exams:

Section and page number references in old exams refer to the edition of the text in use when that exam was given. You may have to do a little looking to map to the edition currently in use. Also, which chapters were covered on each exam varies a little from year to year.

Questions will be similar to homework questions from the book. You will

be given a choice of questions.

I try to ask questions I think you might hear in a job interview. "I

see you took a class on Operating Systems. What can you tell me about ...?"

Preparation hints:

Read the assigned chapters in the text. Chapter summaries are especially helpful. Chapters 1 and 2 include excellent summaries of each of the later chapters.

Read and consider Practice Exercises and Exercises at the end of each chapter.

Review authors' slides and study guides from the textbook web site.

Review previous exams listed above.

See also Dr. Barnard's notes. He was using a different edition of our text, so chapter and section numbers do not match, but his chapter summaries are excellent.

Read and follow the directions:

- Write on four of the five questions. If you write more than four questions, only the first four will be graded. You do not get extra credit for doing extra problems.

- In the event this exam is interrupted (e.g., fire alarm or bomb threat), students will leave their papers on their desks and evacuate as instructed. The exam will not resume. Papers will be graded based on their current state.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- No electronic devices of any kind are permitted.

- Be sure I can read what you write.

- If I ask questions with parts

- . . .

- . . .

your answer should show parts

- . . .

- . . .

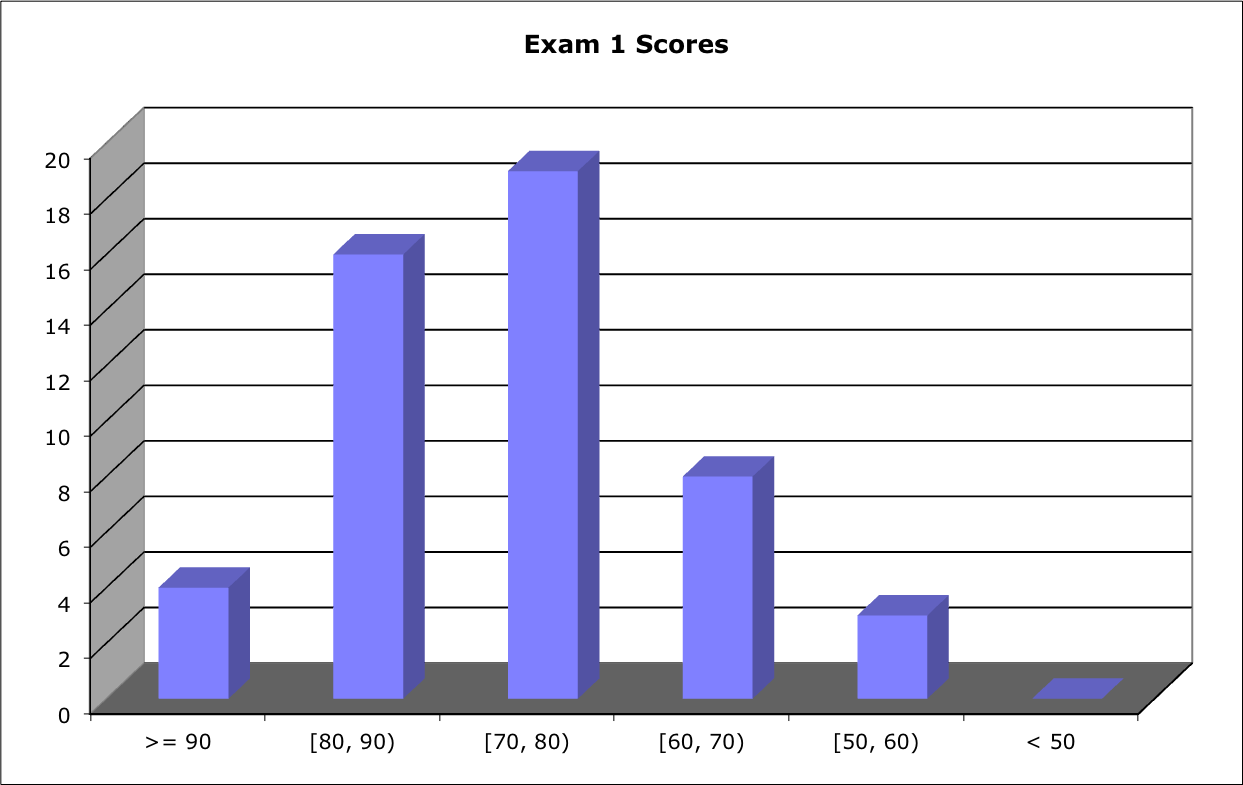

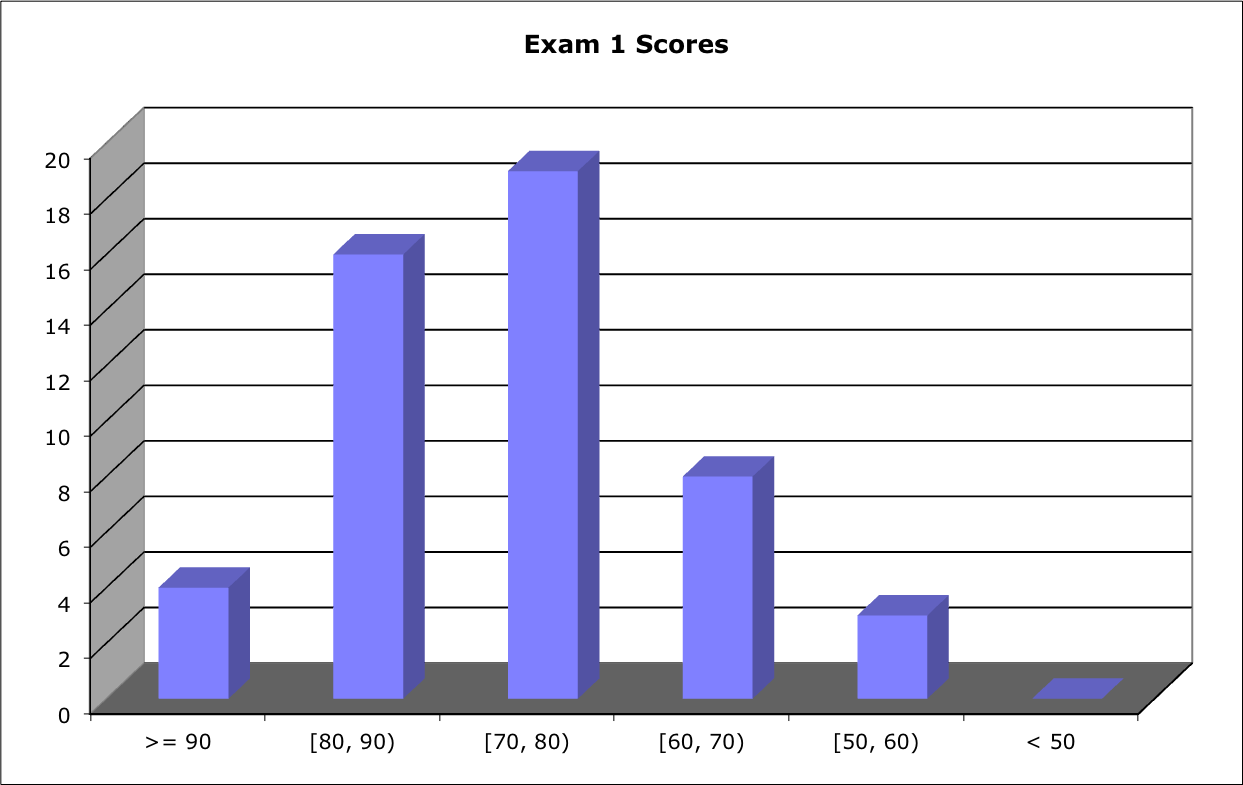

Score Distribution:

Median: 77; Mean: 76.3; Standard Deviation: 17.6

These shaded block are intended to suggest solutions; they are not intended to be complete solutions.

Problem 1 Definition

Problem 2 Tricky C

Problem 3 Process Attributes or Operations

Problem 4 Process Context Switch

Problem 5 User Threads vs. Kernel Threads

Problem 1 Definitions

Give careful definitions for each term (in the context of computer operating systems):

- Process

- Process scheduling

- Process state

- IPC

- Non-blocking receive

- Kernighan's Law

- POSIX

- Thread library

- Thread pool

- Thread-local storage

Warning: A definition that starts "Z is when ...," or "Z is where ..." earns -5 points.

- Process: [P. 105] Program in execution, a unit of work in a modern time-sharing system.

- Process scheduling: [Section 3.2] Switch between processes so frequently that users can interact with each process. Select a process from the Ready list for execution in the CPU.

- Process state: [Sect. 3.1.2] Defined by the current activity of the process. Each process may be in one of: New, Running, Waiting, Ready, Terminated.

- IPC: [Sect. 3.4 & 3.5] Interprocess Communication: shared memory and message passing. It is important to know at least some of the TLAs (Three Letter Abbreviations).

- Non-blocking receive: [Section 3.4.2.2] Receive a message. If no message is there, return from the receive() function all immediately. "recieve" incurs (-2).

- Kernighan's Law: [P. 87] "Debugging is twice a hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it." You should recognize the name Kernighan as one of the authors of our C textbook

- POSIX: Portable Operating System Interface. We looked at POSIX examples for shared memory, message passing, and Pthreads.

- Thread library: [Section 4.4] Provides a programmer with an API for creating and managing threads.

- Thread pool: [Sect. 4.5.1] Create a number of threads at process startup and place them in a pool, where they sit and wait for work.

- Thread-Local Storage: [Section 4.6.4] Data of which each thread must keep its own copy. Do not confuse with local variables.

Vocabulary list. I ask mostly about terms that appear as section, subsection, or subsubsection headings in the text, but any phrase printed in blue in the text is fair game. Here are some of the terms I might ask you to define. This list is not exhaustive:

- Batch system: Typically large, no user present. E.g., overnight accounting runs, daily update of Checkmarq records. Batch mode is an old business data processing mode, still VERY widely used in mainframe business data processing.

- Blocking send vs. Non-blocking send: [Section 3.4.2.2.] Spell "receive" correctly.

- Client-Server computing and Peer-to-peer computing: [Section 1.11.4 & 5.] Client-server is a master-slave relationship. Client requests, server answers. Which is the master?

- Clustered systems: [Section 1.3.3] Two or more individual (computer) systems gathered together to accomplish computational work.

- Interactive system: Typically, a user is present.

- IPC: [Sect. 3.4 & 3.5] Interprocess Communication: shared memory and message passing. It is important to know at least some of the TLAs (Three Letter Abbreviations).

- Kernel: [P. 6] Part of the OS that is running all the time, core OS processes.

- Kernel thread: [Section 4.3] Created by OS processes.

- Kernighan's Law: [P. 87] "Debugging is twice a hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it." You should recognize the name Kernighan as one of the authors of our C textbook

- Message passing systems: [Sect. 3.3.4.2] A mechanism to allow processes to communicate and to synchronize their actions without sharing the same address space. A message-passing facility provides at least two operations: send(message) and receive(message). Be careful to avoid circular definitions: "Message passing systems pass messages" counts zero points.

- Non-blocking receive: [Section 3.4.2.2] Receive a message. If no message is there, return from the receive() function all immediately.

- Operating system: [P. 3] A program that manages the computer hardware, provides a basis for application programs, and acts as an intermediary between the computer user and the computer hardware.

- Operating system calls: [Section 2.3] An interface to operating system services made available to applications software by an operating system.

- POSIX: Portable Operating System Interface. We looked at POSIX examples for shared memory, message passing, and Pthreads.

- Pipes: [Sect. 3.6.3] A pipe acts as a conduit allowing two processes to communicate. A pipe is usually treated as a special type of file, accessed by ordinary read() and write() system calls.

- Privileged instructions: [P. 22] Some hardware instructions that may cause harm. May be executed only in kernel mode. Examples: Instruction to switch to kernel mode, I/O control, timer management, interrupt management

- Process: [P. 105] Program in execution, a unit of work in a modern time-sharing system.

- Process Control Block (PCB): [Section 3.1.3] Representation of a process in the operating system.

- Process scheduling: [Section 3.2] Switch between processes so frequently that users can interact with each process. Select a process from the Ready list for execution in the CPU.

- Process State: [Sect. 3.1.2] Defined by the current activity of the process. Each process may be in one of: New, Running, Waiting, Ready, Terminated.

- Process vs. Thread: [P. 105-106, 163] A process is a program in execution. A process may include several threads. A thread is a basic unit of CPU use. It includes a thread ID, a program counter, a register set, and a memory stack.

- Protection vs. Security: [Section 1.9.] Protection - Mechanisms for controlling access to the resources provided by a computer system. Protection controls access to computer system resources. Security defends a system from internal and external attacks. Protection <> Security.

- Real-time system: [Section 1.11.8] Typically time-sensitive interaction with external devices.

- RPC (in context of inter-process communication): [Sect. 3.6.2] Remote Procedure Calls abstract the procedure-call mechanism for use between systems with network connections using message-based communication.

- Shared memory systems: [Sect. 3.4.1] A region of memory residing in the address space of two or more cooperating processes. "Shared memory is memory that is shared" is circular.

Alternative: Separate CPUs that address a common pool of memory

- Shared memory vs. Message passing: [Section 3.4.] Be careful to avoid circular definitions: "Shared memory is memory that is shared" counts zero points.

- Sockets: [Sect. 3.6.1] An endpoint for communication. A pair of processes communicating over a network employs a pair of sockets. A socket is identified by an IP address and a port number.

- Symmetric multiprocessing (SMP): [P. 15] Multiprocessing system in which each processor performs all tasks within the operating system. Does your "definition" hold also for asymmetric?

- Thread cancelation: [Sect. 4.6.3] Task of terminating a thread before it has completed.

- Thread library: [Section 4.4] Provides a programmer with an API for creating and managing threads.

- Thread-Local Storage: [Section 4.6.4] Data of which each thread must keep its own copy. Do not confuse with local variables.

- Thread pool: [Sect. 4.5.1] Create a number of threads at process startup and place them in a pool, where they sit and wait for work.

- Time sharing: [P. 20] The CPU executes multiple jobs by switching among them, but the switches happen so frequently that the users can interact with each program while it is running.

- User mode vs. Kernel mode: [Section 1.5.1] User mode is a state of the operating system in which most instructions of user applications are executed. Kernel mode is a state of the operating system in which some critical portions of the operating system is executed. What is it called when a user process makes an operating system call for service? Most operating system code is executed in user mode.

- User thread: [Section 4.3] Created by user processes.

- Virtual machine: [Section 1.11.6] Abstract the hardware of a single computer into several different execution environments, creating the illusion that each computing environment is running its own private computer.

Problem 2 Tricky C

The following C code is adapted from Figures 3.33 and 3.35 in our text. The program compiles with no errors or warnings, and it runs to completion.

#include <sys/types.h>

#include <stdio.h>

#include <unistd.h>

#include <sys/wait.h>

#define SIZE 5

int nums[SIZE] = {0, 1, 2, 3, 4};

int main() {

int i;

pid_t pid = fork();

if (0 == pid) {

printf("In CHILD, pid = %d\n", pid);

for (i = 0; i < SIZE; i++) {

nums[i] *= -i;

printf(" CHILD : %d \n", nums[i]); /* LINE X */

}

execlp("/bin/ls", "ls", NULL);

printf("LINE Y"); /* LINE Y */

} else if (0 < pid) {

printf("In PARENT, pid = %d\n", pid);

wait(NULL);

for (i = 0; i < SIZE; i++) {

printf(" PARENT: %d \n", nums[i]); /* LINE Z */

}

}

return 0;

}

Questions:

- What is printed at LINE X? Explain your answer.

- What is printed at LINE Y? Explain your answer.

- What is printed at LINE Z? Explain your answer.

a. (8 pts.) When I compile this program and run it, I get:

cc fork-question-3-17.c -o fork-question-3-17

fork-question-3-17

In PARENT, pid = 2375

In CHILD, pid = 0

CHILD : 0

CHILD : -1

CHILD : -4

CHILD : -9

CHILD : -16

DateClient.java fork-question-3-17 shm-posix-producer.c

DateServer.java fork-question-3-17.c simple-shell.c

HelloWorld.java forkbomb.c unix_pipe.c

Makefile forkbomb2.c win32-pipe-child.c

fig3-09_myshell.c newproc-posix.c win32-pipe-parent.c

fork-question-1.c newproc-win32.c

fork-question-2.c shm-posix-consumer.c

PARENT: 0

PARENT: 1

PARENT: 2

PARENT: 3

PARENT: 4

We said it was tricky.

b. (8 pts.) execlp() never returns

c. (8 pts.) Although nums[] is a global variable, it is not shared between processes; the child process receives its own copy. If you did not consider explaining why child's num[] and parent's num[] are separate, (-2 pts.).

d. [NOT on the exam] If instead, fork() were replaced with the machinery to create a thread, LINE Z would produce the same output as LINE X.

A few years ago, a veteran of this class reported a job interview for an entry level programmer position. The interviewer asked about her level of confidence in her programming skills. She thought a moment and replied, "I attempted the Tricky C problem of each of Brylow/Corliss's exams." She got the job.

Problem 3 Process Attributes or Operations

Choose either a) or b)

-

List attributes of a process. For each, give

- a name suggestive of its purpose,

- a likely data type, and

- a description.

- When appropriate, tell what values the attribute might assume.

For example

| Name |

Type |

Description |

Values |

| ID |

integer |

Process identifier |

0 : MAX_INT |

| | | | |

OR

-

List operations on a process. For each, give

- an appropriate name,

- a description of what it does, and

- circumstances under which it might be called.

- When appropriate, tell what parameters might be passed.

Hint: Pseudo-English is fine. I am not looking for near-C code. You do not need to list every possible attribute/operation, but to receive full credit, you should list at least 10 attributes or 6 operations, including the ones without which the operating system will not work.

- See Section 3.1.3, p. 107, or class notes for Chapter 3 PCB

Need to store Registers when switched out? Integer and floating point. Could be stored in stack space?

Need to know process state? Could be deduced from which queue it is in?

- See Section 3.3.

Your O-O training should help you distinguish between operations on a process (methods provided by class Process) from methods called from within a process on other classes. For example, send() and receive() might be operations in a class Message, but probably not methods in class Process. However, send_message_to() or receive_message_from() might belong to class Process.

There is no constructor in the usual sense. fork() is a class method; it is a little like a clone() method, except that it does not return the clone to the parent. fork() is somewhat like a constructor. Perhaps a good design would have a private constructor called by fork(), except that most OO languages require a public constructor.

Getter methods for most attributes. If you resorted to more than two getter methods, I penalized you.

In principle, setter methods for most attributes. In practice, most attributes are set indirectly, so there should be few explicit setter methods.

kill(), certainly.

wait() is not really a method in class Process. Moving the process to a blocked queue is a side effect of many OS calls the process might make. However, it could be that the OS code effects the move by calling a wait() method, which might belong either to class Process or to class BlockedQueue.

exit() is called by a process. If exit() is a method of class Process, it logically would be called as this.exit(), which certainly can be done, but seems a little odd.

One could design an operating system in which all operating systems calls belong to class Process, but I doubt anyone would consider that a "good" design.

Does this part suggest that class Process is not an especially good fit for "good" object-oriented design?

In the context of scheduling and dispatching of processes on a single-core processor,

- (5 points) What is the purpose of a context switch?

- (20 points) Describe the steps, in order, taken by a kernel to context-switch between processes.

See Section 3.2.3. This question reflects what you did in Homework 4.

Context switch changes which process holds the CPU. It does not interrupt

a running process, nor does it do the work of the scheduler to determine

which is the next process to run.

At a high level, the

assignment saves state in the PCB. To

paraphrase the current comments in the TODO section of the code:

* save callee-save ("non-volatile") registers

* save outgoing stack pointer

* load incoming stack pointer

* restore callee-save ("non-volatile") registers

* restore argument registers (for first time process is run)

* jump or return to destination address

How is control handed over to the incoming process? (or -5)

Think for a moment. Can you write code to save or to restore the Program

Counter? No. Why not? In our design, the last instruction executed by the

context switch is RETURN, as if from a function call.

Could the use of "kernel" in this question offer a hint for parts of Problem 1?

Problem 5 User Threads vs. Kernel Threads

Assume

- We are running on a four core computer system;

- Program M is running as a process, with 20 threads;

- The operating system is using a many-to-many threading model;

- No other processes affect the operation of Program M.

Describe the performance implications of each of the following scenarios:

- The number of kernel threads allocated to Program M is fewer than the number of processing cores (4).

- The number of kernel threads allocated to Program M is equal to the number of processing cores.

- The number of kernel threads allocated to Program M is greater than the number of processing cores, but fewer than the number of user threads of Program M (20).

- The number of kernel threads allocated to Program M is greater than the number of user threads of Program M (20).

- How do your answers change if Assumption 4 is replaced by Assumption 4':

There are 10 other user processes running 30 operating systems processes running and competing equally for CPU time.

This question is adapted from Problem 4.18, p. 193.

- The number of user threads running literally simultaniously is the same as the number of kernel threads allocated to Program M.

- The number of user threads running literally simultaniously is four, one in each kernel thread, and each kernel thread is running on its own core. Performance is better than in case a. Performance may be compromised by the overhead of switching user threads within the same kernel thread. Performance may be compromised by cores needing data which resides in a different core.

- The number of user threads running literally simultaniously is four, with one user thread running on each core. Performance is better than in case a. Performance may be a little slower than case b because of the additional overhead of swapping threads. Or, performance may be a little faster than b because thread switching is at the kernel thread level, which is likely to be faster than switching user threads within the same kernel thread.

- Nonsense.

- Answers are essentially similar, except that Program M may not get spread across all four cores. In some cases, competition for individual cores may save time for Program M by reducing data transfer. If only the OS could read the programmer's mind (error message: "None found.")

Some students appeared to think user thread compete with kernel threads. As out text explains them, kernel threads are the means by which user threads are implemented. User thread run within kernel threads.

| |