| |

Your second midterm examination will appear here. It covers Chapters 5 - 12.

It will be a 50 minute, closed book exam.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

-

See previous exams:

Section and page number references in old exams refer to the edition of the text in use when that exam was given. You may have to do a little looking to map to the edition currently in use. Also, which chapters were covered on each exam varies a little from year to year.

Questions may be similar to homework questions from the book. You will

be given a choice of questions.

I try to ask questions I think you might hear in a job interview. "I

see you took a class on Operating Systems. What can you tell me about ...?"

Preparation hints:

Read the assigned chapters in the text. Chapter summaries are especially helpful. Chapters 1 and 2 include excellent summaries of each of the later chapters.

Read and consider Practice Exercises and Exercises at the end of each chapter.

Review authors' slides and study guides from the textbook web site

Review previous exams listed above.

See also Dr. Barnard's notes. He was using a different edition of our text, so chapter and section numbers do not match, but his chapter summaries are excellent.

Results on exam 1 suggest you may wish to maintain a vocabulary list.

Questions on projects.

Read and follow the directions:

- Write on four of the five questions. If you write more than four questions, only the first four will be graded. You do not get extra credit for doing extra problems.

- In the event this exam is interrupted (e.g., fire alarm or bomb threat), students will leave their papers on their desks and evacuate as instructed. The exam will not resume. Papers will be graded based on their current state.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- No electronic devices of any kind are permitted.

- Be sure I can read what you write.

- If I ask questions with parts

- . . .

- . . .

your answer should show parts

- . . .

- . . .

- The instructors reserve the right to assign bonus points beyond the stated value of the problem for exceptionally insightful answers. Conversely, we reserve the right to assign negative scores to answers that show less understanding than a blank sheet of paper. Both of these are rare events.

- Someone should have explained to you several years ago that "X is when ..." or "Y is where ..." are not suitable definitions (possibly excepting the cases in which X is a time or Y is a location). On Exam 2, "X is when ..." or "Y is where ..." earn -5 points.

The university suggests exam rules:

- Silence all electronics (including cell phones, watches, and tablets) and place in your backpack.

- No electronic devices of any kind are permitted.

- No hoods, hats, or earbuds allowed.

- Be sure to visit the rest room prior to the exam.

In addition, you will be asked to sign the honor pledge at the top of the exam:

"I recognize the importance of personal integrity in all aspects of life and work. I commit myself to truthfulness, honor and responsibility, by which I earn the respect of others. I support the development of good character and commit myself to uphold the highest standards of academic integrity as an important aspect of personal integrity. My commitment obliges me to conduct myself according to the Marquette University Honor Code."

Name ________________________ Date ____________

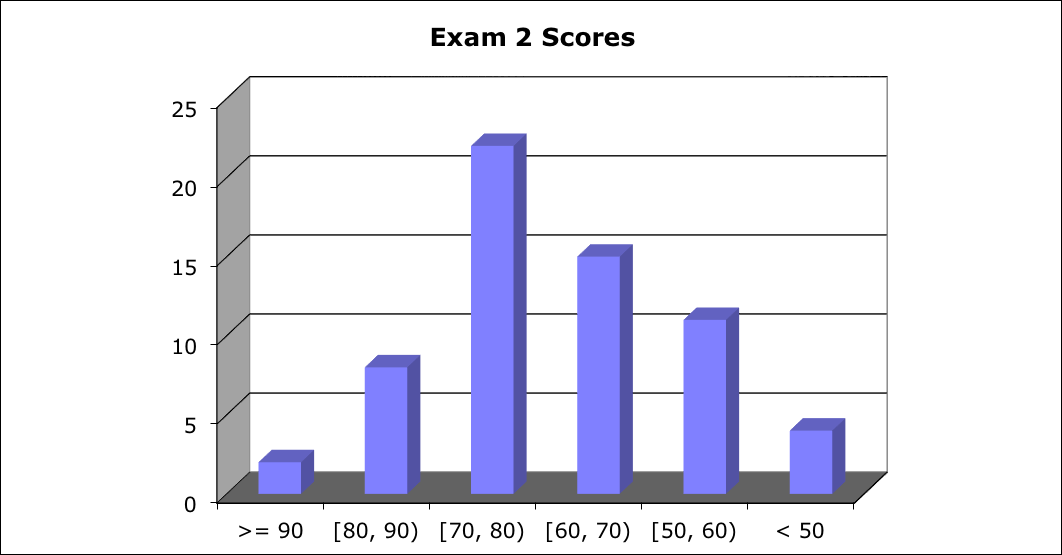

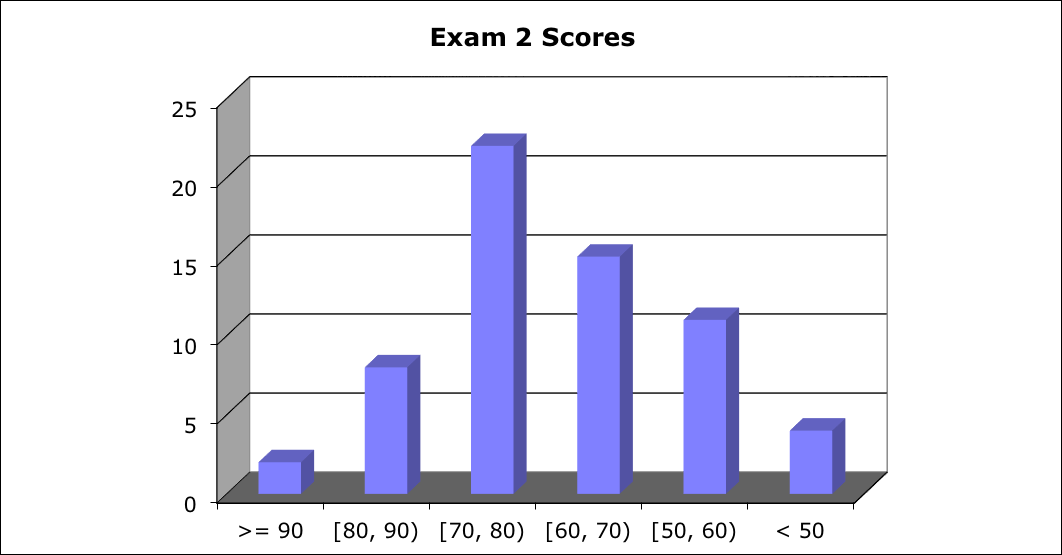

Score Distribution:

Median: 70; Mean: 68.3; Standard Deviation: 15.6

These shaded blocks are intended to suggest solutions; they are not intended to be complete solutions.

Problem 1 Definitions

Problem 2 Disk Block Allocation

Problem 3 Virtual Memory

Problem 4 Mailboxes

Problem 5 Tricky C

Problem 1 Definitions

Compare and contrast each pair of terms (in the context of computer operating systems):

- SJN and Priority for process scheduling

- Deadlock and starvation

- Memory locality and disk locality

- Rotating mechanical drives and solid state drives

- Thrashing and page fault (in the context of memory management)

- Contiguous, linked, and indexed disk block allocation

Hint: "Compare and contrast A and B" should evoke a template for your answer:

- Define A

- Define B

- How are they similar?

- How are they different?

- Anything else to say?

If you did not follow the template, you probably were penalized 8 points, not quite 2 points per part.

Specific questions were chosen from this collection (This list is not exhaustive for future exams):

- Active vs. Working set (in the context of memory management). Both concern memory addresses used by a program. The working set (Section 9.6.2) is the (fuzzy) set of pages the program is "naturally" using at the moment. The active set is the set of pages actually stored in memory at the moment. We hope that the active set contains the working set, more or less. When the working set is significantly larger than the active set, we get thrashing. However, I must cut you some slack, though, because our text makes no mention of the term "active set."

- Address binding (8.1.2) is the tying of a virtual address to a physical address. When do we know the physical address of a particular line of code of variable? Addresses can be bound at language design time, at language implementation time, at compile time, at load time, or during execution. Virtual memory is a technique for binding addresses during execution.

- Atomic execution [p. 210]: An instruction is implemented as one uninterruptable unit.

- Busy waiting [p. 213]. Waiting while holding the CPU, typically waiting by loop, doing nothing. For example

while (TestAndSet(&lock) ; // Does nothing

A process in a Waiting state (in the sense of the Ready-Running-Waiting state diagram) is not busy waiting because it is not holding the CPU.

- Cooperating process [p. 203]: One that can affect or be affected by other processes executing in the system.

- CPU-I/O burst cycle [p. 262]: Processes typically alternate between periods of high CPU activity and periods of high I/O activity

- Critical-Section problem [p. 206] solution must provide mutual exclusion, must allow each process to make progress, and must ensure the time a process must wait to enter its critical section is bounded.

- Deadlock vs. starvation [p. 217]. Both concern indefinite wait. "A set of processes is in a deadlocked state when every process in the set is waiting for an event that can be caused only by another process in the set." Starvation is also an indefinite wait not satisfying the definition of deadlock, often due to an accidental sequence of events. Starvation can occur in scheduling, but it can occur in other contexts.

- Deadlock necessary conditions [p. 318]: Mutual exclusion, Hold and wait, No preemption, Circular wait.

"Wait ON" vs. "Wait FOR." One waits ON superiors, to take care of, or to serve; e.g., a store clerk waits ON customers. One waits FOR peers, e.g., you might wait for a friend to go to the lab. A server process might be said to "wait on" a client process, but process scheduling, mutual exclusion, deadlock, etc., involve processes waiting FOR each other.

- Demand paging (9.2). Pages of virtual memory are loaded into physical memory only as they are needed.

- Error-correcting code [p. 479]: Encoding data is such a way that a certain number of errors can be detected and a (smaller) number of errors can be corrected. (Circular statements such as "Error-correcting codes are codes for correcting errors" received zero points.) Encryption codes protect against unauthorized access.

- File (p. 504). A named collection of related information recorded on secondary storage.

- File access: Sequential vs. direct. Sequential access (11.2.1) processes information in the file strictly in order. Direct access (11.2.2) can access records in a file in no particular order. "File access" concerns access to information within a file, not to a list of files. That would be accessing a directory. "File access" does not depend on all or a part of the file being in memory.

- File-System mounting (11.4). The operating system places a file system (typically a disk or equivalent) at a specified location within the file system. For example, Windows mounts drives as letters in the MyComputer "folder." Did you ever wonder what Windows would do if you tried to mount more than 26 drives?

- Indexed file access, Similar to direct file access, except that an index is used to record pointers to blocks of a file. To find a record in the file, we search the index and use the pointer to access the file directly. Not the same as direct file access.

A different meaning is to store (index) the contents of many files, to that a desired file can be found quickly.

The first meaning is for accessing records of a single file; the second is for accessing many files.

- Internal vs. external fragmentation [p. 363]. Both are wasted memory. Internal fragmentation is within a page, segment, partition, or disk block. External fragmentation is memory that is free, but it is in such small units that it cannot be allocated effectively. It is wasted memory outside a segment, partition, or disk block. Paging avoids external fragmentation.

- Locality [p. 428]. The concept that if a location in memory (or on disk) is referenced, the probability increases that nearby locations will be referenced soon. Locality makes the entire memory hierarchy effective.

- Logical address space vs. Physical address space (Sect. 8.1.3)

- Memory hierarchy Processor registers > cache levels 1, 2, and 3 > main memory > disk cache > local disk storage > LAN storage > remote storage > off-line storage > remote storage. Trade-offs between speed, capacity, volatility, and cost.

- Memory mapped files [p. 430]. Using virtual memory techniques to treat a sequentially accessed file as routine memory access.

- Monitor vs. semaphore (for mutual exclusion). See Section 5.8 vs. 5.6. Both are means to enforce mutual exclusion. "The monitor construct ensures that only one process at a time can be active within the monitor." In Java, the keyword synchronized enforces a monitor-like discipline. Counting or binary semaphores provide mutual exclusion through indivisible operations wait() and signal().

- Mutual exclusion [p. 206] If one process P1 is executing in its critical section, then no other processes can be executing in their critical sections.

- NAS = Network-Attached Storage [p. 471]; SAN = Storage-Area Network [p. 472].

- Page vs. Page frame [p. 366]. A page is a unit of the virtual (apparent) memory of a process. A page frame is a physical location in memory.

- Page fault [p. 403]. CPU attempts to access a memory location on a page that is not found in the page table.

- Page replacement (9.4): FIFO, Optimal, LRU, approximate LRU, Second-chance.

- Preemption - Taking resources away from a process that holds them. Preempting a running process by a timer interrupt is one example, but in principle, the operating system could preempt any resource. Preemption is more than interruption. Preempting a running process takes away the CPU resource, but not necessarily its other resources.

- Preemptive scheduling (6.1.3).

In non-preemptive scheduling, CPU-scheduling, a process keeps the CPU until the process releases the CPU by terminating or by switching to a waiting state. In preemptive scheduling, the CPU can be taken away, most commonly in response to a timer interrupt. Preemptive scheduling concerns one resource, the CPU.

- Priority inversion [p. 217]: A high priority process is forced to wait for a lower priority process to release some resource(s).

- Priority scheduling [p. 270]: A process scheduling strategy which selects from among processes in the Ready list the one with the highest priority. May be used either with or without preemption.

- Processor affinity vs. load balancing (6.5.2). Both concern multi-processor scheduling. Processor affinity suggests a process should remain on the same processor to exploit caching. Load balancing suggests a process should be moved from an overloaded processor to a lightly loaded processor.

- Proportional share real-time CPU scheduling [p. 289]: Allocate T shares among all applications. An application can receive N shares of time, ensuring that the application is allocated N/T of the total processor time.

- Race condition [p. 205]: Several processes access and manipulate the same data concurrently, and the outcome depends on what happens to be the order of execution.

- RAID [p. 484]. Redundant arrays of independent/inexpensive disks use to provide data redundancy and rapid access.

- Rate-monotonic real-time CPU scheduling [p. 287]: Upon entering the system, each periodic task is given a static priority inversely based on its period. If a lower priority process is running, and a higher priority process becomes available to run, the lower priority process is interrupted.

the execution depends on the particular order in which the accesses take place.

- Readers-writers problem (5.7.2) is one of the classical problems of synchronization. Multiple reading processes and multiple writing processes need to share access to a single shared data resource.

- Resource allocation graph (7.2.2) is a graphical representation of processes i) requesting and ii) holding resources.

- Round-robin scheduling (6.3.4) is a preemptive schedule algorithms in which the Ready List is treated as a FIFO queue. Your answer needed to mention both the order in which processes are scheduled (FIFO) and preemption.

- Semaphore [p. 213]: An integer variable accessed only through the atomic operations wait() and signal().

- Shortest Job Next (SJN) [p. 267]: (or Shortest Job First) A process scheduling strategy which selects from among processes in the Ready list the one expected to take the shortest time to complete its CPU burst, that is, until it next waits for some resource. Requires OS to have a good estimate of how long each process will run. Typically not used with preemption, although we might preempt if estimated time is exceeded.

- Swapping [p. 385]. Similar in concept to paging, except that the units are entire processes, rather than pages.

- Starvation [p. 217]: A process may wait indefinitely. Broader than just scheduling.

- SSTF = Shortest-seek-time-first [p. 474]; C-SCAN [p. 476].

- Thrashing [p. 425]. Behavior of a process that does not have "enough" page frames allocated to it, characterized by frequent page faults.

- Working set [p. 427].

Problem 2. Disk Block Allocation

Consider a contiguous disk block allocation scheme. Our disk has 20 blocks of memory. All blocks are the same size. The operating system occupies disk blocks 0 - 5, so user files use blocks 6 - 19. When a new user file is created, it requests the number of disk blocks it needs. Each file can use only contiguous blocks. A user file may never ask for more blocks, and it releases disk blocks only when the file is deleted.

Initially, at time t = 0, the only user file stored on the disk is file P1, and it occupies disk blocks 10 - 14. Additional files appear at the times shown, with disk block requests as shown:

| time | operation | # blocks | | time | operation | # blocks |

| 3 | create P2 | 4 | | 14 | delete P2 | |

| 4 | create P3 | 2 | | 17 | create P7 | 1 |

| 5 | create P4 | 1 | | 20 | delete P3 | |

| 10 | delete P1 | | | 22 | create P8 | 3 |

| 11 | create P5 | 2 | | 33 | create P9 | 4 |

| 13 | create P6 | 2 | | 36 | delete P8 | |

Hint: You are welcome to work on the exam pages.

- Describe the first-fit strategy for file allocation.

Show where the first-fit strategy places each file:

| Block | t = 0 | 3 | 4 | 5 | 10 | 11 | 13 | 14 | 17 | 20 | 22 | 33 | 36 |

| 0 - 5 | O P E R A T I N G S Y S T E M |

| 6 | | | | | | | | | | | | | |

| 7 | | | | | | | | | | | | | |

| 8 | | | | | | | | | | | | | |

| 9 | | | | | | | | | | | | | |

| 10 | P1 | | | | | | | | | |

| 11 | | | | | | | | | |

| 12 | | | | | | | | | |

| 13 | | | | | | | | | |

| 14 | | | | | | | | | |

| 15 | | | | | | | | | | | | | |

| 16 | | | | | | | | | | | | | |

| 17 | | | | | | | | | | | | | |

| 18 | | | | | | | | | | | | | |

| 19 | | | | | | | | | | | | | |

Explain any non-obvious choices you made.

- Describe the best-fit strategy for file allocation.

Show where the best-fit strategy places each file:

Explain any non-obvious choices you made.

- [NOT included on exam] Describe the worst-fit strategy for file allocation.

Show where the worst-fit strategy places each file:

Explain any non-obvious choices you made.

- Which is the best strategy for contiguous disk block allocation? Why?

(Hint: The best strategy might be another strategy I did not ask you to simulate.)

- First fit:

| Block | |

0 |

3 |

4 |

5 |

10 |

11 |

13 |

14 |

17 |

20 |

22 |

33 |

36 |

| 6 | |

|

|

|

|

|

|

|

|

P7 |

|

|

|

|

| 7 | |

|

P2 |

|

|

|

|

|

|

|

|

|

|

|

| 8 | |

|

|

|

|

|

|

|

|

|

|

P8 |

|

|

| 9 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 10 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 | |

|

|

|

|

|

P5 |

|

|

|

|

|

|

|

| 12 | |

P1 |

|

|

|

|

|

|

|

|

|

|

|

|

| 13 | |

|

|

|

|

|

|

P6 |

|

|

|

|

|

|

| 14 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 15 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 16 | |

|

|

P3 |

|

|

|

|

|

|

|

|

|

|

| 17 | |

|

|

|

P4 |

|

|

|

|

|

|

|

|

|

| 18 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 19 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

P9 does not fit

- Best fit:

| Block | |

0 |

3 |

4 |

5 |

10 |

11 |

13 |

14 |

17 |

20 |

22 |

33 |

36 |

| 6 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 7 | |

|

P2 |

|

|

|

|

|

|

|

|

P8 |

|

|

| 8 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 9 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 10 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 | |

|

|

|

|

|

|

P6 |

|

|

|

|

|

|

| 12 | |

P1 |

|

|

|

|

|

|

|

P7 |

|

|

|

|

| 13 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 14 | |

|

|

|

|

|

|

|

|

|

|

|

P9 |

|

| 15 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 16 | |

|

|

P3 |

|

|

|

|

|

|

|

|

|

|

| 17 | |

|

|

|

P4 |

|

|

|

|

|

|

|

|

|

| 18 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 19 | |

|

|

|

|

|

P5 |

|

|

|

|

|

|

|

What if there are several holes equally good? Most assume allocation from the top of an available block.

Many students said, "closest in size." Suppose a process needs 5 blocks, and there are blocks of size 4 and 8 available. 4 is closer to 5 than 8. Especially under time pressure, you must be wary of the Law of Unintended Consequences. Is there a valid interpretation of your words that is different from what you intend?

- Worst fit:

| Block | |

0 |

3 |

4 |

5 |

10 |

11 |

13 |

14 |

17 |

20 |

22 |

33 |

36 |

| 6 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 7 | |

|

|

P3 |

|

|

|

|

|

|

|

|

|

|

| 8 | |

|

|

|

P4 |

|

|

|

|

|

|

|

|

|

| 9 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 10 | |

|

|

|

|

|

P5 |

|

|

|

|

|

|

|

| 11 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 12 | |

P1 |

|

|

|

|

|

P6 |

|

|

|

|

|

|

| 13 | |

|

|

|

|

|

|

|

|

P7 |

|

|

|

|

| 14 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 15 | |

|

|

|

|

|

|

|

|

|

|

P8 |

|

|

| 16 | |

|

P2 |

|

|

|

|

|

|

|

|

|

|

|

| 17 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 18 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 19 | |

|

|

|

|

|

|

|

|

|

|

|

|

|

What if there are several holes equally good?

P9 does not fit

- Depends. What is "best?"

In this example, Best fit is best because it could handle Process P9. That does NOT mean best fit is always best. Besides, best fit is more expensive to run. Often, Worst Fit is best.

Problem 3. Virtual Memory

Explain how virtual memory makes it possible for you to run a program that requires 8 Gb for code and data on a computer with 4 Gb of physical memory, while running the operating systems and 10's of other processes.

Hint: Picture(s) are welcome.

Very few students provided any detail on "How virtual memory makes it possible ...". The question asks you to describe how virtual memory works, not to say simply, "We use virtual memory." Points include:

- 8 Gb program requires 64 bit OS

- Program is stored on disk

- Composed of logical pages

- Virtual addresses limited only by 264 possible addresses

- Page size plays nicely with disk block size

- Physical memory is divided into logical page frames

- Page table contains in-memory page frame and on-disk address of each page

- When CPU needs to access memory, address is separated into <page number> and <offset>

- CPU asks page table for <page number>

- If page is present in memory (page hit), page table returns <page frame number>

- Address register combines <page frame number> with <offset> to access memory

- If page is not present in memory (page fault), send <disk address> to disk controller

- Move process to a wait state

- OS selects a victim page in memory to be replaced by the new page

- There are several page replacement strategies

- If page is "dirty" (contents changed), it must be written back to disk before it is replaced

- Disk controller writes page from disk into the victim's page frame in physical memory

- Sleeping process is awakened into READY list

- When it runs, its requested memory access completes

- Process repeats for each memory access

- Strong need for good hardware support

In principle, our 8 Gb program could run with only one page frame (perhaps 1 Kb), although it will run faster with several ten's of physical page frames. Hence, the 4 Gb physical memory is shared among tens of processes sharing time in the CPU.

Virtual memory is no more or less about pointers than is physical memory. Virtual memory does not introduce an extra level of memory indirection.

Virtual memory does not impact malloc() and free(). Malloc() and free() work in the virtual address space, and virtual memory maps the space they manage to physical memory blocks. Virtual memory knows nothing of malloc() and free(), and malloc() and free() know nothing of virtual memory.

We do not use virtual memory when physical memory is exhausted. If the hardware and operating system supports virtual memory, (almost) everything is done in virtual memory.

Caching? Virtual memory uses physical memory as a cache for disk storage. In modern systems, the principles of virtual memory are repeated for each level of a memory caches, but I did not require any mention of caching in the answer to this question.

The answer is not to add an SSD or to connect to network storage. Virtual memory is not accessed over a network connection.

In answering this question, you are not required to say anything about multiple processes. Nor is anything necessarily multi-core about this question. Paging is significantly more subtile if pages are shared by multiple cores.

Credit award was somewhat subjective, depending on the level of detail. Lower point totals were not necessarily wrong, but less complete. It did not count much to say, "We use virtual memory or paging", because that was given as the title of the question.

Problem 4. Mailboxes

In Project 7, you implemented a simple mailbox system. List TODOs that would be necessary to support

- A large number of messages waiting to be delivered

- One message addressed to several processes process

- Multiple message queues

- Large number of messages waiting to be delivered.

TODO:

- Add data structure to PCB to store multiple waiting messages

- Modify send() and recv() to enforce queue discipline for arriving and departing messages

- One message sent to multiple processes.

TODO:

- How do we specify multiple recipients? For example, create sendmult() that takes an array of destination pids or variable list of destination pids

- Design error strategy if message can only be delivered to subset of destinations

- Multiple message queues.

TODO:

- To which queue do we wish to send? Modify send() to take a queue ID

- Modify PCB to include array of message queues

- From which queue do we want to receive? Modify recv() to read a message from any queue

- Create recvfrom() that takes a queue ID parameters

Many variants are possible and correct.

Most responses were too vague. By asking "List TODOs ...", we asked for specific modifications to which functions. By asking "List TODOs ...", we hoped you would give a level of detail you wished to see in your assignments. Be specific.

Point awards were somewhat subjective. Generally, if you outlined the concept of queues with modest detail, you earned 15 points.

Many students seemed to mention every concept we have studied, although most have little to do with message passing.

There is no need for any concept of priorities. In practice, while one can assign priorities to messages (how are the assignments made?), it is cleaner to use different queues for different priorities.

If you wanted to talk about messages having priorities, you needed to address how the priorities get assigned and how they are managed. There is no need for aging in a queue of messages.

Messages have almost nothing to do with premption.

Messages are not scheduled.

If a message is sent and not received, is that starvation? I don't think so.

Problem 5. Tricky C

The code below assumes it is being run in XINU. It models the classic Dining Philosophers problem with MAXFORKS forks (represented by semaphores) and MAXFORKS philosophers (represented by processes). Each philosopher process has an assigned ID (0 <= i < MAXFORKS). This solution assigns to each philosopher process a different priority, equal to the ID value.

void philosopher(int id, semaphore forks[]) {

int myLeft, myRight;

myLeft = id;

myRight = (id + 1) % MAXFORKS;

while (1) {

printf("Philosopher %d thinks\n", id);

sleep(200);

wait(forks[myLeft]);

wait(forks[myRight]);

printf("Philosopher %d eats\n", id);

sleep(200);

signal(forks[myRight]);

signal(forks[myLeft]);

}

}

int main(int argc, char **argv) {

semaphore forks[MAXFORKS];

for (i = 0; i < MAXFORKS; i++) {

forks[i] = newsem(1);

ready(create((void *)philosopher, INITSTK, i, "PHIL",

2, i, forks), RESCHED_NO);

}

resched();

...

}

- Does this instance of the Dining Philosophers potentially deadlock? Explain.

- If not, make a rigorous argument for why not.

- If so, change the philosopher process to prevent deadlock. Explain.

Hint: You are welcome to work on this page of the exam. Be sure your name is on it.

Dr. Brylow writes:

The exam version has most of the output statements removed to make the code shorter,

but if you put a printf() after each wait() and signal(), you get something like:

Philosopher 4 has acquired left fork

Philosopher 3 has acquired left fork

Philosopher 2 has acquired left fork

Philosopher 1 has acquired left fork

Philosopher 0 has acquired left fork

and then deadlock.

Interestingly, as I was capturing the output for you above, I realized

that this question may be even subtler than I thought. I cleaned the output

statements out to make the code shorter for the exam, but that also

eliminates the deadlock. Without a potentially blocking I/O call between the

two wait() calls, nothing can stop the highest priority process from

acquiring both his forks if the scheduler is simple priority-based. Even if

he is preempted, priority scheduling indicates that the highest numbered

philosopher will be ready to run again before any lower priority

philosophers.

If the scheduler uses aging, or if there is any blockable call between

the two wait() calls, then deadlock becomes possible again.

But that's really just a quirky, non-circular property of this particular

deterministic implementation of the Dining Philosophers. If you add random

wait times into the mix, it is still possible for the highest priority

philosopher to get his first fork, but not the other, and then we have

potential circular wait again.

Ahhh, concurrence.

Corliss: I gave full credit to some solutions that said, "Yes," and full credit to some solutions that said, "No." I looked for a careful analysis.

Several students looked for four conditions of deadlock, but did not apply the search appropriately in this scenario.

We have mutual exclusion. Philosophers do not share forks simultaneously, although you could design a solution that does.

We have no preemption. Preemption would take a fork away from a philosopher who holds it. No philosopher takes a fork away from a neighbor, although you could design a solution that does.

We have hold and wait. Once a philosopher acquired a fork, that fork is held while the holder waits for the other fork, although you could design a solution in which a philosopher releases his first fork if his second is not available.

Hence, the question revolves around circular wait. As formulated, circular wait is possible. To prevent deadlock, you could enable sharing or preemption, or you could force the return of one fork if the other is not available, but the easiest solutions prevent circular wait in some way, e.g., insert a mutex around the two wait() calls.

Release()? But there is no preceding acquire(). Implicitly, wait() acquires a fork, and signal() releases it.

Reverse signals()? No, the deadlock may have already occurred. The problem is with the wait() calls.

Check in advance? If you check whether both forks are available before you pick them up, your solution is susceptible to a race condition, resulting in failure to prevent deadlock.

The calls to sleep() do not matter. Their only affect is to force interrupts to occur in unfortunate places. There is no solution in moving the calls to sleep() around. Even if the wait() calls are close together, there still could be an interrupt between them.

Problem NOT USED. Deadlock prevention

Describe four strategies for preventing deadlock in an operating system. For each strategy,

describe what that strategy would mean in the Dining Philosophers problem and explain how

that strategy is applied in one example from a modern operating system.

Hint: You answer should have the form:

- Name of strategy

- Description

- In the Dining Philosophers problem, this strategy would mean ...

- Windows/Mac/Linux/... uses this strategy for ...

See Section 7.4, p. 323 - 327

- Deny mutual exclusion - Allow processes to share, e.g., memory or screen. Philosophers share chopsticks.

- Deny hold and wait - If a request from a process for resources is denied, the process relinquishes the resources it holds, e.g., a process blocking itself on some resource relinquishes the CPU.

If a philosopher cannot pick up both chopsticks, he must relinquish any he holds. Alternatively,

request and acquire both chopsticks before he starts.

- Deny no preemption - Allow a process to preempt a resource from a process that holds it, e.g., preemptive scheduling of the CPU. A philosopher can take a chopstick away from his neighbor.

- Deny circular wait - Request resources in some pre-determined order, e.g., a process must be allocated at least a little memory before it can run and request other resources. Either philosophers are numbered and

pick up chopsticks in order of their numbers, or chopsticks are numbered and must be picked up in order.

Many students were confused by the implied double negatives. For example,

NOT(mutual exclusion) means share. If anyone should be able to parse NOT(NOT(.)), it should be us.

Prevention is one of four strategies our book gives for handling deadlocks. Avoidance, detection, recovery, and doing nothing are not prevention strategies.

| |