|

Your second midterm examination will appear here. It covers through page 342.

It will be a 50 minute, closed book exam.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

-

See previous exams:

Questions will be similar to homework questions from the book. You will

be given a choice of questions.

I try to ask questions I think you might hear in a job interview. "I

see you took a class on Operating Systems. What can you tell me about ...?"

See also Dr. Barnard's notes.

He was using a different edition of our text, so chapter and section numbers

do not match, but his chapter summaries are excellent.

Results on exam 1 suggest you may wish to maintain a vocabulary list.

Directions:

Read and follow the directions.

- Write on four of the five questions. If you write more than four questions, only the first four will be graded. You do not get extra credit for doing extra problems.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- Be sure I can read what you write.

- If I ask questions with parts

- . . .

- . . .

your answer should show parts

- . . .

- . . .

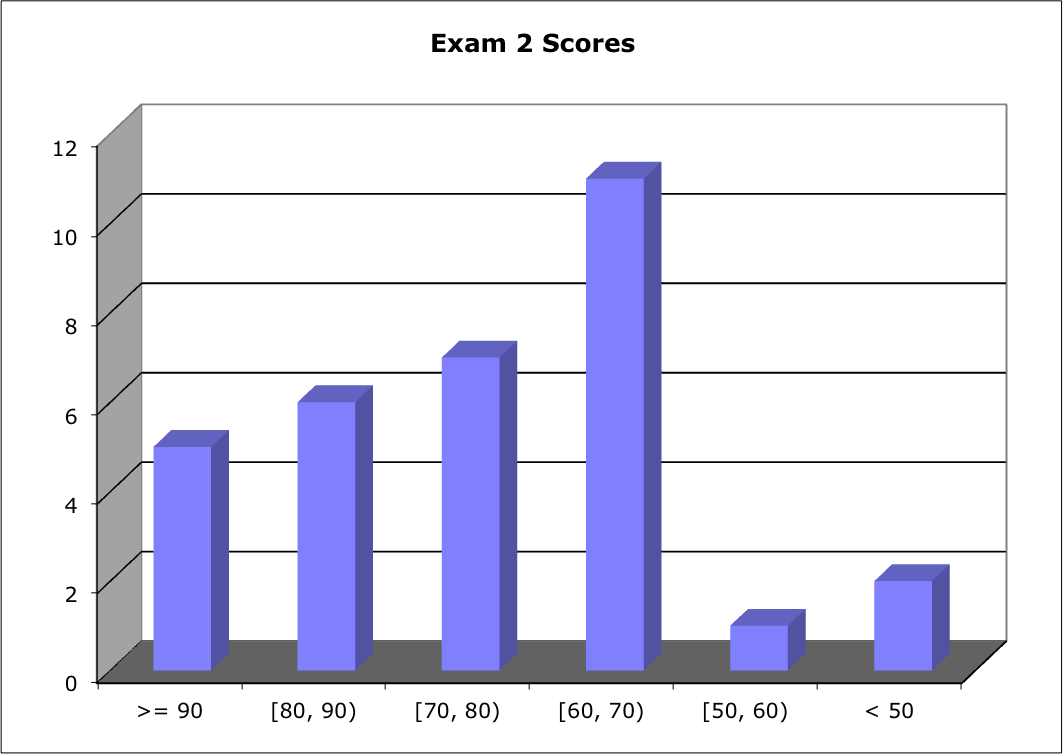

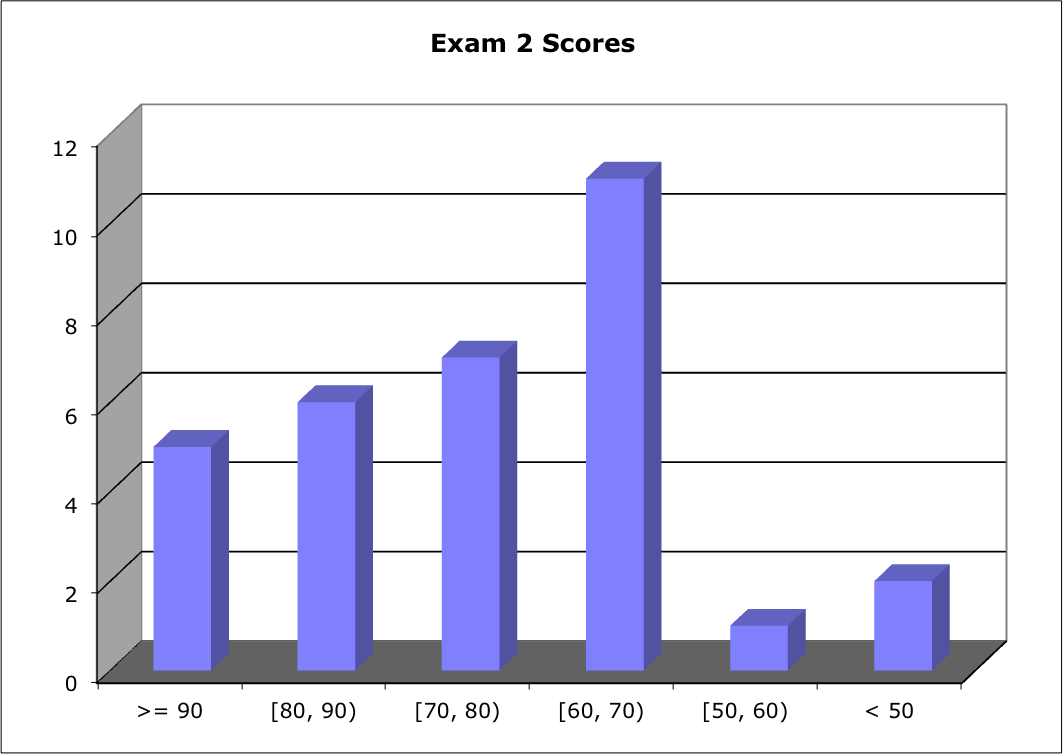

Score Distribution:

Range: [44 - 96]; Median: 72; Mean: 72.4; Standard Deviation: 13.1

These shaded block are intended to suggest solutions; they are not intended to be complete solutions.

Problem 1 Definitions

Problem 2 Embedded Xinu mailboxes

Problem 3 Paging

Problem 4 Tricky C - prioritize()

Problem 5 Mutual Exclusion - TestAndSet

Problem 1 Definitions

- List five scheduling algorithms

and give a brief description of each.

- List the necessary conditions for deadlock

and give a brief explanation of each

- Give careful definitions for each term (in the context of computer operating systems):

- Thread

- Atomic instruction

- Busy waiting

- Preemption

- (9 points) Several people answered with page replacement strategies, which were not even in the scope of this exam. See Section 5.3

5.3.1 First-come, first-served

Easy to understand

Not preemptive

Long average wait

5.3.2 Shortest-job-first

Provably optimal

Preempt or not

Cannot be implemented. Approximate implementation:

- Ask - Process should know how long it will run?

- Real-time may have counted clock cycles

- You know better than the OS

- Preempt at 2 x estimate?

- Penalize under-estimates?

- Predict CPU bursts?

5.3.3 Priority

Preempt or not

starvation ==> aging

5.3.4 Round-robin

Preemptive

Quantum = ?

5.3.5 Multilevel queue

See Fig. 5.6

Strict, time-slice, aging

5.3.6 Multilevel feedback-queue

Processes move between queues

Greedy are penalized; Good is rewarded

You could also list real-time strategies from Chapter 19, e.g., Earliest Deadline First.

I accepted at most one feasible-but-BAD strategy per student. For example, Longest- Job First or a stack discipline could be used, but whining by users would be deafening! Why?

- (8 points) See Section 7.2, p. 285+

- Mutual exclusion - Only one process can hold/use a resource at once. A critical section is but one type of resource.

- Hold and wait - When a process acquires a resource and it needs another resource, it holds the first and waits for the second.

- No preemption - The operating system cannot take a resource away from a process hold it. "No preemption" is very similar to the "hold" of "hold and wait." Is there a difference? Circular explanations such as "Resources cannot be preempted" or "Preemption is not allowed" received zero credit. You must explain what preemption means.

- Circular wait - There is a set of processes {P0, ... , Pn-1} with the property that process Pi is waiting for a resource held by process Pi+1 mod n, for i = 0, ..., n-1. A resource allocation graph contains a cycle. Circular explanations such as "Waiting processes form a circle" received zero credit.

Strategies for deadlock prevention deny each of the four necessary conditions, which required you to list the four necessary conditions. If you answered correctly "what are the four strategies for preventing deadlock?", I penalized that answer by 2 points for not knowing the difference, but the question you answered was harder.

- (2 points each) Definitions:

- A thread is a basic unit of CPU use. A process may include several threads. A thread includes a thread ID, a program counter, a register set, and a memory stack. See p. 153.

- A machine language instruction that cannot be interrupted. Once it starts, it must complete. See p. 232.

- Waiting while holding the CPU, typically waiting by loop, doing nothing. See p. 235. For example

while (TestAndSet(&lock) ; // Does nothing

A process in a Waiting state (in the sense of the Ready-Running-Waiting state diagram) is not busy waiting because it is not holding the CPU.

- Preemption - Taking resources away from a process that holds them. Preempting a running process by a timer interrupt is one example, but in principle, the operating system could preempt any resource. Preemption is more than interruption. Preempting a running process takes away the CPU resource, but not necessarily its other resources. Did you recognize that "preemption" here should mean the same as "preemption" as you used it in part b)?

Problem 2 Embedded Xinu mailboxes

Our Embedded Xinu mailbox implementation features an asynchronous,

bounded-capacity send() function for processes to communicate. Describe

what would be required to build a synchronous send with arbitrary capacity.

"Send with arbitrary capacity" means that a message can be arbitrarily large, not that there can be arbitrarily many of them.

I number the necessary points you need to make so that on your paper, I can indicate by number the points for which I consider you to have recognized. For full credit, you needed to say something about four out of five items.

#1: First, sending an arbitrary amount of interprocess communication would

require dynamic memory allocation -- the capacity to demand additional heap

space at run time from the O/S.

#2: Second, the send() function would need an additional parameter to specify

the size of the data when called, or else the message must end with some sentinal.

#3: An implementation of arbitrary capacity send() would allocate a new

buffer of the specified size, copy over the data to send, and store the

pointer to that buffer in the process control block.

#4: The receiving process could then either receive the entire buffer (and be

ultimately responsible for freeing it,) or have a read that copies from the

buffer and frees it. There are some interesting design decisions involved

in building a recv() function that needs to be able to handle arbitrary

amounts of data.

#5: To make send() synchronous, we would only need to have send() block the

sending process in a wait state until a receiving processes completed its

recv() call. An additional field in the process control block would need to

keep track of which sending process was blocked on the message receive.

#6 (optional): Additionally, one might develop a variant of send() that would timeout after

a lengthy wait if no receiver process acted.

We were disappointed that relatively few students had the confidence to write this question. It tests whether you understand two pairs of fundamental operating systems concepts: bounded vs. arbitrary size and synchronous vs. asynchronous. You might want to be sure you understand the implications of those concepts in several operating systems settings by May.

Problem 3 Paging

You are interviewing for a job as a heavy-duty C programmer. The interviewer asks, "I

see you took a class on Operating Systems. What can you tell me about how paging works as a memory management strategy?"

How do you answer?

Hint: I expect a moderately detailed answer such that it might take about 5 minutes to give orally. You want to impress the interviewer that you understand paging. Depending on your writing density, that probably is at least two pages in writing. Pictures are welcome, if you explain what they mean.

Hint: This question asks about paging, not about virtual memory. You may talk about virtual memory if that helps you explain paging. Virtual memory is beyond the scope of this exam. Virtual memory uses paging, so they are related, but paging is a concept our text presents on its own.

Compared with Problem 2, you should be able to BS your way through this question. The challenge is to be specific enopugh to demonstrate that you are not just BSing your way through it. Several students seemed to assume the interviewer was an HR person, and you used simple analogies with little depth. If I wanted depth, perhaps I should have said explicitly that the interviewer was the person who will be your team lead, if you get the job?

Summarize Section 8.4 Paging, pp. 328 - 337.

You should mention and describe

- Logical vs. physical address

- Logical vs. physical memory

- Page table

- Page vs. page frame

- External vs. internal fragmentation

- Translation Look-aside Buffer (TLB)

You might discuss

- Protection

- Page sharing

What are the advantages?

What are the disadvantages?

What difference does it make in the applications you will write?

What difference does it make to the users of the applications you will write?

(If this is a job interview, always tie every answer back to your performance in the job.)

You may discuss virtual memory concepts (but you do not have to):

- Page hit vs. page fault

- Demand paging

- Page replacement strategies

Problem 4 Tricky C - prioritize()

Consider the implementation of prioritize() below:

/**

* Prioritize a process into a queue in descending key order.

* @param pid process id to prioritize

* @param q queue in which process should be prioritized

* @param key sorting key (priority, for example)

*/

void prioritize(short pid, queue q, ulong key)

{

short head, prev, next, tail;

head = queuehead(q);

tail = queuetail(q);

while ( (queuetab[next].key > key) && (next != tail) )

{ next = queuetab[next]->next; }

queuetab[pid].next = next; // Set next and prev fields of new node

prev = queuetab[next].prev;

queuetab[pid].prev = prev;

queuetab[prev].next = next; // Finish linking list

queuetab[next].prev = prev;

}

The queuetab array models our Embedded Xinu process queues, and consists

of structs with next, prev, and key fields.

Does this code correctly insert a process in priority order? If so,

state the conditions under which it works correctly. If not, point out what

is wrong.

If you thought we'd put correct code in a tricky C question, you don't know your instructors very well. If you expected only one error, you don't know your instructors very well. On the other hand, it might be really tricky of us to give you correct code?

There are several major errors in this code.

- The "->" operator in the body of the while-loop is a syntax error for a

statically allocated array like queuetab, so this will not even compile;

- Index variable "next" needs to start out one element past the head node

before the while-loop, or else we potentially are considering inserting in

front of the head node;

- The key comparison within the while-loop needs to be ">=", or else

processes with the same priority will not exhibit round-robin behavior;

- The key value is never assigned into the new node;

- The last two assignments statements do not correctly finish linking the

new node into the list.

On some papers, I made notations such as "#2", or "#5" to indicate I credited you for identifying error #2 or error #5 from the list above. If you wish to argue that what you have written on your exam paper shows you recognized an error for which I did not give you credit, you are welcome to talk to me - Corliss.

Once these errors are all fixed, the assumptions under which it works

correctly are:

- Pid is a valid process ID, and it is not already in a process queue;

- Queue 'q' is a valid process queue representation;

- The key field in the queue struct is large enough to store an unsigned int.

You earned full credit if you identified 3 of these 5 errors and did not say anything false. Identifying 2 of the 5 errors earned 18 points. Identifying 1 of the 5 errors earned about 10 points, provided you did not say anything else false.

One student pointed out this code (if it otherwise worked) was not thread-safe. After some reflection, I like that answer. Of course, it does not work, but I really like that this student made a connection with the theoretical discussions of critical sections. Well done.

My favorite answer: "You would be very lucky if this code works." No extra credit, though.

Problem 5 Mutual Exclusion - TestAndSet

Suppose we have an atomic hardware instruction TestAndSet whose action is described by the pseudocode:

boolean TestAndSet (boolean *target) {

boolean returnValue = *target;

*target = TRUE;

return returnValue;

}

The following pseudocode claims to implement mutual exclusion using TestAndSet:

SHARED:

boolean lock = ???; |

| |

line

0.0

0.1

0.2

0.3

0.4

0.5

0.6 |

Process 0:

while (TRUE) {

while (TestAndSet(&lock)) ;

/* CRITICAL SECTION */

lock = FALSE;

/* REMAINDER SECTION */

} |

line

1.0

1.1

1.2

1.3

1.4

1.5

1.6 |

Process 1:

while (TRUE) {

while (TestAndSet(&lock)) ;

/* CRITICAL SECTION */

lock = FALSE;

/* REMAINDER SECTION */

} |

- Suppose the scheduler happens to cause statements to be executed in the order shown below. Complete the blank columns in the table.

| line | lock

value |

Explain non-obvious occurrences |

| 0.0 | ??? | Execution begins with Process P0 |

| 0.1 | | |

| 0.2 | | |

| 0.3 | | |

| 0.4 | | |

| 0.5 | | |

| 0.6 | | P0 is interrupted; P1 is placed in RUNNING state |

| 1.0 | | |

| 1.1 | | |

| 1.2 | | |

| 1.3 | | |

| 1.4 | | |

| 1.5 | | |

| 1.6 | | End of tracing |

Or is that sequence of statement execution not possible? Explain.

- With what value ??? must the lock be initialized in order that this solution work? Explain.

- Suppose the scheduler happens to cause statements to be executed in the order shown below. Complete the blank columns in the table.

| line | lock

value |

Explain non-obvious occurrences |

| 0.0 | ??? | Execution begins with Process P0 |

| 0.1 | | |

| 0.2 | | |

| 0.3 | | P0 is interrupted; P1 is placed in RUNNING state |

| 1.0 | | |

| 1.1 | | |

| 1.2 | | |

| 1.3 | | |

| . . . | | End of tracing |

Or is that sequence of statement execution not possible? Explain.

- What is the "critical section problem?"

- What properties must be satisfied by a solution to the critical section problem?

- Is the pseudocode in this problem a solution to the critical section problem? Explain.

This is a test of careful desk-checking, as well as a test of your understanding of TestAndSet().

See pages 231 & 232 of our text.

- I choose to show the value of lock before the indicated statement is begun. If you choose to show the value of lock after the indicated statement is completed, your sequence of instructions is the same, but in most cases, the value of lock is just shifted up one line from mine. No big deal, but it would be kind to make your convention clear.

| line | lock

value |

Explain non-obvious occurrences |

| 0.0 | FALSE | Execution begins with Process P0 |

| 0.1 | FALSE | Enter first loop |

| 0.2 | FALSE | TestAndSet sets lock to TRUE

and returns FALSE

While 0.2 falls through |

| 0.3 | TRUE | Enter critical section |

| 0.4 | TRUE | |

| 0.5 | FALSE | Leaves critical section |

| 0.6 | FALSE | P0 is interrupted; P1 is placed in RUNNING state |

| 1.0 | FALSE | |

| 1.1 | FALSE | Enter first loop |

| 1.2 | FALSE | TestAndSet sets lock to TRUE

and returns FALSE

While 1.2 falls through |

| 1.3 | TRUE | Enter critical section |

| 1.4 | TRUE | |

| 1.5 | FALSE | Leaves critical section |

| 1.6 | FALSE | End of tracing |

Works fine.

- FALSE. If we initialize lock to TRUE, neither process could ever enter its critical section.

-

| line | lock

value |

Explain non-obvious occurrences |

| 0.0 | FALSE | Execution begins with Process P0 |

| 0.1 | FALSE | Enter first loop |

| 0.2 | FALSE | TestAndSet sets lock to TRUE

and returns FALSE

While 0.2 falls through |

| 0.3 | TRUE | Enter critical section

P0 is interrupted; P1 is placed in RUNNING state |

| 1.0 | TRUE | |

| 1.1 | TRUE | Enter first loop |

| 1.2 | TRUE | TestAndSet sets lock to TRUE

and returns TRUE

While 1.2 loops forever |

| | | Eventually, P1 is interrupted

Suppose P0 gets to run (NOT necessary)

P0 could then exit its critical section

and set lock = FALSE

P0 might be interrupted

P1 might get rescheduled

NOW |

| 1.2 | FALSE | TestAndSet sets lock to TRUE

and returns FALSE

While 1.2 falls through |

| 1.3 | TRUE | |

| . . . | | End of tracing |

You received full credit for this part if you stopped after saying "While 1.2 loops forever."

- Design a protocol allowing two processes to cooperate. When one process is executing in its critical section, no other process is allowed to execute in its critical section. -- Text, p. 227. It is OK of a process is interrupted while it is executing its critical section, so long as another process does not get into its critical section. Disabling interrups is one way to enforce mutual exclusion, but it is not a very good way, and it is certainly not the only way.

Actually, it is more subtle than that. Process P and Q might each have critical sections in which a shared variable A is updated, and Processed Q and R might each have critical sections in which a shared variable B is updated. However, a section updating A is nor critical with respect to a section updating B. Two different processes each can be in their critical sections simultanously if those sections are protecting different shared resources. That is beyond the scope of this exam. If you had written something like that, I probably would give you 100 on the entire exam!

- Must show it works:

- Mutual exclusion

- Progress

- Bounded waiting

Problem: Strict alternation

- Yes.

I'm curious. Was it helpful to allow/suggest working on pages of the exam? Did that save a little time/effort?

|