
Your final examination will be a two-hour, in-class examination.

You are permitted to bring and use one 3x5 paper study card, which you will submit with your exam.

It may be prepared in any manner you choose.

The exam covers Chapters 1 - 12, 16, and 17 of our textbook, plus Internet protocols discussed in class. It is a comprehensive exam.

No electronic devices of any kind are permitted.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

- See previous exams:

Section and page number references in old exams refer to the edition of the text in use when that exam was given. You may have to do a little looking to map to the edition currently in use. Also, which chapters were covered on each exam varies a little from year to year.

Questions may be similar to homework questions from the book. You will be given a choice of questions.

I try to ask questions I think you might hear in a job interview: "I see you took a class on Operating Systems. What can you tell me about ...?"

Preparation hints:

Read the assigned chapters in the text. Chapter summaries are especially helpful. Chapters 1 and 2 include excellent summaries of each of the later chapters.

Read and consider Practice Exercises and Exercises at the end of each chapter.

Review authors' slides and study guides from the textbook web site.

Review previous exams listed above.

See also Dr. Barnard's notes. He was using a different edition of our text, so chapter and section numbers do not match, but his chapter summaries are excellent.

Question: Could you send an email about what we REALLY should focus on as we prepare for the exam?

I ABSOLUTELY get it that the exam appears to you very differently from how it appears to me. You have 40 different exams to look at as examples of questions I might ask, so you are able to judge from what I DO, as well as from what I say. This year, since I am allowing you to bring a study card, there might be fewer questions taken directly from previous exams.

See what we claim as Course Goals and Objectives

Some questions are adapted from questions previous students have reported being asked about operating systems at an interview.

Of course, there will be a vocabulary question, in some form. You should AT LEAST know what each line in the Table of Contents means.

Of course, there will be a tricky C question.

I like to ask a question or two in which you apply knowledge you should know to a situation you may not have considered.

If I ask 6 questions (you write on 5) covering 12 chapters, a final examination is a random sampling process. I cannot possibly ask about everything. It may not look like it to you, but I try to ask primarily high-level conceptual questions, not tricky, detailed questions (except for the tricky C question, which is, well, tricky).

I am always tempted to ask, "What are the five BIG CONCEPTS of operating systems? What concept would you rate as #6? Why is it not as important as the first 5?" I will NOT ask that because I do not know how to grade it fairly, but it is a question you should be able to answer.

Read and follow the directions:

- Write on five of the six questions. Each question is worth 20 points. If you write more than five questions, only the first five will be graded. You do not get extra credit for doing extra problems.

- You are permitted to bring and use one 3x5 paper study card, which you will submit with your exam.

It may be prepared in any manner you choose.

- In the event this exam is interrupted (e.g., fire alarm or bomb threat), students will leave their papers on their desks and evacuate as instructed. The exam will not resume. Papers will be graded based on their current state.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- No electronic devices of any kind are permitted.

- Be sure I can read what you write.

- If I ask questions with parts a), b), . . ., your answer should show parts a), b), . . . as well.

- Someone should have explained to you several years ago that "X is when ..." or "Y is where ..." are not suitable definitions (possibly excepting the cases in which X is a time or Y is a location). "X is when ..." or "Y is where ..." earn -5 points.

- The instructors reserve the right to assign bonus points beyond the stated value of the problem for exceptionally insightful answers. Conversely, we reserve the right to assign negative scores to answers that show less understanding than a blank sheet of paper. Both of these are rare events.

The university suggests exam rules:

- Silence all electronics (including cell phones, watches, and tablets) and place in your backpack.

- No electronic devices of any kind are permitted.

- No hoods, hats, or earbuds allowed.

- Be sure to visit the rest room prior to the exam.

In addition, you will be asked to sign the honor pledge at the top of the exam:

"I recognize the importance of personal integrity in all aspects of life and work. I commit myself to truthfulness, honor and responsibility, by which I earn the respect of others. I support the development of good character and commit myself to uphold the highest standards of academic integrity as an important aspect of personal integrity. My commitment obliges me to conduct myself according to the Marquette University Honor Code."

Name ________________________ Date ____________

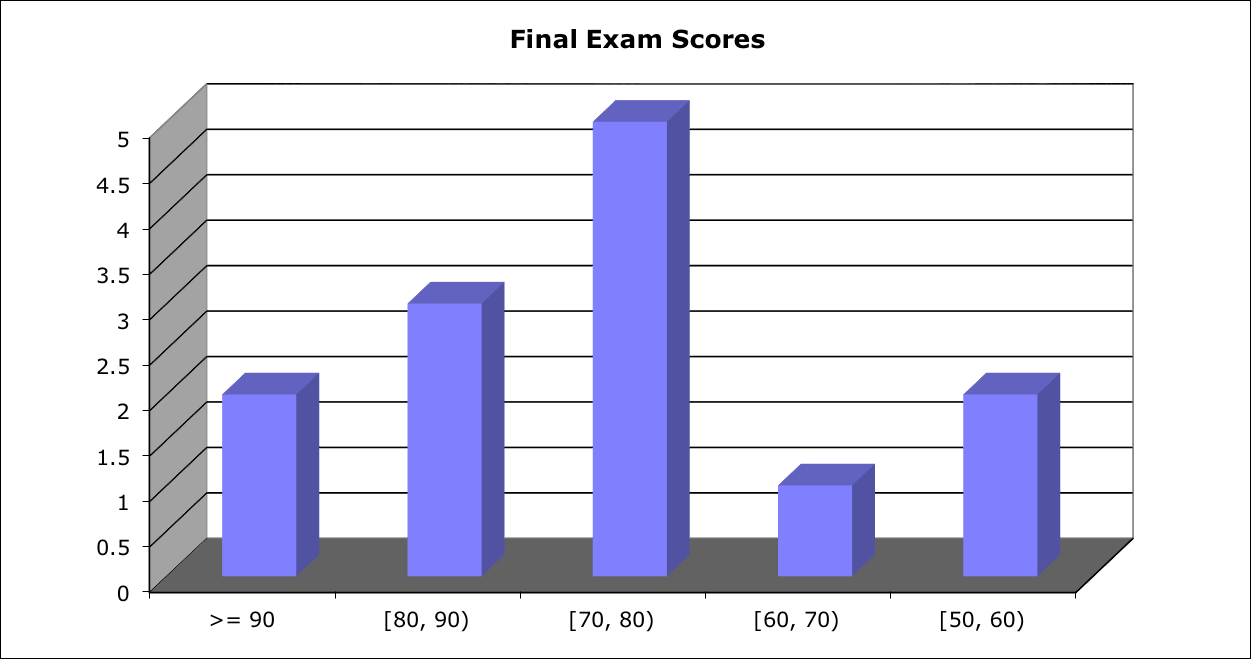

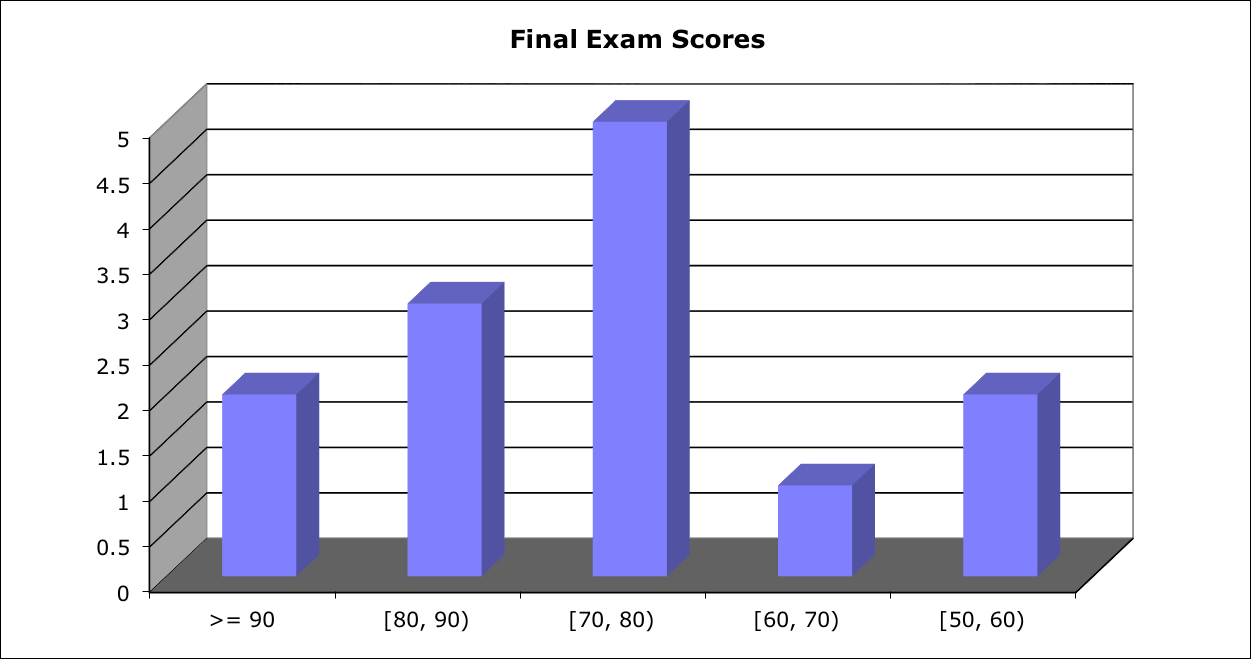

Score Distribution:

Median: 79; Mean: 75.5; Standard Deviation: 13.5

These shaded blocks are intended to suggest solutions; they are not intended to be complete solutions.

Several questions ask you to speculate about things you are not expected to know based on information you are expected to know. Is that fair?

As your careers unfold, I am pretty sure you will encounter more questions similar to 5 and 6 than to 2 or 3. No one will ask you #1 (unless it is whispered by a puzzled colleague not wanting to admit ignorance publicly), but you will be expected to be unfazed by such alphabet soup.

Problem 1 Definitions - Internet Protocols

Problem 2 Tricky C - Fork()

Problem 3 Caching

Problem 4 Ultra-Tiny File System

Problem 5 Virtual Machines

Problem 6 Distributed File Systems

Rejected 7 Boot

Rejected 8 Virtual Machines

Problem 1 Definitions - Internet Protocols

For each acronym,

- Give the phrase for which the acronym stands (in the context of Internet protocols)

- What is the purpose of this protocol?

- ARP

- NDP

- IP

- ICMP

- TCP

- UDP

- DCCP

- SCTP

- RSVP

- FTP

- SSH

- TLS

- HTTP

- DNS

- LDAP

This list comes directly from the Internet protocol group assignment handout.

- ARP - Address Resolution Protocol. See RFC 826; Address_Resolution_Protocol (Wikipedia)

- NDP - Neighbor Discovery Protocol. See RFC 4861; Neighbor_Discovery_Protocol (Wikipedia)

- IP - Internet Protocol. See RFC 791 (v4); RFC 2460 (v6); Internet_Protocol (Wikipedia)

- ICMP - Internet Control Message Protocol. See RFC 4884; Internet_Control_Message_Protocol (Wikipedia); Internet_Control_Message_Protocol_version_6 (Wikipedia)

- TCP - Transmission Control Protocol. See RFC 793; Transmission_Control_Protocol (Wikipedia)

- UDP - User Datagram Protocol. See RFC 768; User_Datagram_Protocol (Wikipedia)

- DCCP - Datagram Congestion Control Protocol. See RFC 4336; RFC 4340; Datagram_Congestion_Control_Protocol (Wikipedia)

- SCTP - Stream Control Transmission Protocol. See RFC 4960; Stream_Control_Transmission_Protocol (Wikipedia)

- RSVP - Resource Reservation Protocol. See RFC 2205; Resource_Reservation_Protocol (Wikipedia)

- FTP - File Transfer Protocol. See RFC 959; File_Transfer_Protocol (Wikipedia)

- SSH - Secure Shell. See RFC 4253; Secure_Shell (Wikipedia)

- TLS - Transport Layer Security. See RFC 6101; RFC 5246; Transport_Layer_Security (Wikipedia)

- HTTP - Hypertext Transfer Protocol. See RFC 2616; Hypertext_Transfer_Protocol (Wikipedia)

- DNS - Domain Name System. See RFC 1034; RFC 1035; Domain_Name_System (Wikipedia)

- LDAP - Lightweight Directory Access Protocol. See RFC 4511; Lightweight_Directory_Access_Protocol (Wikipedia)

Our field is full of TLAs (Three Letter Abbreviations) that are convenient shorthand for insiders, but barriers to understanding by outsiders. We had a bad reputation in many quarters for over-use of jargon, sometimes with the intent to confuse. Don't be like that. Speak English with your stakeholders.

Problem 2 Tricky C - Fork()

Consider execution of the program shown below. Comments and print statements do not necessarily tell the truth.

- How many times is the if statement at line 29 executed? Why?

- How many times is the if statement at line 35 executed? Why?

- How many times is the if statement at line 38 executed? Why?

- How many times is the if statement at line 46 executed? Why?

- What output is printed to the screen? You may assume all operating systems calls are successful so no fprintf(stderr, ...) statements are executed. Some of the numerical values printed you should know, and some values you cannot know in advance.

- (8 of the 25 points) Explain.

- Still assuming correct and successful operating systems calls, would it be possible for the output from lines 71 - 78 to be different from what you described in part f)? Explain.

```c
  1 /* Derived from: http://www.cs.cf.ac.uk/Dave/C/node25.html */
  2
  3 #include <sys/types.h>
  4 #include <sys/ipc.h>
  5 #include <sys/msg.h>
  6 #include <stdio.h>
  7 #include <stdlib.h>
  8 #include <string.h>
  9 #define MSGFLG (IPC_CREAT | 0666)
 10 #define MSGSZ 128
 11
 12 typedef struct msgbuf { /* Declare the message structure */
 13     long mtype;
 14     char mtext[MSGSZ];
 15 } message_buf;
 16
 17
 18 void consumer(int msqid, int numberOfMessages);
 19 void producer(int msqid, int numberOfMessages);
 20
 21 int main()
 22 {
 23     int msqid;
 24     int numberOfMessages = 5;
 25     key_t key = 1236;
 26     pid_t pid;
 27
 28     printf("In MAIN, get message queue id\n");
 29     if (0 > (msqid = msgget(key, MSGFLG ))) {
 30         fprintf(stderr, "msgget failed\n");
 31         exit(-1);
 32     }
 33
 34     printf("msgget succeeded: msqid = %d. Fork child process ONE.\n", msqid);
 35     if ( 0 > (pid = fork()) ) { /* fork a child process */
 36         fprintf(stderr, "fork() failed.\n");
 37         exit(-1);
 38     } else if (0 == pid) {
 39         printf("In child process ONE\n");
 40         consumer(msqid, numberOfMessages);
 41         printf("Child ONE is done\n");
 42         exit(0);
 43     }
 44
 45     printf("In PARENT after ONE. pid = %d. Fork child process TWO.\n", pid);
 46     if ( 0 > (pid = fork()) ) { /* fork a child process */
 47         fprintf(stderr, "fork() failed.\n");
 48         exit(-1);
 49     } else if (0 == pid) {
 50         printf("In child process TWO\n");
 51         producer(msqid, numberOfMessages);
 52         printf("Child TWO is done\n");
 53         exit(0);
 54     }
 55
 56     printf("In PARENT after TWO. pid = %d\n", pid);
 57
 58     wait(NULL); /* parent waits for children to complete */
 59     printf("PARENT is done\n");
 60     exit(0);
 61 }
 62
 63
 64 void consumer(int msqid, int numberOfMessages) {
 65     message_buf receive_buf;
 66     int i = 0;
 67     int ans = 100;
 68
 69     printf("Child ONE, consumer ...\n");
 70
 71     while (0 < ans) {
 72         if (0 > msgrcv(msqid, &receive_buf, MSGSZ, 1, 0)) {
 73             fprintf(stderr, "msgrcv failed\n");
 74             exit(-1);
 75         }
 76         ans = receive_buf.mtext[0] - '0';
 77         printf(" Received message %2d: %s --> %d\n", i, receive_buf.mtext, ans);
 78     }
 79 }
 80
 81 void producer(int msqid, int numberOfMessages) {
 82     message_buf send_buf;
 83     int buf_length;
 84     int i;
 85     char ans[MSGSZ];
 86
 87     printf("Child TWO, producer ...\n");
 88
 89     send_buf.mtype = 1;
 90     for (i = 3; i <= numberOfMessages; i++) {
 91         send_buf.mtext[0] = '0' + (i % numberOfMessages);
 92         send_buf.mtext[1] = 0;
 93         buf_length = strlen(send_buf.mtext) + 1 ;
 94
 95         printf ("Send a message: %s\n", send_buf.mtext);
 96         if (0 > msgsnd(msqid, &send_buf, buf_length, IPC_NOWAIT)) {
 97             printf ("msgsnd failed. %d, %ld, %s, %d\n", msqid, send_buf.mtype, send_buf.mtext, buf_length);
 98             exit(-1);
 99         }
100     }
101 }
```
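The sketch below is separate from the exam program. It illustrates two behaviors this question depends on: fork() returns twice, and wait(NULL) returns as soon as any one child terminates. That second behavior makes the comment at line 58 one of the comments that does not tell the truth.

The helper name `fork_two_and_reap_all` is hypothetical. It forks two children the way lines 35 and 46 do, then loops on wait() until no children remain.

```c
#include <stdio.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: fork two children, as lines 35 and 46 do, and
 * return how many children the parent reaps. */
int fork_two_and_reap_all(void)
{
    for (int k = 0; k < 2; k++) {
        pid_t pid = fork();          /* on success, returns in parent AND child */
        if (pid < 0) {
            perror("fork");
            exit(EXIT_FAILURE);
        } else if (pid == 0) {       /* child: fork() returned 0 */
            _exit(0);                /* child leaves and never reaches the next fork() */
        }
        /* parent: fork() returned the child's pid, so it loops to fork again */
    }

    /* A single wait(NULL) reaps only ONE child, whichever finishes first.
     * To wait for both, keep calling wait() until it reports no children. */
    int reaped = 0;
    while (wait(NULL) > 0) {
        reaped++;
    }
    return reaped;
}
```

Called from main(), this should return 2. If you replace the loop with a single wait(NULL), the function returns after the first child exits and leaves the second child unreaped.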

- Once

- Twice. fork() returns twice, once in the parent, and once in the child. Each parent and child evaluates the Boolean expression once.

- Twice, once in the parent, and once in the child.

- Twice. The first child created at line 35 exits at line 42. Hence, line 46 is executed in the parent, but not in the first child. Then, fork() returns twice, like the fork() at line 35, having created a second child.

- I get:

```
In MAIN, get message queue id
msgget succeeded: msqid = 65538. Fork child process ONE.
In PARENT after ONE. pid = 38280. Fork child process TWO.
In PARENT after TWO. pid = 38281
In child process ONE
Child ONE, consumer ...
In child process TWO
Child TWO, producer ...
Send a message: 3
Send a message: 4
Send a message: 0
Child TWO is done
 Received message  0: 3 --> 3
 Received message  0: 4 --> 4
 Received message  0: 0 --> 0
Child ONE is done
PARENT is done
```

Depending on preemptive scheduling, printing from the first and second child processes could be different.

- Lines 35 and 46 create two child processes, in which the functions consumer() and producer(), respectively, are called. We cannot know which of those functions will execute first.

Suppose child ONE happens to call consumer() first, as in my sample run. consumer() blocks at line 72, waiting to receive a message. Eventually, child TWO runs, and producer() sends messages at line 96. producer() sends messages for i = 3, 4, and 5 with string values "3", "4", and "0". Eventually, child ONE runs, and consumer() receives the messages and prints them.

- It could be that child ONE and child TWO alternate execution, in which case Send and Received printf lines would alternate. We can make no assumptions about the relative progress of two processes.

Since the mailbox is owned by the operating system, it is entirely possible for other processes to be sending to and receiving from the same mailbox (any process that calls msgget() with key 1236 gets the same queue). In that case, child ONE could receive messages child TWO did not send, and child TWO could send messages child ONE did not receive.

In an earlier draft of this question, I was going to give you this code, give you output showing child ONE receiving several messages before the three sent by producer(), and ask you to explain how that could be.

More tricky? In general, the number of messages sent by the producer() and the number of messages received by the consumer() do not have to be the same. A slight change to line 91?
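You can check the arithmetic at line 91 and the stopping test at lines 71 and 76 without any message queue. The sketch below is a plain-C simulation, not System V IPC:

- `producer_text` and `consumer_receives` are hypothetical helpers.
- Where the real consumer would block in msgrcv(), the simulation returns -1 instead.

The simulation confirms that the producer sends "3", "4", "0". It also shows what would happen if some different line 91 put "0" first. That case is one hypothetical alternative, not necessarily the change the question has in mind: the consumer stops after one message, and the rest stay in the queue.

```c
#define MSGSZ 128   /* same size as line 10 */

/* Hypothetical helper: the text that lines 91-92 store for loop index i. */
void producer_text(int i, int numberOfMessages, char mtext[MSGSZ])
{
    mtext[0] = (char)('0' + (i % numberOfMessages));
    mtext[1] = '\0';
}

/* Hypothetical helper: mimic the loop at lines 71-78 over the messages in
 * `queue`, in order. Returns how many messages are consumed before ans
 * drops to 0 or below, or -1 if the queue runs dry first (the real
 * consumer would block in msgrcv() at that point). */
int consumer_receives(const char *queue[], int count)
{
    int ans = 100;
    int received = 0;
    while (0 < ans) {
        if (received == count) {
            return -1;
        }
        ans = queue[received][0] - '0';
        received++;
    }
    return received;
}
```

With the code as written, i = 3, 4, 5 produces "3", "4", "0", and the consumer stops after exactly three messages. A queue with no "0" in it would leave the real consumer blocked forever.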

Problem 3 Caching

- What is "caching?"

- List four different contexts in which modern computer operating systems implement caching.

For each, tell what is cached and where it is cached.

- What are the advantages of caching?

- What are the disadvantages of caching?

- In what ways is caching performed in a Distributed File System similar to segmentation or to paging for memory management? In what ways is it different? Explain in detail.

Hint: You do not know the answer. This question asks you to make a case from what you do know. I expect a detailed, technical answer, although it may be speculative.

Caching arguably is the single greatest performance enhancing tool an operating system can use on a single processor. How does the concept apply in several different OS settings?

- Caching replicates information from a relatively distant, relatively large, relatively slow, relatively inexpensive data store in a relatively close, relatively small, relatively fast, relatively expensive data store.

Caching is not the same as buffering.

- Virtual memory caches disk blocks in main memory

- Translation Lookaside Buffer caches page table entries in a hardware TLB

- Hardware level 1, level 2, and sometimes level 3 caches cache information from lower levels in the memory hierarchy, level 2, level 3, and main memory, respectively.

- Distributed File System caches files from a remote server in a local machine's memory or disk

- Web browser caches files from a remote server on a local machine's disk

- Domain Name System server caches (name, IP address) pairs from a remote server in a local table

-4 if all your examples are memory caching of disk, which I would consider one context.

- Speed, handle large data, reduced bus/network traffic, transparent to user (generally). Advantages means more than one.

- Consistency, coherency, cost, complexity of hardware and OS, small size, slower on a miss than direct access. Disadvantages means more than one.

- Some thoughts:

The point was to take what you should understand about caching in the context of memory management and apply it to the context of DFS. Can you apply what you know in a strange context?

If locally cached files are kept on a local disk, the cache size is the free space on the local disk, so cache size probably is not a constraint. Hence, strategies for selecting a victim to be removed from the cache probably are not important.

If locally cached files are kept in local memory, cache size may be important. Then, strategies for selecting a victim to be removed from the cache including Optimal, First In First Out, Least Recently Used, and Approximate LRU are all candidates.
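The victim-selection idea can be made concrete. Below is a minimal sketch of Least Recently Used replacement for a DFS client that caches remote blocks in local memory. It makes these assumptions:

- There is a tiny fixed number of slots (`CACHE_SLOTS = 4`, chosen only for illustration).
- A logical clock stands in for real timestamps.
- The struct and function names are hypothetical.
- The actual fetch from the server is left as a comment.

```c
#define CACHE_SLOTS 4   /* assumption: a deliberately tiny cache */

struct cache_slot {
    int block;                 /* remote block number cached here, -1 if empty */
    unsigned long last_used;   /* logical time of most recent access, 0 if empty */
};

struct block_cache {
    struct cache_slot slot[CACHE_SLOTS];
    unsigned long clock;       /* advances on every access */
    int hits, misses;
};

void cache_init(struct block_cache *c)
{
    for (int s = 0; s < CACHE_SLOTS; s++) {
        c->slot[s].block = -1;
        c->slot[s].last_used = 0;
    }
    c->clock = 0;
    c->hits = c->misses = 0;
}

/* Access a remote block through the cache. Returns 1 on a hit, 0 on a miss.
 * On a miss, the least recently used slot is the victim; an empty slot
 * (last_used 0) is always chosen first if one exists. */
int cache_access(struct block_cache *c, int block)
{
    int victim = 0;
    c->clock++;
    for (int s = 0; s < CACHE_SLOTS; s++) {
        if (c->slot[s].block == block) {
            c->slot[s].last_used = c->clock;
            c->hits++;
            return 1;
        }
        if (c->slot[s].last_used < c->slot[victim].last_used) {
            victim = s;
        }
    }
    c->misses++;
    /* ...fetch `block` from the remote server into slot[victim] here... */
    c->slot[victim].block = block;
    c->slot[victim].last_used = c->clock;
    return 0;
}
```

With the reference string 1, 2, 3, 4, 1, 5, block 2 is evicted when 5 arrives, because block 1 was touched more recently. If last_used were set only when a block is loaded, and not on every hit, the same code would implement FIFO instead.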

The biggest difference between segmentation and paging is that segments are different sizes (leading to external fragmentation), while each page is the same size (leading to internal fragmentation). Files are different sizes, so if we cache entire files, DFS is more like segmentation. Instead, if we cache disk blocks from remote files, each disk block (probably) is the same size, and DFS is more like paging.

If DFS caches entire files on a local disk, the external fragmentation is handled by the local file system.

DFS must handle writing to a cached file, just as both segmentation and paging must handle writing to a segment or a page in main memory by Write Through, Write Back, or some variant.
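The write-through versus write-back choice can be sketched the same way for a cached file. This is a toy model under stated assumptions:

- The "server" is just a second buffer in the same process.
- A message to the server is counted rather than sent.
- All names are hypothetical.

Write-through pushes every write to the server immediately. Write-back marks the local copy dirty and sends it once, at close.

```c
#include <stdio.h>

#define FILE_LEN 64

enum write_policy { WRITE_THROUGH, WRITE_BACK };

struct cached_file {
    enum write_policy policy;
    char local[FILE_LEN];    /* client's cached copy */
    char server[FILE_LEN];   /* stand-in for the copy on the server */
    int dirty;               /* is the local copy newer than the server copy? */
    int server_writes;       /* messages that would cross the network */
};

static void send_to_server(struct cached_file *f)
{
    snprintf(f->server, FILE_LEN, "%s", f->local);
    f->server_writes++;
    f->dirty = 0;
}

void cf_write(struct cached_file *f, const char *text)
{
    snprintf(f->local, FILE_LEN, "%s", text);
    if (f->policy == WRITE_THROUGH) {
        send_to_server(f);        /* every write goes straight to the server */
    } else {
        f->dirty = 1;             /* defer: remember the server copy is stale */
    }
}

void cf_close(struct cached_file *f)
{
    if (f->dirty) {
        send_to_server(f);        /* write-back: one flush at close */
    }
}
```

After three edits, the write-through copy has sent three messages and the server is current. The write-back copy has sent none, and the server is stale until close. Problem 6 asks about that trade of staleness for traffic for Google Docs and Dropbox.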

DFS must maintain a table of files cached locally, which may be different from the table of open files, just as segmentation or paging systems must maintain segment tables or page tables. However, the number of open files probably is much smaller than the number of segments or pages, so the cached files table is small. File access is much less frequent than memory access, so the speed of access to the cached files table is not as critical as the speed of access to the segment or page table.

Problem 4 Ultra-Tiny File System

Describe how you would extend the XINU Ultra-Tiny File System of Project 8 to provide arbitrary length files.

Hint: I expect 1 - 2 pages with a mixture of pictures/diagrams, English text, and pseudo-code at a level of detail roughly similar to the explanations Dennis gives in class.

What is the current limitation that restricts the size of a file?

Size of a block (and number of blocks)

In the spirit of our OS, the block size is a property of the (virtual) hard drive, and the OS cannot change it by fiat.

Solution: Multiple blocks in a file. Any of the allocation methods in 11.3 would work, including contiguous allocation, linked allocation, file allocation table, in increasing order of complexity.

If you said, "We'll just declare how long the file is," assuming that block size is fixed, you are proposing a contiguous allocation. Then, you have to describe how the OS knows a) where the file begins and b) where the file ends. What are the disadvantages of contiguous allocation? What limits remain?
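For the contiguous case, parts a) and b) could be answered with two fields in the directory entry. Below is a minimal sketch. It assumes a hypothetical entry layout and a 512-byte block; the real UTFS block size comes from the virtual disk.

- The start block says where the file begins.
- The byte length says where the file ends.
- Any offset maps to a block with one division.

The sketch does not address growth: extending the file needs free blocks immediately after its last block.

```c
#define BLOCK_SIZE 512   /* assumption; fixed by the (virtual) disk */

struct contig_entry {    /* hypothetical directory entry */
    char name[16];
    int  start_block;    /* a) where the file begins */
    int  length_bytes;   /* b) where the file ends   */
};

/* Number of consecutive blocks the file occupies (rounds up). */
int contig_blocks_used(const struct contig_entry *e)
{
    return (e->length_bytes + BLOCK_SIZE - 1) / BLOCK_SIZE;
}

/* Disk block holding byte `offset` of the file, or -1 if past end of file. */
int contig_block_for_offset(const struct contig_entry *e, int offset)
{
    if (offset < 0 || offset >= e->length_bytes) {
        return -1;
    }
    return e->start_block + offset / BLOCK_SIZE;
}
```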

A linked allocation strategy was the most commonly suggested. That works, but storing the links in the block reduces the data block size. In a more advanced operating system, that would interfere with other OS capabilities, e.g., buffering files by block or paging. Also, if one block is lost, all following blocks are lost. It is fine for you to suggest linked allocation as a solution, but you should acknowledge its disadvantages.
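A file allocation table is one way to keep linked allocation while leaving every data block full size: the links move out of the blocks into a separate table. Below is a minimal sketch with a hypothetical 16-block disk and hypothetical names; this is not XINU's or UTFS's actual interface.

- `fat[b]` holds the block that follows `b` in its file.
- `FAT_EOF` marks the last block.
- `FAT_FREE` marks an unused block.

The table itself must also live on the disk, so losing it is at least as serious as losing a link block.

```c
#define NBLOCKS  16    /* assumption: tiny disk */
#define FAT_EOF  (-1)  /* last block of a file */
#define FAT_FREE (-2)  /* block not in use */

/* Block number of the n-th block (n = 0, 1, ...) of the file starting at
 * `first`, or -1 if the file has fewer than n + 1 blocks. */
int fat_nth_block(const int fat[NBLOCKS], int first, int n)
{
    int b = first;
    for (int k = 0; k < n && b >= 0; k++) {
        b = fat[b];
    }
    return b < 0 ? -1 : b;
}

/* Grow the file starting at `first` by one block: take the first free block
 * and link it after the current last block. Returns the new block number,
 * or -1 if the disk is full. */
int fat_append_block(int fat[NBLOCKS], int first)
{
    int fresh = -1;
    for (int b = 0; b < NBLOCKS; b++) {
        if (fat[b] == FAT_FREE) {
            fresh = b;
            break;
        }
    }
    if (fresh < 0) {
        return -1;
    }
    int last = first;
    while (fat[last] != FAT_EOF) {
        last = fat[last];
    }
    fat[last] = fresh;
    fat[fresh] = FAT_EOF;
    return fresh;
}
```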

Of course, that does not do much good with such a limited number of blocks, but supporting a larger number of blocks is a different question.

Having the OS declare larger blocks would work in XINU (although with some BAD side effects), but it destroys the concept of hard disk blocks we are trying to understand and simulate.

Problem 5 Virtual Machines

- Describe an application of virtual machines with which you have some familiarity. You will answer the remaining parts of this question in the context of your example. If you have no example of your own, you might use Amazon, Google, or Microsoft cloud services or Dr. Corliss running Windows under VMware on his Mac.

- In the context of your example in part a, how would you guess processes are scheduled by the guest operating systems vs. processes scheduled by the host operating system?

- In the context of your example in part a, how would you guess virtual memory is handled by the guest operating systems vs. virtual memory handled by the host operating system?

- In the context of your example in part a, how would you guess privileged hardware instructions are handled by the guest operating systems vs. privileged hardware instructions handled by the host operating system?

- In the context of your example in part a, how would you guess files are handled by the guest operating systems vs. files handled by the host operating system?

Hint: Much of your answer is speculation, based on what you know. Important evidence for my VMware example is that Windows applications run at about the same speed as they run on a native Windows machine with comparable hardware.

The intent of this question is to elicit respect and admiration for modern virtual machine technologies. It would be easy (not really) to run a virtual machine in a software simulator, but it would be excruciatingly SLOW. To achieve the speeds we take for granted, a guest OS and applications MUST have nearly the same hardware access as the native OS and applications, while protections are still enforced. It's really pretty amazing.

"Speculate" is not a synonym for "bull s&#*." I expect your answer to be based on your sound understanding of operating system principles, and I expect that understanding to be apparent in your answers.

The pattern here is

- Consider what you know about how operating systems work

- Consider what you know about what a host OS knows and does

- Consider what you know about what a guest OS knows and does

- Other actors are the hardware and the virtual machine manager

- Eliminate unlikely suspects

- Formulate likely hypotheses about remaining actors

Some thoughts include:

- My example is my Mac running Windows 10 under VMware. For simplicity, let's assume I give VMware only one core. Fundamentally, neither Windows nor its applications (Word, Excel, Firefox, Matlab, etc.) has any indication they are running in a virtual machine. They just run. It is the job of the VM manager (VMware, in this case) to fulfill that fantasy. This question asks you to speculate on how that might be done.

- How do host and guest schedulers cooperate/compete?

From the perspective of the host Mac OS X operating system, VMware is one of about 100 processes running. Performance of Windows applications is within a few percent of what I observe on native Windows machines with comparable hardware, so the host OS must give VMware either higher priority or larger time quanta when it is active. I suspect Windows must be scheduling its own processes (because OS X seems to know almost nothing about what happens inside VMware). The good performance of Windows processes suggests it must be doing process scheduling using the hardware capabilities. There cannot be very much software simulation in the way.

Hypothesis: Windows processes are scheduled by the Windows process scheduler (almost) directly using the hardware for context switches, etc.

- Code profiling shows an average of about 1.4 memory accesses per instruction, and modern processors average 4-6 instructions per clock cycle per core, so there are roughly 6 to 8 accesses to virtual memory per clock cycle per core (1.4 × 4 = 5.6; 1.4 × 6 = 8.4). If there were any software simulation in a memory access by a Windows application, performance would suffer big time. I suppose there could be a little software simulation in handling a page fault, but not very much. A possible variant is that the processor has special hardware to support virtual machines' virtual memory, but that makes my head hurt.

Hypothesis: Windows uses processor hardware directly for its virtual memory.

- Neither Windows nor its applications know they are running in a virtual machine, so Windows must execute privileged hardware instructions as usual. The host OS cannot allow VMware to run completely in a privileged mode, so there has to be some magic here.

Hypothesis: Processor supports (at least) three levels of privilege. VMware runs semi-privileged so privileged and user modes are honored.

Alternative: The virtual machine manager traps guest privileged instructions and executes them by software simulation using only user instructions.

- To the host OS, the Windows virtual disk is one large file, so the host OS does no file-level operations on the individual files inside it. Windows applications think they read and write files as always, which we know they do by calling Windows functions. Hence, Windows thinks it reads and writes files as always. The only component left to bridge the fantasy in which Windows is running with the reality of my disk has to be the VMware virtual machine manager.

Hypothesis: VMware intercepts the instructions from Windows to the disk it knows and translates those calls into low level calls (through low level OS X function calls) to the physical disk driver.
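The hypothesis has the VMM translating the guest's disk requests. Below is one hypothetical way to do that translation, in which the guest's disk really is the one large host file. It uses ordinary POSIX pread() and pwrite(): guest sector s lives at byte offset s × 512 of that file.

The 512-byte sector and the function names are assumptions. The hypothesis itself suggests VMware may go through lower-level calls than these.

```c
#define _XOPEN_SOURCE 700   /* expose pread()/pwrite() */
#include <stdio.h>
#include <sys/types.h>
#include <unistd.h>

#define SECTOR_SIZE 512     /* assumption: guest sees 512-byte sectors */

/* Byte offset of a guest sector inside the single host image file. */
static off_t sector_offset(long sector)
{
    return (off_t)sector * SECTOR_SIZE;
}

/* Guest writes one sector of its "disk"; the VMM writes the host file. */
int vdisk_write_sector(int image_fd, long sector, const unsigned char buf[SECTOR_SIZE])
{
    if (pwrite(image_fd, buf, SECTOR_SIZE, sector_offset(sector)) != SECTOR_SIZE) {
        perror("pwrite");
        return -1;
    }
    return 0;
}

/* Guest reads one sector; the VMM reads the same offset of the host file. */
int vdisk_read_sector(int image_fd, long sector, unsigned char buf[SECTOR_SIZE])
{
    if (pread(image_fd, buf, SECTOR_SIZE, sector_offset(sector)) != SECTOR_SIZE) {
        perror("pread");
        return -1;
    }
    return 0;
}
```

To the host, this is ordinary file I/O on one large file; only the VMM knows the bytes at offset 1536 are "sector 3" of a Windows disk.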

Critical insight: Reasonable performance of guest applications implies that there can be very little software inserted between the guest OS and the hardware, with the possible exception of file handling, which is slow anyway.
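The "Alternative" hypothesis for privileged instructions is usually called trap-and-emulate, and it fits this insight. Ordinary guest instructions run with no VMM software in the way; only privileged ones cost a trip into the VMM. The toy simulation below illustrates that split.

The four-opcode instruction set, the struct, and all names are hypothetical. A C loop stands in for hardware that would really execute the unprivileged instructions directly.

```c
/* Hypothetical toy instruction set. */
enum opcode { OP_ADD, OP_CLI, OP_STI, OP_HALT };

struct vcpu {
    int acc;                      /* guest-visible register */
    int virt_interrupts_enabled;  /* guest's VIEW of the interrupt flag */
    int traps;                    /* times control passed to the VMM */
};

/* CLI/STI (disable/enable interrupts) are privileged in this toy ISA. */
static int is_privileged(enum opcode op)
{
    return op == OP_CLI || op == OP_STI;
}

/* VMM handler: emulate the privileged instruction on the virtual CPU
 * state only; the guest never touches the real interrupt flag. */
static void vmm_emulate(struct vcpu *v, enum opcode op)
{
    v->traps++;
    if (op == OP_CLI) {
        v->virt_interrupts_enabled = 0;
    } else if (op == OP_STI) {
        v->virt_interrupts_enabled = 1;
    }
}

/* Run a guest program until OP_HALT or the end of the array. */
void run_guest(struct vcpu *v, const enum opcode prog[], int n)
{
    for (int pc = 0; pc < n && prog[pc] != OP_HALT; pc++) {
        if (is_privileged(prog[pc])) {
            vmm_emulate(v, prog[pc]);   /* stands in for a hardware trap */
        } else if (prog[pc] == OP_ADD) {
            v->acc++;                   /* stands in for direct execution */
        }
    }
}
```

Running ADD, CLI, ADD, STI, ADD, HALT leaves acc at 3 with 2 traps. Three of the five executed instructions never involve the VMM, which is the property the critical insight relies on.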

Do not confuse the virtual machine manager with the guest operating system and its applications. We might say Windows is running on a virtual machine, which is managed by the virtual machine manager. The "virtual machine" in question is supposed to act as if it is normal hardware.

The Java Virtual Machine IS a virtual machine in the sense of our Chapter 16, but it behaves more like a host application than the virtual machine manager I describe here. JVM is an appropriate example, but the answers are a bit different. For example, one could argue Java Byte Code has no privileged instructions, and Java programs share the host file system, rather than have a virtual disk.

Another variant set of answers: If the virtual machine manager is allocated 1-2 cores, and the host OS retains its own cores, considerably less cooperation between host and guest is required. Excellent observation, someone. Files remain a point of cooperation.

Bonus: When an interrupt occurs, who is interrupted? Whose interrupt handler runs?

Problem 6 Distributed File Systems

I hope you have used Google Docs to share files. If not, the key features are that you store a file on Google's server and access it through a web browser. If you share the file with teammates, you can each open the file in your own browser. Changes made by one person appear almost instantly in everyone else's browser. In class, we saw that as several students updated the schedule for Design Day demonstrations of their Project 10.

- Would you consider Google Docs an example of a Distributed File System? Why, or why not?

- Does Google Docs provide location transparency or location independence? Why, or why not?

- If five teammates are editing the file at the same time, is there a cache-consistency problem? Why, or why not?

- Do you suppose Google employs a write-through policy or a write-back policy? [That asks for a choice, not a yes/no.] Why?

- Do you suppose Google employs a client-initiated approach or a server-initiated approach? [That asks for a choice, not a yes/no.] Why?

- Is there a race condition opportunity? Explain.

If you prefer, you may answer the same questions for Dropbox instead of Google Docs, although I think the answers are clearer and simpler for Google Docs.

Hint: Yes, I am testing you on the very last section for which you are responsible. No, we did not discuss it in class. If this material is unfamiliar, you should be able to make informed guesses based on your understanding of material we covered thoroughly. I suggest you begin your answer to each part with a (guessed, if necessary) definition of the terms. Then answer the question based on your definitions. This is not the last time you will be expected to be an expert on something you know little about. Do not lie about your expertise, but sometimes informed speculation is the highest wisdom in the room.

"Speculate" is not a synonym for "bull s&#*." I expect your answer to be based on your sound understanding of operating system principles, and I expect that understanding to be apparent in your answers.

Be clear whether you are answering for Google Docs or for Dropbox. If you did not say "Dropbox," I assumed you were thinking of Google.

See Section 17.9. Find definitions there.

- Yes. Clients (browsers), servers (Google's), and storage devices (Google's) are dispersed among machines of a widely distributed system.

- Transparency? No. To find the file, you must navigate to Google Docs. Our book says, "hint of the file's physical location." OK. I accept that "Google.com" really is only the slightest hint at a physical location.

Independence? Yes. You neither know nor care if Google moves your file to a different storage device.

Several answers suggested you did not know the correct definitions of these two terms.

- BIG TIME. In effect, you have five copies, each on a different machine, and each could be different. You could each change the same character at the same time.

- This part addresses how changes made in one client get sent to Google's servers. It MUST be write-through, given how fast your changes appear on your teammates' screens.

- This part addresses how changes of which Google's servers become aware are reflected on the screens of multiple clients. For Google Docs to work as we see, surely the client sends changes back to Google's servers as fast as it can. I might call that "client-initiated," but our book would not; that is write-through. Once Google's server(s) learn of the change, they know which browsers have copies, and the server sends updates to those clients. That is server-initiated. The process could be implemented using a client-initiated approach (the client constantly asks the server, "Any changes for me?"), but to provide the responsiveness we see, a client-initiated approach would generate huge network traffic.

Yes, opening the file at the beginning of each session should be considered client-initiated.

- Oh, yes! Google mitigates the danger by very frequent, very fast updates, but if two people change the same character at exactly the same time, only one change is reflected.
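Below is a minimal sketch of the write-through plus server-initiated pattern described in the Google Docs answers, and of the race in the last answer. It is a single-process toy under stated assumptions:

- The server and clients are structs in one program.
- A "push" is a memcpy into a client's copy.
- Edits are applied in the order they reach the server, so when two clients change the same character, the later arrival wins.

None of this is Google's actual design, which we do not know, and all names are hypothetical.

```c
#include <string.h>

#define MAX_CLIENTS 8
#define DOC_LEN     32

struct client {
    int  open;              /* does this client have the document open? */
    char copy[DOC_LEN];     /* what this client's browser displays */
};

struct doc_server {
    char doc[DOC_LEN];      /* authoritative copy */
    struct client clients[MAX_CLIENTS];
    int  pushes;            /* server-initiated update messages to other clients */
};

/* Client `from` writes one character through to the server; the server then
 * pushes the new document to every client that has it open. */
void server_apply_edit(struct doc_server *s, int from, int pos, char ch)
{
    if (pos < 0 || pos >= DOC_LEN - 1) {
        return;                               /* keep the terminator intact */
    }
    s->doc[pos] = ch;                         /* a later arrival overwrites an earlier one */
    for (int c = 0; c < MAX_CLIENTS; c++) {
        if (!s->clients[c].open) {
            continue;
        }
        memcpy(s->clients[c].copy, s->doc, DOC_LEN);
        if (c != from) {
            s->pushes++;    /* count only messages to other clients; the editor's
                               copy is refreshed here for simplicity */
        }
    }
}
```

If client 0 changes "hello" to "jello" and client 1 then changes the same character to 'y', every open copy ends up "yello". Client 0's edit is simply lost, which is the race the answer describes.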

For Dropbox, updates are not displayed instantly. If you change a shared file your teammate has open, your teammate does not see your changes.

- Yes.

- Transparency? Yes, sort of. You navigate to a file on your local machine. No, sort of; you need to know it is in Dropbox (unless you have a shortcut somewhere else)

Independence? Yes. You neither know nor care if Dropbox moves your file to a different storage device.

- BIG TIME. You have five copies, each on a different machine.

- Write-back. Dropbox sends the updated file after you save it, the next time you are connected to the Internet.

- Client-initiated. Your machine asks Dropbox every 5-10 minutes, "Any changes for me?"

- BIG TIME.

Rejected 7 Boot -- NOT used

What happens when you turn your computer on, up to the point it is ready for you to begin using it? Give a step-by-step sequence. Be as specific as you can.

I asked this question because descriptions are scattered throughout the text, and I want to see if you can integrate them.

In brief:

- Must start executing code from nonvolatile Read Only Memory [or -4]

- which reads a loader from fixed location on disk or network

- which loads the full OS from disk or network

- initializes system tables

- starts various OS processes

- including command processor and/or GUI event handler [or -2]

This level of detail earns C. More detail is expected for scores > 10.

It is critical to tell where to find each loader and the OS itself.

Since the problem specifically mentioned "ready for you to begin using it," you lost 2 points if you did not explicitly mention something about receiving user input.

If you describe what you see on the screen (external manifestations) vs. the inner workings, you earn 8 of 20 points.

Rejected 8 Virtual Machines -- NOT used

"With a virtual machine, guest operating systems and applications run in an environment that appears to them to be native hardware and that behaves to them as the native hardware would but that also protects, manages, and limits them." -- Text, p. 711

Explain what that means, give an example, and suggest how it might be implemented for each:

- "Run in an environment that appears to them to be native hardware"

- "Behaves to them as the native hardware would [behave]"

- "Protects them"

- "Manages them"

- "Limits them"
