| |

Your first midterm examination will appear here. It covers Chapters 1 - 4.

It will be a 50 minute, closed book exam.

Expectations:

- See course goals and objectives

- See Objectives at the beginning of each chapter

- See Summary at the end of each chapter

- Read, understand, explain, and desk-check C programs

-

See previous exams:

Section and page number references in old exams refer to the edition of the text in use when that exam was given. You may have to do a little looking to map to the edition currently in use. Also, which chapters were covered on each exam varies a little from year to year.

Some questions may be similar to homework questions from the book. You will be given a choice of questions. I try to ask questions I think you might hear in a job interview. "I see you took a class on Operating Systems. What can you tell me about ...?"

Preparation hints:

Read the assigned chapters in the text. Chapter summaries are especially helpful. Chapters 1 and 2 include excellent summaries of each of the later chapters.

Read and consider Practice Exercises and Exercises at the end of each chapter.

Review authors' slides and study guides from the textbook web site.

Review previous exams listed above.

See also Dr. Barnard's notes. He was using a different edition of our text, so chapter and section numbers do not match, but his chapter summaries are excellent.

Read and follow the directions:

- Write on four of the five questions. If you write more than four questions, only the first four will be graded. You do not get extra credit for doing extra problems.

- In the event this exam is interrupted (e.g., fire alarm or bomb threat), students will leave their papers on their desks and evacuate as instructed. The exam will not resume. Papers will be graded based on their current state.

- Read the entire exam. If there is anything you do not understand about a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of paper [or -5].

- Be sure your name is on each sheet of paper you hand in [or -5]. That is because I often separate your papers by problem number for grading and re-assemble to record and return.

- Write only on one side of a sheet [or -5]. That is because I scan your exam papers, and the backs do not get scanned.

- No electronic devices of any kind are permitted.

- Be sure I can read what you write.

- If I ask questions with parts

- . . .

- . . .

your answer should show parts

- . . .

- . . .

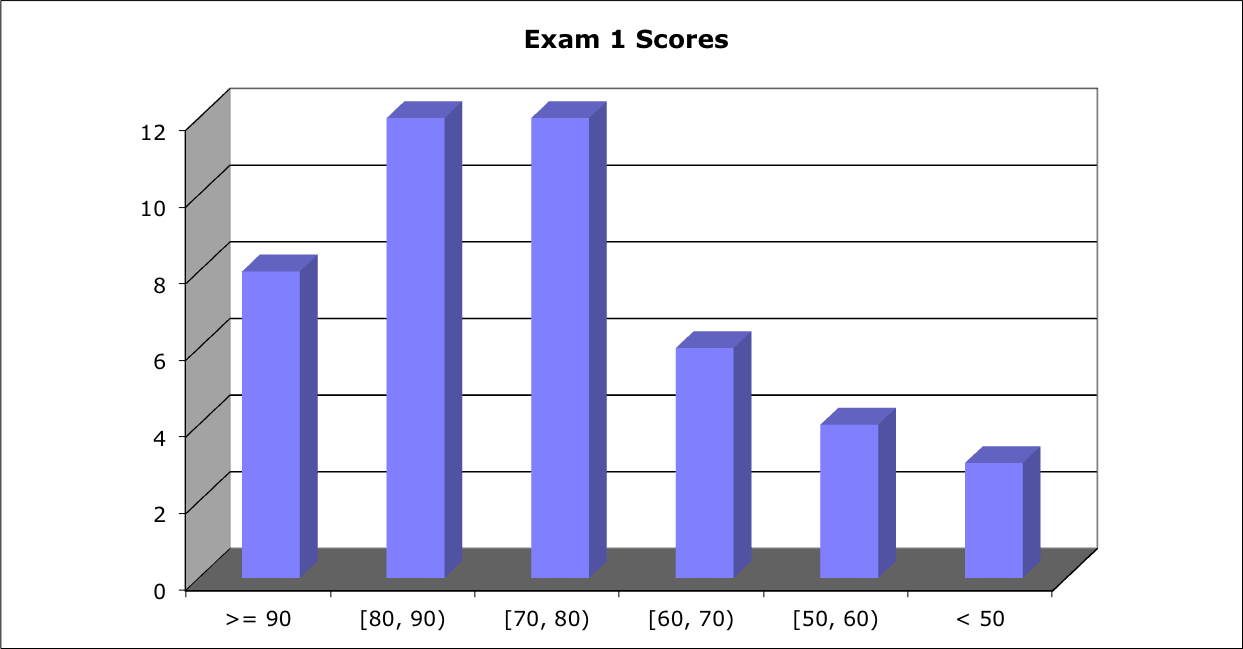

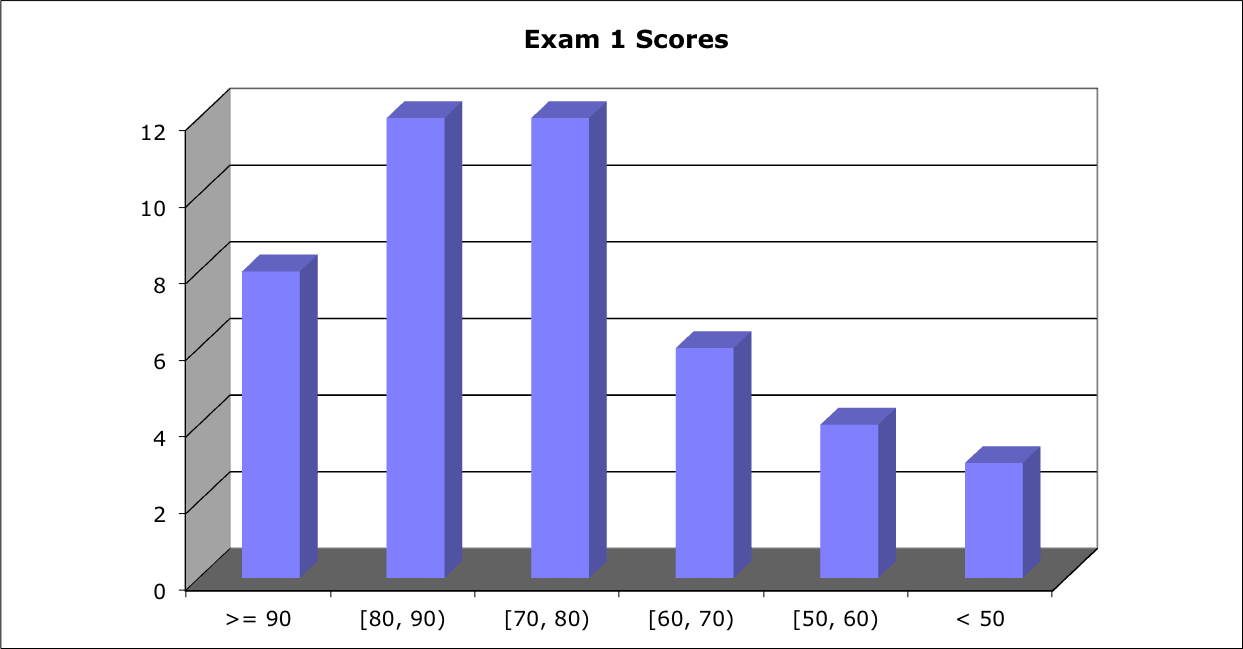

Score Distribution:

Median: 77; Mean: 75.9; Standard Deviation: 14.0

These shaded block are intended to suggest solutions; they are not intended to be complete solutions.

Problem 1 Compare and Contrast

Problem 2 Tricky C - fork()

Problem 3 "Best" Operating System

Problem 4 Process Context Switch

Problem 5 Waiting

Problem 1 Compare and Contrast each pair of terms

(in the context of computer operating systems):

- Protection and Security

- User mode and Kernel mode

- Shared memory and Message passing

- Blocking receive and Non-blocking receive

- Client-Server computing and Peer-to-peer computing

Hint: "Compare and contrast A and B" questions evoke a standard pattern for an answer:

- Define A

- Define B

- Tell how A and B are similar (that's the "compare" part)

- Tell how A and B are different (that's the "contrast" part)

That's what your answers to each part should look like.

Someone should have explained to you several years ago that "X is when ..." or "Y is where ..." are not suitable definitions (possibly excepting the cases in which X is a time or Y is a location). On Exam 2, "X is when ..." or "Y is where ..." earn -5 points. One template for a definition is first to identify a larger class to which X belongs; then descibe how X differs from other instances of the larger class.

- Protection and Security: See Section 1.9.

Protection controls access to computer system resources.

Security defends a system from internal and external attacks.

What threat is most likely to cause you data loss or other serious system compromise? You (operator error). The most helpful forms of protection prefent you from doing something you'll regret.

- User mode and Kernel mode: See Section 1.5.1. User vs. kernel modes are different from User vs. kernel threads

User mode is a state of the operating system in which most instructions of user applications are executed.

Kernel mode is a state of the operating system in which some critical portions (often involving priviledged instructions) of the operating system is executed.

What is it called when a user process makes an operating system call for service? Most operating system code is executed in user mode.

- Shared memory and Message passing: See Section 3.4. Be careful to avoid circular definitions: "Shared memory is memory that is shared" counts zero points.

- Blocking receive and Non-blocking receive: See Section 3.4.2.2. Spell "receive" correctly.

- Client-Server computing and Peer-to-peer computing: See Sections 1.12.2 and 1.12.3. Client-server is a master-slave relationship. Peer-to-peer is a relationship among equals. Was Napster before your time?

Some authorities say peer-to-peer is not client-server. Other authorities say peer-to-peer is client-server, but that the roles of client and of server are fluid.

Vocabulary list. I ask mostly about terms that appear as section, subsection, or subsubsection headings in the text, but any phrase printed in blue in the text is fair game. Here are some of the terms I might ask you to define. This list is not exhaustive:

- Batch system: Typically large, no user present. E.g., overnight accounting runs, daily update of Checkmarq records. Batch mode is an old business data processing mode, still VERY widely used in mainframe business data processing.

- Blocking send vs. Non-blocking send: [Section 3.4.2.2.] Spell "receive" correctly.

- Client-Server computing and Peer-to-peer computing: [Section 1.11.4 & 5.] Client-server is a master-slave relationship. Client requests, server answers. Which is the master?

- Clustered systems: [Section 1.3.3] Two or more individual (computer) systems gathered together to accomplish computational work.

- Interactive system: Typically, a user is present.

- IPC: [Sect. 3.4 & 3.5] Interprocess Communication: shared memory and message passing. It is important to know at least some of the TLAs (Three Letter Abbreviations).

- Kernel: [P. 6] Part of the OS that is running all the time, core OS processes.

- Kernel thread: [Section 4.3] Created by OS processes.

- Kernighan's Law: [P. 87] "Debugging is twice a hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it." You should recognize the name Kernighan as one of the authors of our C textbook

- Message passing systems: [Sect. 3.3.4.2] A mechanism to allow processes to communicate and to synchronize their actions without sharing the same address space. A message-passing facility provides at least two operations: send(message) and receive(message). Be careful to avoid circular definitions: "Message passing systems pass messages" counts zero points.

- Non-blocking receive: [Section 3.4.2.2] Receive a message. If no message is there, return from the receive() function all immediately.

- Operating system: [P. 3] A program that manages the computer hardware, provides a basis for application programs, and acts as an intermediary between the computer user and the computer hardware.

- Operating system calls: [Section 2.3] An interface to operating system services made available to applications software by an operating system.

- POSIX: Portable Operating System Interface. We looked at POSIX examples for shared memory, message passing, and Pthreads.

- Pipes: [Sect. 3.6.3] A pipe acts as a conduit allowing two processes to communicate. A pipe is usually treated as a special type of file, accessed by ordinary read() and write() system calls.

- Privileged instructions: [P. 22] Some hardware instructions that may cause harm. May be executed only in kernel mode. Examples: Instruction to switch to kernel mode, I/O control, timer management, interrupt management

- Process: [P. 105] Program in execution, a unit of work in a modern time-sharing system.

- Process Control Block (PCB): [Section 3.1.3] Representation of a process in the operating system.

- Process scheduling: [Section 3.2] Switch between processes so frequently that users can interact with each process. Select a process from the Ready list for execution in the CPU.

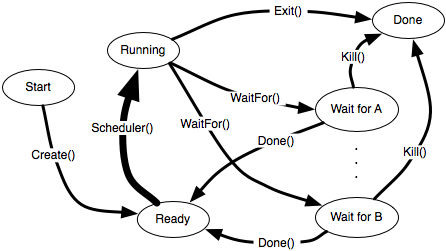

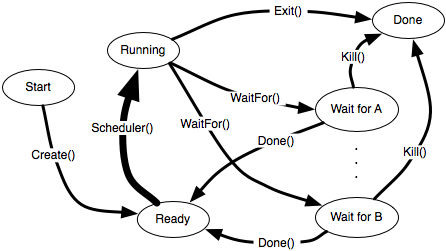

- Process State: [Sect. 3.1.2] Defined by the current activity of the process. Each process may be in one of: New, Running, Waiting, Ready, Terminated.

- Process vs. Thread: [P. 105-106, 163] A process is a program in execution. A process may include several threads. A thread is a basic unit of CPU use. It includes a thread ID, a program counter, a register set, and a memory stack.

- Protection vs. Security: [Section 1.9.] Protection - Mechanisms for controlling access to the resources provided by a computer system. Protection controls access to computer system resources. Security defends a system from internal and external attacks. Protection <> Security.

- Real-time system: [Section 1.11.8] Typically time-sensitive interaction with external devices.

- RPC (in context of inter-process communication): [Sect. 3.6.2] Remote Procedure Calls abstract the procedure-call mechanism for use between systems with network connections using message-based communication.

- Shared memory systems: [Sect. 3.4.1] A region of memory residing in the address space of two or more cooperating processes. "Shared memory is memory that is shared" is circular.

Alternative: Separate CPUs that address a common pool of memory

- Shared memory vs. Message passing: [Section 3.4.] Be careful to avoid circular definitions: "Shared memory is memory that is shared" counts zero points.

- Sockets: [Sect. 3.6.1] An endpoint for communication. A pair of processes communicating over a network employs a pair of sockets. A socket is identified by an IP address and a port number.

- Symmetric multiprocessing (SMP): [P. 15] Multiprocessing system in which each processor performs all tasks within the operating system. Does your "definition" hold also for asymmetric?

- Thread cancelation: [Sect. 4.6.3] Task of terminating a thread before it has completed.

- Thread library: [Section 4.4] Provides a programmer with an API for creating and managing threads.

- Thread-Local Storage: [ 4.6.4] Data of which each thread must keep its own copy. Do not confuse with local variables.

- Thread pool: [Sect. 4.5.1] Create a number of threads at process startup and place them in a pool, where they sit and wait for work.

- Time sharing: [P. 20] The CPU executes multiple jobs by switching among them, but the switches happen so frequently that the users can interact with each program while it is running.

- User mode vs. Kernel mode: [Section 1.5.1] User mode is a state of the operating system in which most instructions of user applications are executed. Kernel mode is a state of the operating system in which some critical portions of the operating system is executed. What is it called when a user process makes an operating system call for service? Most operating system code is executed in user mode.

- User thread: [Section 4.3] Created by user processes.

- Virtual machine: [Section 1.11.6] Abstract the hardware of a single computer into several different execution environments, creating the illusion that each computing environment is running its own private computer.

Problem 2 Tricky C - fork()

Consider this C program:

#include <stdio.h>

#include <unistd.h>

int main() {

fork();

fork();

fork();

printf(" Done!\n");

return 0;

}

This program compiles and runs to completion.

- [10 pts.] How many "Done!" lines are printed?

- [15 pts.] Explain your answer.

8

See exercise 3.2, p. 149, after Figure 3.31.

Consider this instrumented version of the same program:

#include <stdio.h>

#include <unistd.h>

int main() {

printf("%d\n", getpid());

fork();

printf("%d\n", getpid());

fork();

printf("%d\n", getpid());

fork();

printf("%d\n", getpid());

printf(" Done!\n");

return 0;

}

When I run it, I get

$ pwd

... /13materials/14osc9e/ch3

$ make 3.2

cc fork-question-2.c -o fork-question-2

fork-question-2

75870

75870

75870

75871

75872

75871

75870

Done!

75873

Done!

75872

Done!

75871

Done!

75874

75876

Done!

75875

Done!

75874

Done!

75877

Done!

$

Of course, we should test return codes. This assumes each fork() call is successful [or -5 pts.]

Problem 3 "Best" Operating System

Suppose you are hired to choose "the best operating system." How would you decide? What do you look for?

- Briefly outline your strategy.

- List five metrics you would use and their relative weights.

- [__ %] . . .

- [__ %] . . .

- [__ %] . . .

- [__ %] . . .

- [__ %] . . .

- Explain your choices.

Hint: Answer the question asked. What makes an operating system "good?" I am not asking what is the best OS. I am asking for the metrics by which you would judge the best. This is not an excuse for philosophical or OS religion BS; it is asking about your understanding of what an operating system should do.

Purpose: [-5 points] if you do not start with concerns of what the users of your OS should accomplish. We have computers to get work done.

- Strategy must begin with requirements. You should outline what you want the OS to do. You could address a general-purpose OS, a server OS, a network OS, an OS to host development, an OS for a printer, or an OS for a specific embedded system. Then talk about how well it does it. The more narrow is your assumed application, the easier it is to list metrics that measure its suitability to the task.

I liked the answer that suggested assembling a group of users to use the candidate OS's.

- Depending on the work you expect your OS to do, you might list cost (initial and long-term) resource management, protection and security, host applications, response time, throughput, software portability, development productivity, scalability, support for hardware, communications, system integration, openness/closedness, cost, or many specific features. Are your metrics measurable?

Most current security threats are threats because "security" was not on the list of requirements of early OS developers. Hence, security has been added on, rather than designed in. "Protection" was a requirement for mainframes, but when computers got "personal," we did not think we needed to worry (much) about that any more. Who needs protection from themselves? (All of us.) Then we connected our "personal" computers to the Internet.

Some lists were quite low level. If you were choosing between Windows, Mac, or Linux for a corporate standard, would you ask about support for micro-threading or the fairness in process scheduling? Or would you time benchmark applications? Exception: One student said, there are important business drivers including cost, reliability, etc., but I am focusing on technical matters. I accepted that.

- Explain your choices. Does your answer offer evidence you have learned something in this class you did not already know? Excellent if you recognize cost. Excellent2 if "cost" includes long-term support.

The question asked about OS, not about hardware. Factors such as size, protability, or storage capacity counted negatively.

In the context of scheduling and dispatching of processes on a single-core processor,

- (5 points) What is the purpose of a context switch?

- (20 points) Describe the steps, in order, taken by a kernel to context-switch between processes.

This question intentionally is repeated from last year's (2014) exam.

See Section 3.2.3. This question reflects what you are to do for Homework 4; theory (George) and homework (Dennis) are one.

Context switch changes which process holds the CPU. It does not interrupt

a running process, nor does it do the work of the scheduler to determine

which is the next process to run.

At a high level, the assignment saves state in the PCB. To

paraphrase the current comments in the TODO section of the code:

* save callee-save ("non-volatile") registers to the stack

* save outgoing stack pointer to Process Control Block

* load incoming stack pointer from PCB

* restore callee-save ("non-volatile") registers

* restore argument registers (for first time process is run)

* jump or return to destination address

Save and restore the "state"? What consitutes the state of a process?

How is control handed over to the incoming process? (or -5)

Think for a moment. Can you write code to save or to restore the Program

Counter? No. Why not? In our design, the last instruction executed by the

context switch is RETURN, as if from a function call.

Could the use of "kernel" in this question offer a hint for parts of Problem 1?

Problem 5 Waiting

Management of waiting is an essential part of every operating system. The text and I have discussed often the ready and blocked waiting states.

- ready state

- List several ways a process could have gotten into the ready state.

- What is the process waiting for?

- Could the process get "lost" waiting indefinitely? Explain.

- blocked state

- List several ways a process could have gotten into into the blocked state.

- What is the process waiting for?

- Could the process get "lost" waiting indefinitely? Explain.

- What features could an operating system incorporate to deal with the possibility that processes could start to wait for an event that might never happen?

This question is not about fork(). The only reason to even mention fork() might be in process creation.

- ready: A process can be placed into the ready state by the operating system when it is created, when it voluntaritly gives up the CPU (as by calling resched() on its own), when it is removed from the Run state following a timer interrupt, or from the Blocked state when the resource for which it was waiting becomes available.

CPU

Depends on the scheduling discipline. If there is no preemption and the Running process never gives up the CPU, all ready processes wait indefinitely. Could that be the case for a badly behaved process in your current assignment?

If the wait list is a queue, no. Under other disciplines (e.g., priorities) we will study in Chapter 6, perhaps.

- blocked: A running process effectively blocks itself by requesting certain resources from the operating system.

Whatever resource the process requested, for example, to receive a message, to access the hard drive, to receive network traffic, for another process to terminate, or in response to a page fault.

-5 points if you failed to point out in some way that there are multiple blocked states.

Easily. For example, the process might wait for a message no one ever sends.

- Different strategies (sort of) work for different resources. We will study several throughout the semester. "Aging" is a strategy to adjust priority artificially. Waiting for a message that never arrives may be acceptable, or we may force the process to wait for only a finite amount of time.

A later student asked:

Would another acceptable solution be that the operating system simply not incorporate it and instead use peripherals to generate interrupts (like when a button or a key is pressed / released) as needed? I am in an embedded class right now as well, where we are focusing on timers and interrupts, and was just curious if this answer would fall under the "OS umbrella" category, or if it is not entirely relevant.

Yes, that absolutely counts as an OS strategy. As an OS strategy, I'd call that, "Ignore the problem. Let the users deal with it." It is a VERY common OS strategy. That's what <CRTL> - <ALT> - <DELETE> is for, isn't it? Or just press and hold the [POWER] button?

We probably always should provide a feature such as a button for the user to press as a back-up, but if we can make the OS smart enough to get it right, we'd prefer our OS took care of preventing/avoiding such problems.

Using a timer to interrupt if a wait has been going on for a while is often an excellent way to prevent infinite waits.

IMHO, there is no boundary between hardware and software; there are only "systems," and their users simply expect them to WORK.

Any but the simplest OS cannot function without timers and interrupts. Otherwise, the OS would spend all the CPU time constantly polling, "Has anything happened yet?" Similarly, a good mechanism for handling timers and interrupts is a central mission of any OS.

Yes, there should be MANY connections between your embedded class and this class.

Wait on/for? One waits on a "superior" to provide a service for example, a server in a restaurant. One waits for a peer or a subordinate. In an operating systems context, a server may wait on a client as it services a request from the client, or it may wait for a client to make a request.

Language matters. Use it with care, love, and respect.

Problem 6 [Not included this year] Processes vs. Threads

Context: One operating system (Linux) treats processes and threads in the same way, allowing a task to be more akin to a process or a thread (as described in our text) depending on flags passed to the system call that creates it. Another operating system (Windows) treat processes and threads quite differently. The Process Control Block contains pointers to the separate threads belonging to the process.

Question: Contrast these two approaches for modeling processes and threads within the kernel. What differences do the two approaches make? What are some consequences?

Hint: Answer the question asked. This is not a question about Linux or Windows. It tests your ability to reason about differences in the treatments of processes and threads.

See Problem 4.16, p. 193.

Define what you understand "processes'' to mean.

Define what you understand "thread'' to mean.

The Windows interpretation is quite close to that of our text.

Explain how you think the Linux version must work.

Explain what differences you think it makes. For example, the Linux model probably makes it easier for you to flood your own computer with many threads competing for CPU time, slowing down non-participating processes.

| |