| |

Directions:

Read and follow the directions.

- Write on four of the five questions. If you write more

than four questions, only the first four will be graded. You do not get

extra credit for doing extra problems.

- Read the entire exam. If there is anything you do not understand about

a question, please ask at once.

- If you find a question ambiguous, begin your answer by stating clearly

what interpretation of the question you intend to answer.

- Begin your answer to each question at the top of a fresh sheet of

paper. [Or -5]

- Be sure I can read what you write.

- Write only on one side of a sheet.

- Be sure your name is on each sheet of paper you hand

in. [Or -5]

- If I ask questions with parts

- . . .

- . . .

your answer should show parts

- . . .

- . . .

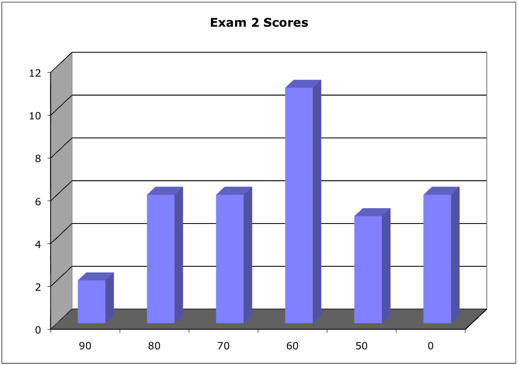

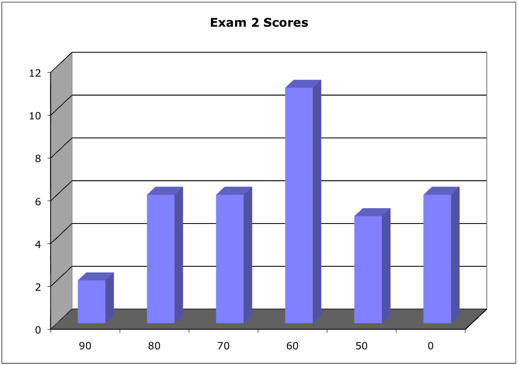

Score Distribution:

Problem 1 Threads

- Describe the actions taken by a kernel to context-switch between kernel-level threads.

- What resources are used when a thread is created?

How do they differ from those used when a process is created?

- Problem 4.3, p. 174. Answer similar to Assignment 4.

Half of the actions are on the outgoing thread; half are on the incoming thread.

- Save certain registers in Thread Control Block

- Move outgoing thread to Ready or a Waiting queue

- Select incoming thread

- Restore registers from incoming Thread Control Block

- Load Program Counter with previous Program Counter from incoming thread (after restoring registers)

Stack? Depends on the hardware architecture. Often, the stack for a thread is in the thread's main memory,

and only the Stack Pointer register needs to be saved and restores.

This question is not about one-to-one, one-to-many, or many-to-many.

- Problem 4.4, p. 174. Many said, "Threads use fewer resources." Yes, tell me about that.

Resources (be specific):

- Memory for Thread Control Block

- Memory for thread-specific variables

- Much memory is shared among threads belonging to one process

Some differences include:

- Process Control Block is larger than Thread Control Block

- Process needs memory for code, as well as data

- Process needs space for Open File Table

Problem 2 MIPS code from Project 6's testAndSet()

Here is the MIPS code from Project 6's testAndSet() function:

testAndSet:

ll t0, 0(a0)

ori v0, t0, 1

beq v0, t0, tasdone /* lock already held */

sc v0, 0(a0) /* try atomic update */

beq v0, zero, testAndSet /* failed, retry */

move v0, zero /* succeeded */

tasdone:

jr ra

- What does this code do?

What does it return?

Can you write an equivalent version in C?

- Write a short C function lock() that uses testAndSet() to acquire a lock before

entering a critical section.

- Is your lock() implementation blocking?

Is it

fair?

- How does this mutex mechanism compare with Xinu semaphores?

Dr. Brylow writes:

- What does this code do?

It attempts an atomic increment of a lock location in memory, pointed to by

the first argument. If the lock is already held, it returns immediately,

and if the "load-linked" and "store conditional" operations are interrupted

by any other access to the lock location, it tries again.

What does it return?

It returns the value of the memory location prior to the atomic update. If

the return value is 1, someone else already has the lock. If it is 0, the

lock is yours now.

Can you write an equivalent version in C?

You cannot write an equivalent version in C. The semantics of the hardware

op codes for performing the atomic update cannot be duplicated directly in a

platform-independent language like C.

- Write a short C function lock() that uses testAndSet() to acquire a

lock before entering a critical section.

void lock(int *lockVariable)

{

while (testAndSet(lockVariable))

;

// When this while loop ends, we can enter our critical section.

}

- Is your lock() implementation blocking?

Is it fair?

My implementation is blocking, and it is not "fair". Students may come up

with many variants, but they have to build a fairly elaborate function to

get either non-blocking or fair behavior. In fact, under the worst

hypothetical conditions in a heavily interrupted system, the testAndSet()

call could loop indefinitely, trying vainly to grab the lock but never

succeeding, even if the lock is free. (In practice, this is a bizarrely

unlikely degenerate case, though.)

- How does this mutex mechanism compare with Xinu semaphores?

Xinu semaphores are non-blocking -- in the sense that the waiting process

places itself in a wait queue and yields the processor for other work to be

accomplished -- and fair -- because the semaphore wait queues enforce FIFO

ordering of waiting processes.

To be clear, we *can* build a fair, non-blocking mutex implementation

that would combine the LL/SC hardware mechanism with something like the Xinu

semaphore design. But we didn't, because the interrupt disable/restore

mechanism we did use is easier and more widely portable. An

embedded/real-time system designer with a stronger interest in avoiding

disabled interrupts would want to invest the time in building such a system,

though.

Problem 3 Deadlock Prevention

Describe four strategies for preventing deadlock in an operating system. For each strategy,

describe what that strategy would mean in the Dining Philosophers problem and explain how

that strategy is applied in one example from a modern operating system.

Hint: You answer should have the form:

- Name of strategy

- Description

- In the Dining Philosophers problem, this strategy would mean ...

- Windows/Mac/Linux/... uses this strategy for ...

See Section 7.4, p. 291 - 294

- Deny mutual exclusion - Allow processes to share, e.g., memory or screen. Philosophers share chopsticks.

- Deny hold and wait - If a request from a process for resources is denied, the process relinquishes the resources it holds, e.g., a process blocking itself on some resource relinquishes the CPU.

If a philosopher cannot pick up both chopsticks, he must relinquish any he holds. Alternatively,

request and acquire both chopsticks before he starts.

- Deny no preemption - Allow a process to preempt a resource from a process that holds it, e.g., preemptive scheduling of the CPU. A philosopher can take a chopstick away from his neighbor.

- Deny circular wait - Request resources in some pre-determined order, e.g., a process must be allocated at least a little memory before it can run and request other resources. Either philosophers are numbered and

pick up chopsticks in order of their numbers, or chopsticks are numbered and must be picked up in order.

Many students were confused by the implied double negatives. For example,

NOT(mutual exclusion) means share. If anyone should be able to parse NOT(NOT(.)), it should be us.

Prevention is one of four strategies our book gives for handling deadlocks. Avoidance, detection, recovery, and doing nothing are not prevention strategies.

Problem 4 How large should a quantum be?

Suppose we are using preemptive process scheduling. Choosing the correct

quantum size (how long a process is allowed to run) is important to the

effective operation of the operating system. Consider a single processor

operating system that supports a large number of interactive users. Each

time a process gets the processor, the interrupt clock is set to interrupt

after the quantum Q expires. Assume that the same quantum is used for all

processes.

- What is the effect of setting the quantum to a very large value, say

ten minutes?

- What is the effect if the quantum is set to a very small value, say a

few processor cycles?

- Your answers to parts a) and b) should suggest that an appropriate

quantum must be in between these extremes. Suppose you could turn a dial

(or drag a slider bar) and vary the quantum. How would you know when you

had chosen the "right" value? What factors make this value right from

the user's perspective? What factors make it right from the system's

point of view?

Hint: This is a mathematical modeling question. It should remind you of

some calculus homework problems. Be as quantitative as possible.

- There is essentially no preemption, so this is like a run-to-completion scheduling, with the running process getting excellent service and some processes waiting a very long time. System response seems terrible. The advantage is that the CPU spends almost all of its time doing useful work. Throughput is high. If a process finishes, surely we would bring in the next process and not do nothing.

- Almost no useful work would be done. How long does a context swap take?

- Be as quantitative as possible. I expect a mathematical treatment of some kind

(or -5).

What are your criteria for "goodness?"

You might take Q = 1 / (2 x number of users) so everyone gets two CPU bursts per second.

You might take the max of that with about 100 x cost of context swap, so each process gets a chance to

do something useful while it is executing, even if there are MANY users.

You might take half (or 80%) of the average CPU burst of the job pool.

With both a) and b), one problem is long waiting times, so you might choose Q to minimize the

average waiting time.

You might adjust Q to minimize the number of help desk calls.

Problem 5 Explain virtual memory?

- In a modern virtual memory 64-bit processor, how many pages might there be?

How large might each page be? Why?

How large would the page table be? Why?

Hint: I am not expecting you to know the "right" answer, because there are many

"right" answers. I am asking you to speculate and to justify your speculation.

- Explain the actions of a virtual memory system making one memory access when there is a TLB hit. Your answer should include at least

- a diagram

- a list of the names of at least five components, e.g., TLB - written out in non-acronym form

- Which of those components would you expect to be implemented in hardware?

- Description of the sequence of actions

- In part b, what additional actions are needed if the memory access generates a TLB miss? Include a fresh diagram and describe the additional actions.

Hint: I am looking for evidence that you understand how virtual memory works.

Your figures might resemble Figures 8.7, 8.8, 8.9, 8.11, 8.14, 9.6, 9.9, 9.10.

One intent of this question is that you look critically at that you are being told.

It cannot all be true!

- The question asks, "How large?" Be quantitative.

To be a 64-bit machine means that addresses are 64 bits. In a virtual memory system,

we use n bits as the page number, leaving 64-n bits for the offset within a page.

If the page size is 4Kb, 64-n = 12 bits, so there are n = 52 bits left for the

page number, allowing 252 pages. Hence, the page table needs 252 entries.

Each entry in the page table needs to hold the number of the page frame in physical memory.

If you have 4 Gb of physical memory holding page frames of size 4 Kb, you have room for

about 220 page frames, so the entries in the page table must be at least 20 bits.

A good student of history might make them 32 bits, or perhaps 64 bits like the rest of the machine.

Let's assume 252 32 bit entries for the page table. That is 256 bytes,

or roughly 224 Gb !!!!! Clearly, that page table will not fit on all the disk drives

in the world combined, let alone in your machine's memory, let alone in hardware. And that is only

the page table for one process.

What do you conclude?

- This part is about how to access a specific memory location, given its virtual address.

Usually, a Translation Look-aside Buffer (TLB) is a cache for part of the Page Table.

If you treated the TLB as holding the entire page table, you missed the point of part a),

but you still got essentially the correct answer to part b).

Steps might look something like this:

- The CPU has a <virtual address>, for an instruction fetch, a data read (LOAD instruction),

or a data write (STORE instruction)

- View the <virtual address> as an n-bit <page number> and a 64-n bit <offset>

- Look up the <page number> in the TLB. A hit yields the corresponding <frame number>

in physical memory.

- Concatenate the <frame number> with the original <offset> to get the

<physical address>

- Use the <physical address> to access the physical memory

- Route the result to the CPU

- This part is also about how to access a specific memory location, given its virtual address.

Usually, a Translation Look-aside Buffer (TLB) is a cache for part of the Page Table.

Some students treated the TLB as holding the entire page table. I counted that as essentially

correct, but it gives a quite different answer.

Steps might look something like this:

- The CPU has a <virtual address>, for an instruction fetch, a data read (LOAD instruction),

or a data write (STORE instruction)

- View the <virtual address> as an n-bit <page number> and a 64-n bit <offset>

- Look up the <page number> in the TLB. A miss means that the <page number>

is not in the TLB.

- If the TLB is a cache for the page table, we next look in the page table in a similar

manner: look up the <page number> in the page table. There are two possibilities: hit or miss:

- If we hit in the page table, we get the corresponding <frame number> in physical memory.

- Concatenate the <frame number> with the original <offset> to get the

<physical address>

- Use the <physical address> to access the physical memory

- Route the result to the CPU

If we miss in the page table, we have a page fault

- Select a victim page frame

- If necessary (dirty), write the victim page back to disk

- Locate the desired page on disk

- Copy it from the disk into the selected page frame

- Update the page table for that <page number> with the appropriate <frame number>

- Route the result to the CPU

- In either case, in the TLB, we must select a victim location and update the TLB with the appropriate <frame number>

| |