
Questions from the Cards

Week 4: Threads


Could you share a system flow chart showing the relationship between functions in kprintf.c? We explain it instead. Explicitly, kprintf calls kputc. That is the one significant relationship among the functions given to you. You are to write test cases to test the rest of the functions, such as kgetc, kcheckc, and kungetc. kgetc and kungetc refer to a shared buffer, ungetArray[UNGETMAX], which is defined at the top of kprintf.c.

How are we supposed to use the unget buffer? The unget buffer is located at the top of kprintf.c. If you look in the C book, there is an example to which you should refer (this is a major hint).

What happens when an OS runs out of memory for the processors to share? Ideally, the line

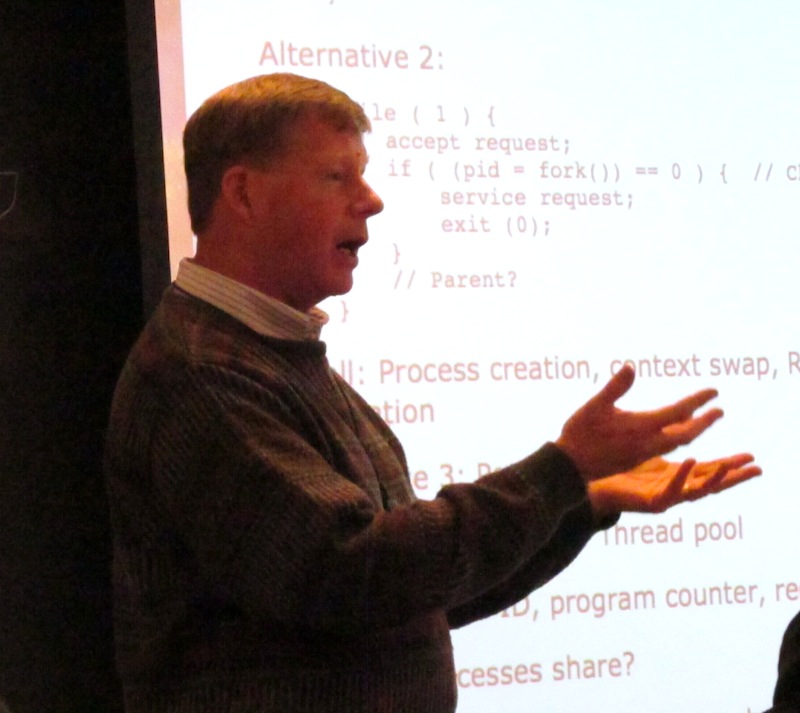

At the other extreme, a full system crash. Or worse, it returns memory already being shared by someone else.

If I do the MOOCS-for-final deal, do I have to pay the fee for an official certificate? No. I do not require an official certificate. The free versions suffice. What is required is that you do the work. At the end, you should see some sort of "Congratulations" message, email, splash screen, score summary, etc. A screen capture suffices. I want evidence of successful completion. You have already paid enough in tuition for this class. If you have not already signed up for your MOOCS instead of the final, see moocsOnOperSys.html. You have until Feb. 19. After that, the offer is over, and you may sit for the final.

1. TAs did a good job explaining the project--hopefully it will go well. Thanks!

2. When we work on the project, should we start with the ARM assembly part since that's what we would need to call from C? Ideally, you should only call the assembly routines (_lock_acquire and _lock_release) from within the respective C methods (lock_acquire and lock_release). My suggestion is to start with the assembly code, and have the C method only call the assembly at first, then later add the accounting parts to the C methods once your assembly code works.

3. Are the pigs thirsty? Maybe.

4. When a spin lock guards a resource and more than one other core is spinning while trying to access it, is it a race condition when the resource becomes available, or should it be handled differently? No, the other cores cannot get each other stuck when the resource becomes available. There are exclusive-access ARM assembly instructions that make sure things like that can't happen.

5. Are there other ways to accomplish mutual exclusion other than spinlocks? Yes, and we will talk about several in Chapter 5. Semaphores may be the tool most widely used in modern operating systems.

6.
Can we switch lab partners? What if they didn't really contribute even though you asked them for ideas or attempted to work together? Only in extraordinary circumstances, worked out with Dennis. Generally, no. You WILL have an opportunity to change at mid-terms. This is not the last time in your life you'll work with someone not of your choosing. Make it work. And keep a journal of challenges overcome to tell at job interviews. WARNING: If you are the lab partner from whom the questioner(s) is trying to escape, you need to pick up your end of the log. NOW!

What sections will be on Exam 1? Chapters 1 through 5, EXCEPT sections 3.6, 4.3 and following, 5.3, and 5.6. And we will not ask details of specific modern operating systems.

What if core A gets past the wait condition before core B can lock it? Checking the lock is an indivisible instruction. Whichever request the hardware accepts first is granted, and any other nearly-simultaneous requests do not return from their lock call until their request is granted. Asking for a lock (like the semaphore wait() instruction) blocks until the request is granted.

Will we need to use a counting semaphore for any projects in this class? Yes.

I still don't quite understand how it still works if the two processes run at the same time, and S gets set to -1. Why does it still work? For either your spin locks or for the semaphores I described in class today, the hardware enforces that one request is handled first. Hence, the first handled request sets the lock (or the semaphore to zero), and the next request waits.

How can the hardware enforce, "One is handled first?" Bus contention. If one unit of hardware can respond to any of four cores, that unit and the four cores must share some sort of electrical bus. Any such bus MUST have a protocol for two simultaneous broadcasts to the bus. The most common conflict resolution strategy is similar to polite guests at a party. You speak AND you listen.
If you detect that someone else started speaking at the same time you did, you pause (I said POLITE guests), wait a random amount of time, and start speaking again. Probably, one of you will start speaking again before the other. If you both start at the same time again, you each wait again, probably a little longer this time. Another strategy might decide ties based on core ID or some priority.

Not a question, but I think it would be helpful to look at a few examples rather than just one. Agreed. We'll do Producer-Consumer next Friday.

Is a semaphore declared as an "int" or a "Semaphore"? Depends on what library you are using. In POSIX, it's "sem_t". The OS needs to know you are asking for an operating system object.

What happens to the program that waits? It allows for other programs to be useful, but when does the waiting program run? The waiting program is waiting for SOMEthing, a semaphore to be signaled in this discussion. Messages work the same way. When the operating system determines that the event for which the waiter was waiting has occurred, the operating system changes the waiting process from its Waiting state to the Ready state. Eventually, the Ready process will be selected to Run. We will learn a lot more about process states in coming chapters and projects.

Why are we using a spinlock instead of a semaphore for this assignment? On our Pis, spinlocks are a bit easier to use, and there are no other processes waiting to do useful things anyway, so spinning is not really wasteful.

-- GC

1. What kind of test cases should we run for Project 3? three? You should write tests to check thoroughly the functionality of your four synchronous serial driver functions. In addition to TA-Bot's independent testing of your functions, a human TA will be assigning points to your test cases based upon their coverage.
If TA-Bot's tests uncover significant bugs in your implementation, *and* there is no sign that you wrote tests of your own to uncover the same issues, that looks particularly bad. TA-Bot is not a crutch. You are expected to think about and devise your own tests for every assignment in this course.

2. In kcheckc(void), what part of the UART do we check for characters? The same part you check for characters in kgetc().

3. Should kgetc() replicate getch() we have used in the past? Yes, it should be a functional replacement for both the K&R getch() and the O/S getc() functions you have used in the past.

4. Will all of the future projects build on one another? For example, if our project five does not work, will that affect our project six? Not all, but many. We actually try to make them somewhat independent when we can, but since the overarching theme of this semester is building large chunks of your own embedded O/S, it is inevitable that some pieces will depend on other core components that you have written.

-Dr. D

5. Do processes still share copies of memory after fork()? Yes, in the processes described by our text. There are MANY variants in details. It is common to have a variant of fork() that loads a new executable into the child’s memory instead of a copy of the parent. Processes and threads described in our text are extremes of a continuum. Windows has processes, but no threads, except that processes can have blocks of memory in common. Linux has threads but not processes, except that threads can be loaded with a copy of the parent’s memory.

6. What would be a situation where the process would be faster than a thread? If you only need one thread, it is faster to do the work in the parent itself.

7. What kinds of variables besides constants might a thread share with its main process? Depends on the application. Word shares the buffer containing the text of the document among (most of) its threads. A browser shares the screen buffer among (most of) its threads.

8.
How do threads create their own copies of local variables? Give each thread its own stack of activation records that store variables local to each called function. In our runner example, any variables declared in void *runner() are local to the function, hence are stored in its activation record, which should be stored in the memory for its thread.

9. Why can any thread be interrupted at any time? To give the illusion of many threads/processes running simultaneously. In (nearly) all general-purpose computers, there may be many processes or threads in a ready state. A hardware timer goes off at intervals (sub-millisecond in modern processors). The interrupt handler swaps out the currently running process/thread, selects a new process/thread from the Ready list, and swaps that process/thread into the Running state. You will build that machinery for processes in Project 5. We will talk on Friday about whether the Scheduler should work on processes or on threads.

10. What destroys a thread? Similar to a process, a thread may terminate a) voluntarily, usually by exit(), b) by being kill()ed by a sibling, a parent, or someone else, or c) when its parent terminates (in some systems).

11. How would websites that use features like infinite scrolling work? Would the threads work the same? Yes.

12. What is the solution to the race condition problem? Careful system design using synchronization primitives, as we will study in Chapter 5 and Project 6.

13. How often does this problem happen in real life? Frequently. I will tell a story some other day of a former student who got fired for pointing out to his boss a race condition in their customer billing system. Spoiler: The student won in the end.

-- GC

1. What do we need to do for the ARM assembly part of the assignment? Store all of the context for the outgoing process, and load all of the context for the incoming process. This can only be done in assembly language because it directly manipulates values in processor registers.
To quote directly from the project spec: "Our context switch can be completed using only arithmetic opcodes, and the load (ldr), store (str), and move (mov) opcodes."

2. If only one process can run at a time, how can we have between 0 and 50 processes at a time? Only one process can run at a time on a single core processor, but up to 49 others can be waiting their turn to run in the next timeslice. When your O/S rapidly context switches between many different processes each second, it gives us the illusion that many processes are running at once.

3. Why does kernel memory go on the bottom of this stack if everything else is put at the top? A process stack (or activation record stack) in this assignment is a contiguous chunk of memory allocated to a specific process. We will be working with many process stacks. Kernel memory, in contrast, is the space in memory where the kernel is stored. Process stacks are data. Kernel memory is code. (Well, mostly code, with a little global data thrown in as well.) For Embedded Xinu, we have chosen to place the kernel in the lowest addresses of physical RAM, in part because that is where the ARM processor expects to begin execution when it boots.

4. Will we get grades before the deadline of the next project? The TAs will try.

5. Are the rest of these projects done in our teams? Yes.

6. Our projects build on previous ones. If the prior project has errors, and we used the files in the new project, will we be down-graded twice? Each project will be graded with a rubric primarily focused on the new component that is being built. However, if, after another week of time to work on it, you still have show-stopping bugs in previous components, you may have great difficulty getting the new components to work on top.

7. How will not having kgetc() affect the assignments moving forward? Without kgetc(), you cannot read input.
You'll be unable to select your own test cases, and TA-Bot will not be able to communicate with your kernel for testing, either.

8. Is there some type of version control for TA-Bot? Is it possible to retrieve a file you turned in to TA-Bot? I can retrieve them. We have not devised a mechanism for you to retrieve files submitted to TA-Bot. I strongly encourage everyone to use version control software for their projects. If you are not familiar with this, we will be suggesting some options in an upcoming lab session.

9. Gitlab.mscs has been down recently. Do you have another recommended method of version control? I can recommend subversion and GitHub, among others.

10. If Xinu is old and being phased out, why do we continue to maintain it? You have misunderstood me if you believe I've said that Xinu was being phased out. In the 1990's, it became difficult to keep Xinu current with the more complex PC hardware that was being sold. The proliferation of inexpensive embedded devices with powerful network boot systems has largely eliminated those technical hurdles since the turn of the millennium. Xinu is old in the sense that George and I are old -- all of us were initially created decades ago. The same can be said for the C programming language, which is almost laughably immune to being "phased out". The version of Xinu we use is only a few years old, and is based on a fresh port of the system that was created here at Marquette just in the last decade. The variant you're using for the assignments this term was actually just forked this week, and combines both some tried and true ideas I've used for many years, and some new directions I'm trying for the first time. If we can shake out some last few bugs in the very newest version, you could be the first group of students to write code for multicore Xinu in an O/S class. Anywhere. Part of our work on this was presented by MU student researchers at an international CS Education conference just two months ago in Finland.
All of the researchers and educators present agreed that nobody else had gotten something quite like this to work -- on any platform, with any code base. So, in short, we continue to maintain, extend and evolve Embedded Xinu because it allows us to give our students a cutting edge educational experience on par with -- and often ahead of -- what is being done at the best universities in the world. Xinu is old, but it is also constantly being reborn.

11. Do others still use Xinu outside Marquette? Other universities? Yes. There's no real central tracking mechanism, since the code we produce is freely shared on the Internet. But at last count, there were universities in every time zone of the U.S. using Xinu in their courses, and in foreign countries as far away as Qatar and South Korea. The second edition textbook, which uses a cousin to our Embedded Xinu, a few notches back in the family tree, was just published in 2015, and is sold all over the world. In the recent past, versions of our materials have been used at University of Buffalo, Indiana University, Tennessee Tech, Oberlin College, and Purdue, just to name schools that have contacted us for tech support. To be perfectly frank, it is still a hard sell. Most colleges don't have faculty willing to put in all of the work that is necessary to build and maintain a real, experimental O/S lab for their undergraduates. At Purdue, only graduate students have access, for example. For Marquette, the investment is made more practical because we've figured out how to leverage it to good effect in more courses than just O/S.

-Dr. D

1. What is the main function of the ctxsw.S file? To record and preserve the context of one outgoing process, and then restore and switch to the context of another process.

2. For the ARM part of Project 4, do you have to individually change each register, or is there a way to do this in a loop?
I am not aware of a way to do this in a loop on ARM, because registers must be named in the load and store opcodes, and there is no mechanism for iterating through registers as a source operand.

3. Will the TAs ever have office hours? Yes -- that can be arranged.

4. What is the command to find all our TODOs in our program? grep TODO *.c

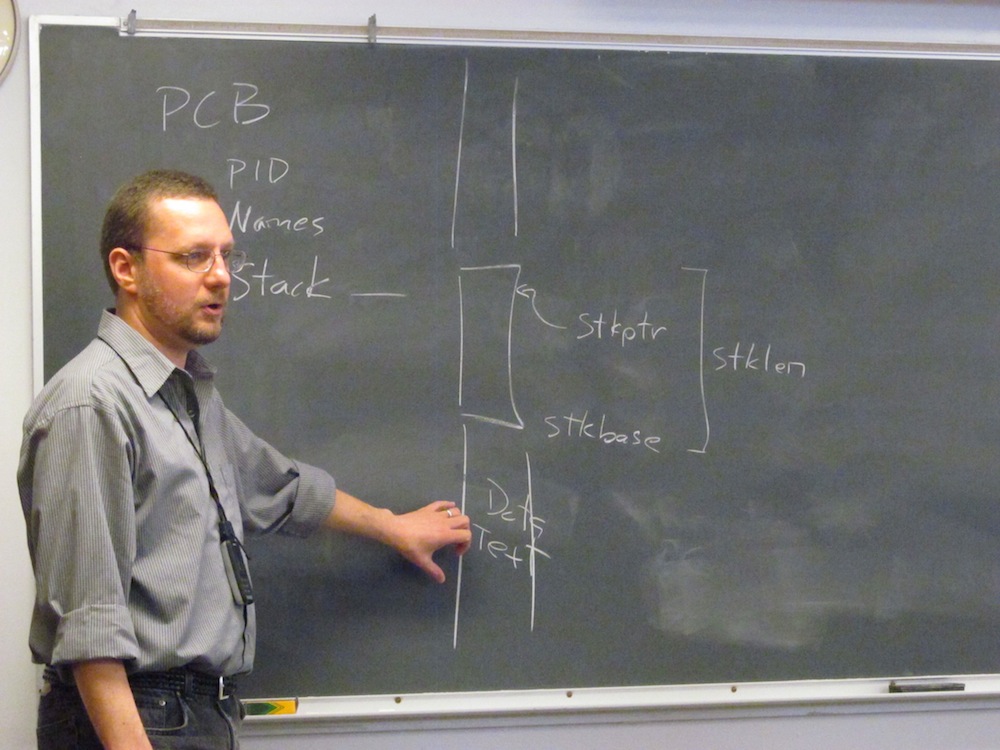

5. If we are writing create(), couldn't we just write another function that would act like fork()? Why is it either/or? Yes, you could.

6. When create() creates a brand-new process, how is it related to a thread concept? The similarity I was pointing out is that the XINU function create() creates a new process, but it loads fresh executable code (as our book attributes to threads) rather than making a copy of the contents of the parent process memory. The point is that in practice, there are MANY variants in the continuum between the concepts of “process” and “thread” described in the text.

7. It would be helpful to show diagrams for these concepts. Agreed.

8. How many threads can one processor handle? Thousands, but they won’t be fast.

9. What is the biggest piece of advice for studying this topic? For genuine learning, strive for understanding, not memorization. There are more questions in OS than answers. If the answers were clear, we would have a single OS. For doing well on exams, study old exams, read the text, and review lecture notes.

-- G

1) Did you write all of the code given to us for Projects 3 and 4? Probably not. The original author of Xinu did his work in the 1980's, and many Purdue graduate students enhanced that code and wrote new device drivers and subsystems over the years, including me. When I became a faculty member, I ported the Xinu design to embedded RISC processors (PowerPC and MIPS) and ANSI-standard C dialect. I've had a lot of Marquette research students -- undergraduate and graduate -- help me over the years, particularly with the latest port to ARM on Raspberry Pi. So while it is true that I've probably touched every line of code in Embedded Xinu at some point in the past decade, it is by no means all mine. That said, the particular variants of the system we use in class are more mine than any other branch of the Xinu family tree, including the publicly posted versions we give to other universities.

2) Where do we put the assembly code?
system/ctxsw.S

3) In which chapter of K&R is the '...' argument to functions explained? Don't have my book with me in Arizona. Look in the index for 'variadic functions', or the macros 'va_list', 'va_start' and 'va_end'. Dr. C: See Section 7.3 Variable-length Argument Lists.

4) How do you link C and assembly? The C linker does not differentiate between .o object files that came from .c source code versus .S assembly code. They can all be linked together happily, provided that the assembly and C functions agree on a Standard Calling Convention.

5) Where does a PCB get stored? Is it an O/S-specific area of memory? In a production-quality, general purpose O/S, yes, the PCBs are probably stored in a protected region of kernel memory that only the O/S can access directly. In our simplified embedded O/S, they are just a global variable structure like everything else.

6) How is a buffer different from an array in implementation? The notion of "buffer" in computing is, "a temporary memory area in which data is stored while it is being processed or transferred, especially one used while streaming video or downloading audio." Thus, a buffer could be an arbitrary size block that stores a single structure or data segment at a time. When talking about serial communications, which includes things like streaming sequential data, a buffer entails some kind of FIFO, queue-like behavior. This can certainly be implemented with an array, but it could also be implemented with a lot of other possible structures.

7) Embedded Systems? I'm pretty sure I mentioned "...when we're building an embedded operating system", like, five times. It is my favorite way to justify the absurdly simple shortcuts we've made in Embedded Xinu to make the assignments tractable for sophomores and juniors.

8) How do we test Project 4? The key in Project 4 is to sketch out the context record you are building on the stack in create(), and make sure that it matches what you're writing in ctxsw().
The early 'test' is thus to print out the resulting data carefully with kprintf() calls, and to compare with what your design expects. Once that is looking good, then you can fully test it by actually create()ing, ready()ing and resched()ing to a brand new process. If that process has output, you'll know whether it worked. More advanced tests check repeatability, process termination, and argument passing. Most of these can be tested without actually running resched().

9) How do we code in ARM for the Project? Write assembly language in the system/ctxsw.S file, where the TODOs indicate. Run 'make' and 'arm-console' to compile and run your work from the compile/ directory.

10) What kind of process should we test for the next assignment? Mostly very simple processes that do or say one thing at a time to test functionality. In Embedded Xinu, any function can become the main program of a new process, just by virtue of being passed as an argument to create().

11) How do I know when to use flag register bits or data register bits? They share similar bit functions. Generally speaking, the UART hardware on this platform uses the flag register to communicate status information, and the data register just for received or transmitted data.

12) Can you do a git review in lab this week? Didn't the TAs present a basic git HOWTO last week? In any case, feel free to ask about it in lab this Friday. I wish I were back in time for lab this week, but I know you're in good hands.

13) Do we need to move our code from Project 3 into our Project 4 folder? Yes! Copy your kprintf.c file from P3 into the system/ folder for P4, or you won't see any serial output. You can do this using the GUI file manager on the Linux machines, or the command line 'cp' command.

14) So we were talking about a scheduler, but we didn't mention it today. Does this mean a non-preemptive O/S does not have a scheduler? Which PCB in the PRREADY state runs next?
Our O/S *does* have a scheduler, and it is the code in system/resched.c. This scheduler is very simple, and always picks the first ready process in the readylist queue to go next. We'll add more complex scheduling decisions as we go.

15) Why are the term/function/variable names so terse? Why resched() instead of reschedule()? Great question. You should know that the current version of Embedded Xinu we use in this class is positively talkative compared to most of its earlier ancestors. Nevertheless, it still betrays two aspects of its heritage. First, because Xinu was originally designed for pedagogical purposes, most of its names were restricted in length so that they would fit tidily into the textbook figures in which all the original source code was published. The books were the "octavo" size, and not the "quarto" format like the Dinosaur books, so it was even more compact. Second, most computer programmers in that era were just a lot more terse than today. Some early languages had actual hard limits on variable and function name lengths, and also it was common to connect by slow modem lines where those extra characters could really add up in longer chunks of code. The more common camel-casing and longer descriptive naming styles didn't really come into fashion until Xinu was past its first decade. You can still see remnants of this era in the terseness of Unix shell commands, too.

16) When can I get access to the lab? If you do not have access to the lab, please go see Mr. Steven Goodman, the MSCS department system administrator, in CU 374. Do not leave until he has fixed your access problems. This should have been automatic at the beginning of the term, but there are a lot of offices on campus that have a hand in the campus access databases, thus there are many weird cases that crop up and require manual intervention.

Contents:
o General
o Threads

General

1. Is there a difference between a hard disk and a hard drive? No.

2.
Is computer science better than computer engineering? No. Ten years from now, you may be the only one who knows which was your major. Yes, if the job notice says, "Engineers need not apply."

3. What is your go-to programming language? Depends on the problem and especially the environment with which the solution must inter-operate. In the past week, Matlab, Perl, Javascript.

4. What do you like on your hotdog? Catsup, mustard, and relish. Or sauerkraut on GOOD hot dogs.

Threads

Around "threads," and many other operating systems concepts, you should beware of confining definitions. Our book is presenting *concepts* of "process" and "thread," but each operating system has its own use of terms and its own implementation. Details can be *completely* different. RTFM. In the sense of our book, you fork() a process, and you spawn or create a thread.

5. In the example of the thread summing numbers 1 - 100, why are the threads sharing the sum variable? Doesn't this open the opportunity for a race condition? Yes, race conditions are possible whenever two apparently simultaneous tasks update a shared resource, but without sharing, little meaningful computing is possible.

6. How does memory handling work with threads? Generally, the operating system manages memory for processes. Management of memory belonging to multiple threads belonging to the same process is the responsibility of the designer and programmer of the process, as when we made sum a global variable in today's example.

7. If threads are related to a parent process, does that mean that all threads are running while the parent process is running? We will address that question on Friday. The answer depends on the interpretation of each operating system about what constitutes a thread.

8. Do you prefer the Windows or the Linux approach to threads? It has never made a noticeable difference in any work I have done. Therefore, I have no preference.

9. What would happen if you declared sum within the thread?
Which variable will the program honor? I guessed that the compiler would complain. However, when I tried it, the local variable sum masked the global variable sum, so the main program printed sum = 0, instead of the correct answer.

10. If fork() always results in another process with the same source code, why do processes not share the same source code in memory with pointers to their next command like threads do? In principle, each process has its own memory. Processes share memory only by using a shared memory construct. That is the way they are designed. Clearly, they could be designed differently.

11. What is the difference between Pthreads and Java threads? APIs and implementation details. In concept, they are very similar.

12. Can threads have priority as processes can? In principle, yes. In practice, it requires support of the API.

13. If you can, then why and how can you have threads without a process? In the sense of threads we discussed today, no.

14. How do you prioritize various threads while forking? In the sense of our book, you fork a process, and you create or spawn a thread. In either case, assigning a priority depends on support from the API. In principle, it works as you think it should: pass a priority into create(), or call a setPriority() function.

15. Can you spawn a thread from within a thread? Yes.

16. Can you fork() a thread? In the sense of our book, no, you fork() a process.

17. Can a thread fork()? Yes, although exactly what that should mean is not clear.

18. Can a thread create a sub-thread? Yes, by calling create().

19. So we spawn a thread, which is like a process but without fork()? In the sense of our book a thread is similar to a process in some ways, and very different in other ways. In a sense, create() for a thread is very similar to fork() for a process. In other ways, they are very different.

20. How are threads control-efficient?
In most implementations, a context swap for a thread is simpler than a context swap for a process. Virtual memory (Chapters 8 & 9) introduces additional possible efficiencies.

-- GC

Projects

1. How do we move the Stack Register? That question could be interpreted a lot of different ways. I'll take stabs at several variants:

2. Will we be implementing any of the solutions discussed today? No, but we will be implementing processes, process scheduling, message passing, and process synchronization.

3. How do I delete a file in Putty? I used 'mv', but it doesn't work. The command you want is 'rm'. However, you are deleting the file in UNIX. Putty is the terminal application you are using to access the UNIX machine.

General

4. What exactly is a kernel? Exactly? I cannot say definitively. A kernel is the central core of an operating system. Different operating systems define the scope of their kernels differently. Read Chapter 2 of our text, especially Section 2.7.

5. Are there any external materials on the solutions and the problems discussed in class? Most of the material today came from Chapter 4 of our textbook. As always, Google is your friend.

GE Hackathon

6. Is the GE hackathon an extra credit opportunity? Yes.

7. Are we excused from class if we choose to do the GE Hackathon? Yes, if you notify me in advance.

Processes and Threads

8. I know we've been over this, but why do we use fork? Examples please? We use fork() whenever we want to create a new process. For example, whenever you double click an icon on your desktop or a file, fork() is called on your behalf to create a process in which the appropriate application should run. When you start your computer up, the operating system start-up calls fork() repeatedly to start each of the processes and services you see running in the task manager. In one application I have written, a portion of the code could possibly crash. I fork() a process and run the suspect code in the child process. That way, if it crashes, the parent is still running to at least notify me that something bad happened. In another application, I want to run the same program on two different sets of data.
By forking two processes, one for each set of data, I can run them separately. On a machine with two or more cores, the result completes more than twice as fast as running the two processes one after the other.

9. Can you elaborate on the difference between user and kernel threads? A user thread is created by an application. In Wednesday's Pthreads examples, the function pthread_create() caused a *user* thread to come into existence. A kernel thread is the unit the operating system scheduler schedules. For example, the operating system may schedule each user process thread independently. If I know that is happening, I can get more than my share of CPU time by creating many, many threads. Alternatively, the operating system may schedule each process independently, without regard for threads running within it.

10. Do user threads break into kernel threads? That sounded like what was happening based on lecture. As suggested in the previous answer, one possibility is that each user thread is a kernel thread, and the operating system schedules each user thread independently. It is critical that you understand that "user thread" and "kernel thread", in fact "threads" and "processes" themselves, are *concepts*, not implementations. In practice, each operating system addresses these and related issues as its designers have chosen. You should attempt to understand them as *concepts*, meaning the details must be left unclear. You should also understand some of the design space choices available to an operating system designer. That was the point of today's exercise.

11. If you have a process that counts to 1000 and another process splits into two threads, each counting to 500, will the second process finish two times faster? It depends (in my best lawyer voice). In practice, for 1000 numbers, the single process probably completes first because it has less overhead.
However, if you count to one billion on a single processor, the two-process solution probably will finish slightly faster because it gets more slices of CPU time. On a dual-processor machine, the two-process solution might finish twice as fast, as you suggest.

12. Is it more acceptable to treat threads as processes (Linux vs. Windows)? Which one is better? I have never been able to tell any difference in my ability to get work done, so I have no opinion.

Fork(), exec(), and kill()

13. The solution to the kill() issue by choosing between process and thread seemed unclear. How does this solve the problem? The problem is, "What should be the meaning of kill()?" One solution is to say that the programmer who wants to kill() something has to say exactly what she/he wants to kill().

14. Would there ever be a reason to exec before a process completed? "Completed" may be ambiguous. Suppose you and a friend decide to buy a car together. Suppose you each go out shopping. Suppose your friend calls and says, "I found the perfect car." Wouldn't you terminate your search? Was your shopping process "complete?"

15. Has anyone ever taken your comments about killing children and parents seriously? No, but today's society is getting less and less tolerant of humor.

16. Are these still valid problems in today's systems? Yes, in the sense that the problems exist. No, in the sense that each of today's systems has adopted one or more solutions. From today's exercise, you should appreciate that most "solutions" come with their own problems.

17. Do methods such as fork(), kill(), or exec() differ in user vs. kernel threads? In concept, no; in implementation, surely.

Dr. C.

1. Compared to the material covered in class and HW/lab, how important is the content in the book? Important to success on exams? Roughly: book 60%; HW 20%; class 20%, except that most of the class discussion is intended to amplify material from the book.
However, the BEST answer is to review old exams and see what proportions YOU would assign. Important to career success? Depends hugely on your career path. If you do development, C skills from HW probably represent 3/4 of the value of the class. Lecture vs. book? The concepts from the book are more important than my in-class antics, but survivors of this class suggest they remember more about the concepts from lectures.

2. Can more than one program/process access one thread? No, sort of. A thread is spawned by a parent process. However, the thread can engage in Interprocess Communication (shared memory or message passing) with any other process.

3. Why is Brylow so tired? He is? Might have to do with two young children. Or with Office Hours starting at 9 P.M.

4. Would there ever be a time when it is better to use a process pool rather than a thread pool? Depends on how the OS schedules processes/threads. We will talk a little about that on Wednesday.

5. Will we need to create our own scheduler in this class? Yes, Assignments 4 and 5 are relatively simple schedulers.

6. Will we be having multi-core projects in this course? Not as multi-core, but the last couple of assignments call for communication between different Pis.

7. Is there a reason we would consider using fork() for another process as opposed to a thread? It seems like threads are advantageous because of the memory they save. Are threads only effective if they are going to be performing similar tasks? Can we get more information on when to use processes and when to use threads? Why do you suppose you have 50-150 processes running on your laptop? Probably because they have almost nothing to do with one another, so the memory protections built into the OS for separate processes ensure they work separately. If you are building one application, you may prefer the protection offered by processes, the small footprint and easier memory sharing offered by threads, or a mix of the two.
There may be process/thread scheduling advantages of one over the other. In some operating systems, you have no choice.

8. Is the idea of initially forking processes or threads the reason you can mount a Denial Of Service attack on a web site? In a way, a process/thread pool contributes, but MUCH more important is the understanding that being able to perform multiple tasks (essentially) simultaneously makes it possible to recognize that you are under attack and defend, e.g., refuse to accept requests from attacking IP addresses.

9. Where is the memory allocated for threads? From the memory allocated to the parent process.

10. Is there a way to declare immutable variables in C? In the sense that you intend, no. Or does #define do what you wish?

11. How often do you use threads? As a computer user, all the time. Word and your browser are heavy users of threads. As a developer, never. The scientific computation applications I develop do not call for them. In several embedded applications on which I have worked, we sort of rolled our own threads, avoiding any reliance on the underlying operating system.

12. Do threads need to be started/stopped like processes do? Yes, but the mechanisms for doing so sometimes differ.

13. Is it useful to be learning about one core when all machines have many? This is an introductory class. We are STARTING with one-core machines, hoping to understand what is going on there. We will see some aspects of multi-core machines soon.

14. When a thread is sharing a variable, how do other threads know where to access it? If the variable is shared within the address space of the parent program (as "sum" in today's example), other SIBLING threads access the shared variable by its variable name, which is translated into a memory address. Otherwise, threads can participate in shared memory and message passing using OS calls as in last week's examples.

1. Who wrote all this code? Is it the XINU operating system?
The examples I am showing in class are adapted from figures in our OS text. They are intended to illustrate concepts discussed in the text in (relatively) simple examples of fully running code. Personally, I distrust code fragments; I never know. Today's XINU display was adapted (quite a bit) from Figure 4.9, p. 173.

2. Can you send the same command to two threads at the same time? You CAN broadcast a message to several threads. On a single core, only one of them can run at a time, so one will receive it before the other. If the two threads are running on separate cores, it COULD happen that both receive the message literally at the same time.

3. Can you describe threads in 1-2 sentences? "Light-weight processes." Re-read Chapter 4.

4. Do all servers contain thread pools? Not all. Depends on the application and expected loads.

5. What are magic numbers(tm)? I do not know about trademarked magic numbers, but "magic number" often is used by teachers of programming as a derogatory term for numbers appearing in a program with little indication of their meaning. If I have a payroll program with a "5", is that the number of workdays per week or the percent pay raise to award?

6. How can we tell C to run a program located at a memory location, as we talked about with runner? In practice, except in tightly coded, small embedded systems, the times when you want to do that are exactly like this example: you want to pass one function as a parameter to another. For example, if I want a function to compute (an approximation to) the definite integral from a to b of f, I may write a function double foo(double x);

double integrate(double (*f)(double), double a, double b);

and call it as area = integrate(foo, 0.0, 5.0); In a more operating-system-like application, void callMeIfYouEncounterAnErrorCondition(int condition, char *errorText);

int doSomethingWonderful(void (*errorHandler)(int, char *), ...);

and call it as index = doSomethingWonderful(callMeIfYouEncounterAnErrorCondition, ...);

7. What do you think of VR headsets? Will they flop? There are some serious and some non-serious applications in which they are compelling. I doubt they either will flop or will conquer the world.

8. Are there ways for the OS to communicate with an individual thread besides the mailbox described in class? Sure. The OS is all-powerful. For example, the OS can kill a thread, remove it from the Running state, or alter its internal state (although that would be considered bad practice). Perhaps you mean ways for another thread to communicate through the operating system? A thread can communicate with a thread belonging to a different process (or the same, but why would you) using shared memory handled by the OS. Much more widely used are synchronization primitives considered in Chapter 6.

9. How can we guard against race conditions? Careful programming. Synchronization primitives considered in Chapter 6 are tools we use to help us, but we allow races, and only we can prevent them.

10. How does C prevent race conditions? Does it have a "lock" function or something? Properly, C does NOTHING to prevent race conditions; C has no functions such as "lock". However, standard C libraries provide a variety of synchronization primitives, calling upon operating systems and hardware support. A wise programmer can use these primitives to prevent race conditions.

11. What is the difference between a process and a thread? See examMid08A.html, Problems 3 & 4.

12. What does the code for mailboxes look like? You will write it in Assignment 7.

1) What should we include in the testcases.c file? Devise tests for your four synchronous serial driver functions. TA-Bot is only running a partial test suite for this one during the week; you should also create your own tests to check corner cases and additional combinations.

2) What function should we start with first? kputc().
You need reliable output to see what you're doing.

3) How do we use the turnin command for HW3? Essentially, what parts do we need to turn in? To quote the first paragraph in the assignment specification: "Turn in your source code (kprintf.c and testcases.c file) using the turnin command on the lab machines. Please turn in only the files kprintf.c and testcases.c." Thus, the command would be:

4) Are fields in structs ever private? No. There is no notion of "private" or "public" in C. The closest equivalent is the "static" modifier for functions, which restricts their scope to just the file they are defined in.

5) How are struct variables scoped? If they are always global, how much of a security risk is that? Struct definitions and variables can be scoped globally or locally, just like other kinds of variables. When they are declared inside a function, they are local to that function. Global structs are a significant source of risk in complex software projects; C++ and Java are among the many languages that have aimed to improve upon this with protection modifiers.

6) What does wordp[10] refer to? An entire struct? Yes.

7) What was the first programming language to use something similar to structs? Aggregate records were a feature in COBOL, one of the earliest widespread languages from the dawn of computing in the 1950's.

8) For the homework, when accessing the UART, do we just do base address + (struct pointer -> flag register) to access the flag register data? Or do we not need to add it to the base address? By storing the base address in the struct pointer variable, we are implicitly adding it to any locations accessed through the pointer. Accessing struct fields, whether through static structs or struct pointers, boils down to having the compiler automatically add an offset to some base address.
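The base-plus-offset arithmetic can be checked on any host machine. The following is only a sketch: struct demo_regs is a hypothetical layout (it is not the struct from uart.h), chosen so that a field named fr sits 0x18 bytes into the struct.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical register block: six 32-bit registers, then a flag register. */
struct demo_regs {
    uint32_t regs_before[6];    /* offsets 0x00 through 0x17 */
    uint32_t fr;                /* offset 0x18 */
};

/* The address the compiler computes for p->fr: base + offsetof(fr). */
uintptr_t fr_address(const struct demo_regs *p)
{
    return (uintptr_t)p + offsetof(struct demo_regs, fr);
}

/* Returns 1 when the hand-computed address equals &p->fr. */
int same_address(struct demo_regs *p)
{
    return fr_address(p) == (uintptr_t)&p->fr;
}
```

For any struct demo_regs variable r, same_address(&r) returns 1, so adding the base address by hand is never necessary; the pointer already carries it.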
Thus, given a pointer variable that is already set to 0x20201000, and a struct definition that allows the compiler to calculate that the 'fr' field is at offset 0x18 within the struct, a line like x = regptr->fr; will automatically figure out that 'x' should be loaded with the value in memory at address 0x20201018, which is 24 bytes past the beginning of the struct.

9) So you can #define a struct? Yes, but I can't think of a good reason to do so. See section 6.7 in your K&R C book for a discussion of the differences between typedef and #define.

10) Can you have more than one anonymous struct? Yes.

11) How big is the biggest struct you've ever built? Using spacing macros, I've built control and status register structs that span several kilobytes of space. Networking code will frequently feature structs to manipulate data packets, which in the case of jumbo frames can be tens of thousands of bytes.

12) Could you also use calloc() to allocate memory for a struct? Which is preferred? In practice, you can use any of the *alloc() family of functions for dynamic memory allocation. Calloc() is attractive, when available, because it initializes memory contents to zero. That is probably why it is favored by Linux programmers such as the "How to C (2016)" author. But I've worked on systems where calloc() was not available, or incurred a steep performance penalty relative to malloc(). For this course, calloc() won't even be implemented on our embedded operating system.

13) We have been talking primarily about CPU interactions. Will we touch on GPUs at all? As Graphics Processing Units are typically unable to run operating systems themselves, they usually just appear as another I/O device to the O/S. I do not have anything I'm planning to touch on for GPUs.

14) Why did you laugh menacingly when mentioning fields of function pointers? I did not intend it to sound menacing.
But we'll use fields of function pointers when we introduce a full-fledged device driver layer toward the end of the term. Function pointer syntax is fairly painful, and is one of the things I usually have to look up in K&R when I need to use it.

15) What really happens when you typecast something? In a sense, nothing. Typecasts between pointer types are "free" operations that generate no real machine code (casts between numeric types, such as int to double, can generate conversion instructions). They are, however, valuable hints to the compiler during the typechecking phase. They reassure the compiler that you know what you are doing, and thereby prevent warnings or errors.

1. Which box takes care of storing the message in the case of message passing between two different computers? We will study that in more detail in Chapter 17, but the message is split into TCP/IP packets and transmitted across the network. Both boxes have a role to play.

2. Can message sending act like a subscription where processes can say to the OS, "I want all messages of a certain type."? The sender would designate what type each message has. Message passing systems COULD be designed that way, but in practice, the message passing primitives provided by the OS are relatively simple. More complex protocols, such as the one you suggest, are built by an application atop the simpler structure.

3. What does free() do? The OS must keep track of which memory locations are being used by which processes, and which memory locations are NOT being used. free() must do the bookkeeping that the designated memory is no longer being used by its current owning process and instead now belongs to the pool of memory locations not in use.

D-1. What is the format of the TAbot style command that we can use for testing? The syntax is: xest-test p1 p2 where p1 is the first program, for example the reference implementation, and p2 is the second program to compare, usually your program.
Project 2 was the last assignment in which I can give you a reference implementation on UNIX -- from here on out, we're developing on the embedded MIPS platform. But we have an analogous testing system for the external targets that I will demonstrate on Friday. The xest-test command assumes that there is a subdirectory called "tests", with various subdirectories beneath it containing input files with the extension ".test".

1) What is the story of the "Everything is on fire" interrupt? In my previous life as a research and development engineer for an environmental embedded system manufacturer -- before I went back to grad school -- I worked on a system that used interrupts to detect the regular 60 Hz oscillations of the power supply it was connected to. In rural power grids, brownouts and power spikes are more common, and the 220VAC forced ventilation fan motors the controller managed were prone to overheat and melt the insulation on the windings if they remained fully engaged during a power grid anomaly. Hence, the embedded controller carefully monitored the incoming power. If it detected an impending brownout or power spike, it immediately logged the event and shut down the triacs to the motors during the crucial milliseconds while its own power supply capacitors still ran the embedded processor. Amusingly, one side effect of this was that if you simply unplugged the controller from the wall, its little LCD display would briefly output a little panicky, "Power Going Down!" message for a fraction of a second before running out of juice. So the interrupt did not detect "everything is on fire," but was designed to explicitly prevent that situation.

3) Are there other routers you support? What about hardware obsolescence? See xinu.mscs.mu.edu/List_of_supported_platforms. Obsolescence is always a concern.
If you are interested in helping to finish new ports of Xinu to the Raspberry Pi, or the ARM mbed platform, consider applying to our summer research program (acm.mscs.mu.edu/reu). This could be our final year using the Linksys routers for Operating Systems, if we can complete the necessary infrastructure to move to one of the newer platforms this summer.

4) Is XINU purely academic? No. Variants of the venerable Xinu operating system have been used in commercial products ranging from vehicles to wind turbines, laser printers to pinball machines.

5) How did you figure out you could use the routers as embedded systems platforms? Some industrious Linux hackers had already figured out that they could break into the WRT54G and get it to run third-party Linux distributions that enhanced the features of the device. It seems that I was the first to figure out its usefulness as a cheap and flexible target platform for hands-on computer science laboratory assignments. I (and several of my research students at the time) re-engineered the bootloader code for embedded Linux to instead start our much simpler, bare-bones O/S that could be used for reasonable programming projects. Our system based on routers cost a hundredth of what the experimental target PC labs at Purdue cost, and took a tiny fraction of the lab space. At least half a dozen other universities now use the Embedded Xinu infrastructure we developed here at Marquette to teach some of their systems courses.

-D

1. Can you connect a router to a router modem duo to have the secondary router act as a repeater or booster if the two devices are connected LAN - LAN? Brylow: Yes. Generally speaking, these embedded devices are capable of far more complex configurations than their factory-shipped software can support. There is a vibrant, third-party firmware community that thrives on building more flexible software to run them.

2. How old is morbius? What was used before him/her?
Brylow: Morbius, not unlike his Timelord namesake, has regenerated into several incarnations since first coming online in 2006. The current instance is running on 2.5-year-old hardware, and the virtual machine image dates from May, 2011. Morbius started out as a rack-mounted PowerPC XServe, but has been a virtual server for at least the past five years.

3. What is your favorite video game? Who will win in a fight: a Power Rangers Megazord or Voltron? Brylow: In the year between finishing my undergrad degrees and starting on graduate school, I took about six weeks to play all of "X-Wing". That was the last time I invested any significant time into a commercial computer game. I briefly toyed with the idea of modifying a Wine-driven Windows emulator to support "X-Wing vs. Tie Fighter" when I finished my doctoral dissertation, but never got around to it. I spend so much of my waking time working in front of various digital devices -- it is really hard to get excited about allocating any of my limited recreational time to the machine. Brylow: As for your second question, Voltron is the clear winner. Megazords are for my little brothers.

4. Do you like the "new Who?" Brylow: Immensely. It is so clear that it is made by people who grew up loving the original, like me. But like the original Star Wars -- well, one can never step into the same river twice.

5. Why is there a Playstation in the rack? Brylow: We were porting Embedded Xinu to the multi-core PowerPC Cell processor in the PS3. Sony's decision to eliminate the third-party software boot option in firmware updates killed the project before we were ready to scale it up. So we have a PS3 that can boot Xinu, but cannot be plugged into the network or otherwise be updated in any way.

6. Does XINU have any graphical OS capabilities? Corliss: No. No OS has graphical capabilities. Graphics are provided by applications. However, that argument is weakening with each new modern OS release.
You can run various window managers in Linux. In principle, you can in Windows or Mac OS X. Probably not on your smart phone. Brylow: Various ports of Xinu have run on platforms with graphics hardware, and have had direct support for linear framebuffer device drivers and graphical windowing. The PowerPC, PS3 and Raspberry Pi ports all fall in this category. There's a research poster for turtle graphics on Xinu in the hallway outside of my office. Last year's students from my Embedded Systems course built a touchscreen keyboard device for Raspberry Pi Xinu, which naturally included a graphical component.

7. Can you run a graphics program under XINU? Corliss: Sure, if you are sufficiently clever, but you need your serial driver to work first. Brylow: Even with the limited serial interface on the WRT54GL routers, we have built frameworks that use terminal capabilities to create simple windows, display colors, and move the cursor around the screen to accomplish simple GUI tasks. With graphics hardware, it is much simpler. Again, see the research poster with pretty pictures in the hall leading to my office.

8. Do you know if you can boot an OS you made onto a VM like Virtual Box? Corliss: I have not done it, but if your OS boots on a bare machine using techniques similar to usual operating systems, I would assume so. Brylow: Embedded Xinu boots on several variants of the Qemu virtual machine, and it is not hard to get your own code running on a raw VM.

9. How does checking a system return help prevent a fork bomb? Doesn't the system return just alert us that the fork could not be created? Yes, you are right. CHECKING the return does not prevent anything. What is really preventing the fork bomb program from crashing the system is that fork returns an error code if it cannot claim enough memory, rather than crashing then and there.

10. In Java, how do synchronized methods prevent race conditions?
Java synchronized methods are an implementation of MONITORS, which we see in Chapter 5. Basically, the "shared" resource belongs to a single process/thread, so it cannot participate in a race condition. Two other processes that wish to access the "shared" resource each call a synchronized method, and the Java run-time message passing ensures that the synchronized method can receive only one message at a time.

11. Is a process responsible for context swapping between threads, or is the OS? OS, in fact, YOU, in the next assignment. That holds whether swapping processes or threads.

1) Wouldn't blocking essentially waste a lot of time in many cases? Yes, that is why the operating system moves the blocked process A to a Wait state and switches a Ready process B into the Running state. Very soon after the message (or other resource) for which Process A was waiting arrives, the operating system moves Process A from its Wait state into the Ready state. Soon, it gets its turn at the CPU. Process A waited however long it took its message to arrive, but other processes were not disadvantaged by Process A's waiting. Study and consider the implications of "that diagram" (Figure 3.2).

2) Clearly, I need to read, because these explanations just don't make sense. Agreed. I assume you are able to read the text.

3) In line 47 of message_send.c, why did you use sbuf.mtype = 1; and not sbuf->mtype = 1? Let's unwind the data types. Line 25: message_buf sbuf; Lines 15-18: typedef struct ... message_buf; Hence, sbuf is a struct, not a pointer to a struct, so its fields are accessed with "." rather than "->".

4) Are there any figures or examples of context switching to which I could refer? The photos from previous years' lectures at Assignment 4 may help a little.

As usual, Google is your friend. (What do you do with your friends? You USE them! — Prof. Jacoby)

For example, see The latter is a slide deck from lectures at the University of Nebraska - Lincoln. They include the only FIGURES I happened to find.

5) Should homework/project questions be dealt with only in Dr. Brylow's office hours, or can Dr. Corliss's be used as well? Dr. Brylow is responsible for lab assignments.

6) For processes over networks, how can the same key be shared? What is needed is SOME concept of a "name" for a mailbox. There are MANY variants. One is that the sender sends to an IP address (machine) and then to a mailbox or a socket (see Wednesday's class) on that machine.

7) Can fork() happen over a network? Not exactly, but a full network OS can have a similar effect.

8) Is control switched every time a message is sent or received? Usually, only when send() or receive() must block. In most applications, send() is non-blocking, and receive() is blocking.

-- Dr. Corliss

Questions from cards Monday, Feb. 3, 2014 (Assignment-related):

1) In our embedded system, how are the UART registers able to be mapped to memory? Aren't the registers part of a different piece of hardware? This is called "memory-mapped I/O". The processor has a memory bus that directs read and write requests to the RAM. Many modern systems set up the addressing mechanism of this bus so that certain, special memory address requests go not to RAM, but to other pieces of hardware such as I/O devices. In this way, the control and status registers of an I/O device appear to the programmer as though they sit "in memory" at certain locations. On our router platform, the UART registers appear at memory location 0xB8000300. Other devices, such as the second UART, the Ethernet core, the Wi-Fi core, etc., appear in memory locations nearby.

2) How will Project 3 be tested? There will be 8 points for each of the four functions to be completed in kprintf.c. Our testing harness uses our own set of test cases to run your synchronous serial driver through the paces.
You can see a subset of these in the TA-bot output each night. Finally, the remaining 8 points will be assigned based upon the breadth and quality of your own testing efforts in testcases.c. If you're asking about the details of how we test your code, we combine your synchronous serial driver with a more complete version of the O/S that allows us to communicate testing info even if your driver isn't working properly, and that allows us to run our own set of test cases.

3) Do we have to mask the bit when the transmitter is empty for bit 6 on HW 3? I don't have the bit positions committed to memory, but let me point out that there are human-readable bit flags defined in uart.h that may be of assistance when mapping the UART documentation to workable code. And yes, you'll want to mask off the non-relevant bits from the line status register before making any comparisons.

4) I still cannot get my e-mail to forward. Come to Dr. Brylow's office hours. Or, edit the file ".qmail" in your home directory to contain a single line consisting only of your forwarding e-mail address.

5) Can you explain pucsr->buffer = 'H';? The variable is named "pucsr", short for "pointer to UART control and status registers". You can see it defined as a local variable in each of the driver functions: volatile struct uart_csreg *pucsr = (struct uart_csreg *)0xB8000300; The keyword "volatile" is just a modifier to tell the compiler not to try out any of its fancy optimization tricks on this variable, because it will point to hardware registers instead of RAM. The "struct uart_csreg *" is the type of the variable, a pointer to a struct type called "uart_csreg", which is defined in uart.h. The pointer is being assigned to the memory-mapped I/O address for the start of the UART registers, 0xB8000300. The typecast is there to prevent a compiler warning about assigning integers (0xB8000300) to a pointer (pucsr) without a cast.
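The volatile pointer and the bit masking from question 3 can be tried on a host machine by pointing the struct at ordinary RAM instead of a hardware address. This is only a sketch under loud assumptions: struct demo_uart, its lsr field, and the DEMO_LSR_THRE bit value are hypothetical stand-ins, not the definitions from uart.h; only the field name "buffer" comes from the assignment code.

```c
#include <stdint.h>

/* Hypothetical, simplified stand-in for struct uart_csreg in uart.h. */
struct demo_uart {
    volatile uint32_t buffer;   /* register 0: transmit/receive data */
    volatile uint32_t lsr;      /* line status register (assumed name) */
};

#define DEMO_LSR_THRE 0x20u     /* assumed "transmitter empty" flag bit */

/* On hardware the pointer would hold a fixed address such as 0xB8000300.
 * Here it points at ordinary RAM, with the status register preset so the
 * transmitter looks empty and one unrelated status bit is also set. */
static struct demo_uart fake_uart = { 0, DEMO_LSR_THRE | 0x01u };

struct demo_uart *demo_device(void)
{
    return &fake_uart;
}

/* Write one character once the (simulated) transmitter reports empty. */
int demo_putc(volatile struct demo_uart *u, char c)
{
    /* Mask off every bit except the one we care about before testing. */
    while ((u->lsr & DEMO_LSR_THRE) == 0)
        ;                       /* busy-wait; a real device updates lsr */
    u->buffer = (uint32_t)(unsigned char)c;
    return (unsigned char)c;
}
```

Because the fields are volatile, the compiler must re-read lsr on every pass through the loop rather than caching it, which is exactly the behavior a hardware register needs.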
In the line that sends an 'H' to the UART, we see "pucsr->buffer" on the left side of the assignment. The "->" operator is a useful shortcut for pointers to structs. It says, de-reference the pointer variable (pucsr) and access the field "buffer" at that location in memory. With the pointer set to the start of the UART registers, each field of the struct corresponds to a different register on the UART. "buffer" is one of the names for register 0, which when written to, is the transmitter hold register (THR) of the UART. Values written to this location will be transmitted by the UART across the external serial connection, via the mips-console system, to your terminal window. The assignment writes the ASCII value 'H' to the transmitter, and an 'H' should appear when you run this code.

1) Wouldn't blocking essentially waste a lot of time in many cases? [Corliss: Yes, that is why the operating system moves the blocked process A to a Wait state and switches a Ready process B into the Running state. Very soon after the message (or other resource) for which Process A was waiting arrives, the operating system moves Process A from its Wait state into the Ready state. Soon, it gets its turn at the CPU. Process A waited however long it took its message to arrive, but other processes were not disadvantaged by Process A's waiting. Study and consider the implications of "that diagram" (Figure 3.2).] Note that George is talking about a full-fledged O/S here. In our current assignment, your primitive serial driver does, indeed, waste a great deal of time waiting for the UART hardware to catch up. And the O/S is not yet clever enough to go do something more useful while it waits. That would require separate processes, context switching, interrupt-driven device drivers, and process queues. We will introduce an interrupt-driven, asynchronous version of this serial driver later in the term, after we've built more of those prerequisite O/S pieces.

-Dr. D

1) Why is foo() the standard function name for examples? "The word foo originated as a nonsense word from the 1930s, the military term FUBAR emerged in the 1940s, and the use of foo in a programming context is generally credited to the Tech Model Railroad Club (TMRC) of MIT from circa 1960." - wiki/Foobar. See also stackoverflow.com/questions/4868904/what-is-the-origin-of-foo-and-bar. I'll let YOU decode "FUBAR."

2) Can you have a server or client written in C connect to a client or server written in Java? Yes, thanks to standards. We will look at the HTTP standard in April.

3) Is it more efficient to request a machine other than your own to run trivial processes instead of doing it on your machine, or does it take too long to "talk"? That depends on how you interpret "efficient." Purveyors of cloud-based services have bet HUGE sums of money that you think the answer is, "Yes." If the server is much more powerful than your machine, or if it has unique resources (such as a file you don't have or a program for which you have no license), the answer is, "Yes." To discover today's date, not so much.

4) Prof. Corliss, why do your emails not show up in Chrome most of the time? The most likely culprit is my digital signature. We will study encryption and digital signatures in early April, see Chapter 15 notes. Suppose you receive a message in the early morning hours of Feb. 19 (the day of our Exam 1), apparently from me, announcing that the exam was canceled. I HOPE it would occur to you that the message just MIGHT be a fraud, and one of your "friends" is having "fun" with you. You should want some evidence that the message really came from me. (One hint: If you receive it at 1 or 2 A.M., it is probably not me; I don't get up until 4 A.M., but I am almost always asleep by 9 P.M.) The digital signature I usually include (omitted from this message as a test) allows you, if you care, to raise your level of confidence that the message really is authentic.
Most modern email clients handle digital signatures without you even knowing, but some do not. If your client does not handle digital signatures, the message usually appears empty. I hate to blame the victim, but you might consider a different email client. -- Dr. C.

Questions from cards Wednesday, Feb. 5, 2014 (Assignment-related): 1) Can there be more TA-bot runs? In principle, yes. However, I'm wary of making everyone too dependent on TA-bot for basic testing. Designing strong test cases is a critically important skill -- we're actually going to assign some points based upon how well you test your code. Also, I noticed that no one submitted anything for the first two nights that TA-bot could have run for this project, and I really want TA-bot to encourage everyone to start earlier, so that they have more opportunities for feedback, more opportunities to ask good questions in class and in my office hours, more time to think about the problem between coding sessions. Increasing the frequency of TA-bot runs, especially in the few days before the assignment is due, seems like it might undermine both of those instructional goals. 2) For homework 3, when kgetc() is called, should it only take one character, or should you be able to keep typing? Each call to kgetc() should return one character. 3) What IDE does the Xinu embedded systems team use? Most of Team Xinu prefers command-line tools, vim, and emacs. Those who go in for an IDE generally prefer Eclipse. 4) Can you run embedded Xinu in a virtual machine? Yes. Embedded Xinu runs on the Qemu virtual machine, both big-endian and little-endian MIPS versions, as well as a Pentium port, and I've heard reports that an offshoot of our ARM port works in a Qemu virtual machine. However, the virtual platforms are not identical to the WRT54GL, so there are differences in the device drivers, boot process, and memory-mapped I/O locations. 
So, the research version of Embedded Xinu runs on virtual machines, but the stripped-down version you're writing for this class will mostly not. -Dr. D

1) I noticed you did not consider fp (frame pointer) as an important register. Any reason for that? It *is* important, in the sense that it is callee-save, and must be preserved across calls. But we don't have to use it as a frame pointer if we don't want to. On a CISC architecture like the Pentium, the frame pointer (called the "base pointer" in Intel-speak) is a critically important register. It anchors the stack frame, serving as a fixed point of reference for reaching arguments, local variables and return addresses. This is because the stack pointer moves around a lot during the lifetime of a function on Intel architecture -- pushing and popping callee function parameters, temporary values, even the return value. You need both a stack pointer and a frame pointer when writing Intel assembly functions. Not so on MIPS. The key insight from the RISC crowd was that most of that stack action on CISC machines is generating unnecessary traffic through the bottleneck of the bus between processor and main memory. Store all of that stuff in local registers most of the time, only pushing it to the stack when necessary. The result is that MIPS stack frames are almost always a fixed size, known at compile-time. The compiler totals up the register saves and parameters, rounds up to a multiple of 16 bytes, and that is your activation record size. MIPS functions subtract that value from the stack pointer register once in the function prologue, and then restore it in the function epilogue. In between, the stack pointer stays put, and can be used to reference arguments, locals, and register stores through load word and store word opcodes with known, fixed offsets. Thus, the frame pointer is redundant. Any called function can still make use of the frame pointer, merely by copying the stack pointer to it during the prologue, before allocating the stack frame. 
Because it is callee-save, your local use of the frame pointer can't be interfered with by interacting with other functions that ignore the frame pointer, as long as they still treat it as callee-save, as required by the convention. 2) How can I email an attachment through putty? uuencode does not work. Not certain what you mean by that. Do you mean to ask how you can create a mail message with an attachment on the remote Unix machine you are connected to through putty? It is certainly possible to do this through primitive console commands (TA-bot does it, after a fashion), but the easiest way is to use a proper, console-based mail reader to create the message. I recommend "mutt". 3) Why do you need multiple process queues? For this assignment, you do not. In future assignments, we will have additional process queues that correspond to other things that a process might wait for. Examples: Asynchronous I/O devices, timers, and interprocess communication primitives. When a process "blocks" on an I/O operation, the O/S is going to send it to a queue of processes waiting on that I/O source, and that is a different queue from the readylist. 4) What is your favorite ice cream? Kopp's grasshopper fudge. 5) Can you hold lab in this classroom? No. The room is used by the Biology department for colloquia on some Fridays. The problem of running out of room in the Systems Lab at the 4pm time slot is indeed vexing. The section is oversubscribed because, in a previous year, we had the registrar take the room capacity limits out of Checkmarq so that students wouldn't get locked out of registering for the course just because we'd run out of room in the optional lab section. At the time, we were running perhaps half a dozen more people over the lab capacity, not *double* the capacity. In future, I guess we're just going to have to bite the bullet and limit lab sections to 25-30 again, and there will have to be multiple sections from the outset. 
However, I know that almost all of you *can* come for the 4pm time, and simply prefer not to. Today we had five dedicated students come for 4pm, and it certainly seemed like we were pushing 45 at 3pm. I don't really blame them -- I'm not super happy to be presenting the lab material twice in a row after a long day and a long week, and I, too, would rather be done by 4pm on Friday. But these are the times we were given. I'm open to suggestions from the group on how we can properly incentivize a more equitable distribution of students between the lab sections. I would really like for everyone to be able to sit in chairs, login to a machine, and follow along / get started. 6) Can you explain *stkbase again? Relating to the lowest memory address and position of the stack. The stkbase field of the process control block should be used to store the lowest (numerically smallest) address in the block of memory set aside for the process stack. At this stage, it is purely for bookkeeping. When, in a few more projects, we ditch getstk() and replace it with a proper malloc() call, stkbase will be used to store the pointer returned by malloc(), and, more importantly, will be used to free() the stack memory when the process is kill()ed. -Dr. D

1) What does "User thread" mean? Can we as a user create it? How? On Monday (2/11) we will spend a little time discussing user mode vs. kernel mode of operating system operation. See Section 2.7. 2) Is the kernel named after corn? Or as the Indians call it, "maize?" No, but I appreciate your corny question. Actually, most of the rest of the world calls it "maize," too.

3) Any insight into what happens when a computer freezes? "Freezing" can be a symptom of many different diseases. Usually, it is a result of some sort of infinite loop that does not behave as intended. Deadlocks (see Chapter 7) are one manifestation. Sometimes, disabling interrupts (as you will do in coming homework projects) leads to an apparent freeze because the timer cannot interrupt so the scheduler can remove the offending process. Some types of excessive resource requests can lead to an apparent freeze. On some systems, a fork bomb leads to a freeze, rather than a crash. 4) What is a Cascading Style Sheet? Cascading Style Sheet (CSS) is a technology used in coding Web pages to help the web page author control the appearance of web page elements. For example, in your current project assignment, one of the headers is coded in HTML as 5) In the homework, kputc() can return SYSERROR. What is that? It is #defined as (-1) in kernel.h, and is the general error code we return from system calls when something goes wrong. 6) Is the difference between threads and processes how memory is allocated between them? That often is PART of the difference. Generally, two processes have separate memory allocations (although portions can be shared), while two threads belonging to the same process share some memory and have some separate blocks of memory. 7) Can you go over clone() once more? Yes, I CAN; no I WON'T. If you program Linux applications and you need clone(), RTFM (Read The Fine Manual).

1) What are other functions of threads? If you mean applications, we will talk on Wednesday about how your browser and your word processor use threads. 2) What happens if a pool of 100 threads uses up all memory? Excuse the "blame the victim" answer, but if a pool of 100 threads uses up all memory, you probably should not create 100 threads. 3) Are there other ways to do multi-thread programming? Yes, the book gives examples using the Win32 API (Figure 4.10) and Java (Figure 4.11). There are other libraries, too. However, multi-thread programming is difficult, no matter what library you use. 4) Why does the runner thread have to be a pointer? You pass to pthread_create() the ADDRESS of the code it should execute. 5) "After a fork(), what do processes share?" Did this question refer to share as the same memory locations for the data, or more about having the data in common but in separate copies? My explanation (and that of the book) is a little fuzzy because the details are different in different operating system implementations. Conceptually, after a fork(), the parent and the child processes are separate and have separate memory locations, although the contents of those two memory areas are the same. By that device, they can share resources such as files open before the fork() (probably not a good idea). The point today is that creating and running a new process is conceptually (and often in practice) a FAR more expensive operation in terms of CPU and memory use than creating and running a thread. Threads were invented to be light-weight processes. 6) Explain again what the parent and child do and do not share? In principle, parent and child processes are separate and have separate memory locations, although the contents of those two memory areas are the same. Generally, they share nothing, unless they do so explicitly. Parent and child threads share as much as they can. Generally, that includes executable code and explicitly declared global variables. 
They may share some registers if the programmer or the compiler thinks it is safe to do so. 7) What are the differences between processes and threading? See 5) and 6). 8) Can you go over how to prevent unfair thread spamming? We'll touch on that next week in Chapter 5. 9) If global variables are frowned upon, what is a better way to pass variables? Use pointers? No, because then the pointer would be a global variable. 10) Would a thread pool just be an array? Or even a thread pointer? Operating systems programmers (and many programmers of high-performance applications) prefer arrays (used wisely) to dynamic data structures, so a thread pool is likely to be an array of pointers to Thread Control Blocks, which in turn are likely to be in an array. When either array is not large enough, we can either chain several arrays (a linked list of arrays) or allocate a larger array and copy everything.

1) Can you expand on the difference between PRFREE and PRREADY? PRFREE is used for PCB entries that are not in use. They are not processes, in some sense -- they are PCB slots waiting to be used when a new process is created. 2) If a program can have many processes running simultaneously, why can't each process share the run-time stack? Is it related to efficiency and speed? Each thread of control in a multi-threaded or multi-process program may share some state, but must also have some unique, independent state. At the very least, this independent state includes the current program counter and the activation records of function calls that are currently running. This state is what is stored on the run-time stack. If you share the run-time stack, then you can't really have independent threads (or processes) of execution. 3) Will our processes keep track of parental relationships? Not directly. If a parent wishes to communicate with its child, it will be able to store the child's PID in a local variable when it uses create() to make the child. 
4) ...Processes are always in a suspended state if they are needed but not being used? In our O/S, PRSUSP is currently used for processes that are under construction, or are otherwise not ready to run for some unspecified reason. 5) Are there any outside references you can recommend for someone confused by the course material? There are many good O/S textbooks that you can consult, in addition to ours. If you are confused by MIPS material, I can recommend the programmers' reference manual for MIPS, or "See MIPS Run". There are also a variety of textbooks on earlier versions of the Xinu operating system that might be helpful.

Questions from cards, 2012: In this class, we are creating an operating system using another OS (Linux). Just wondering. How was the first OS developed?